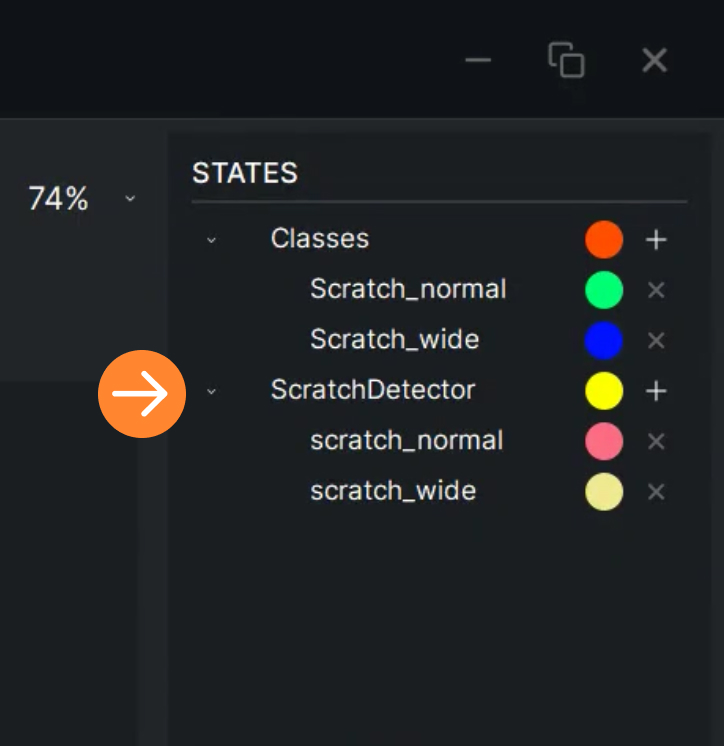

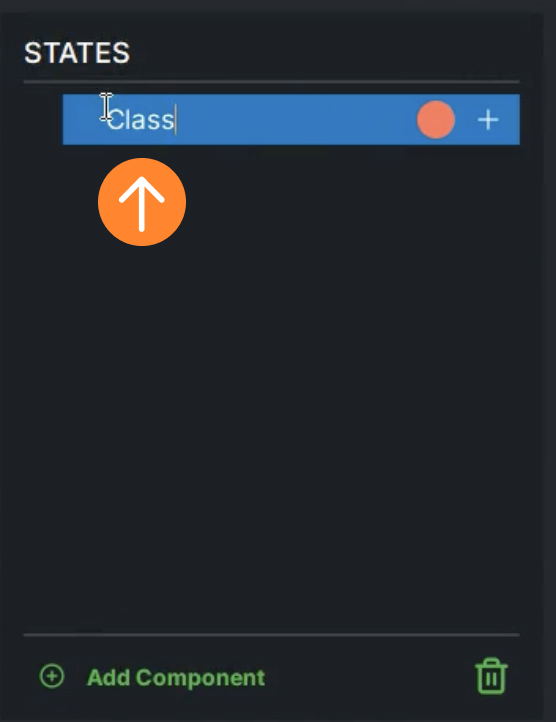

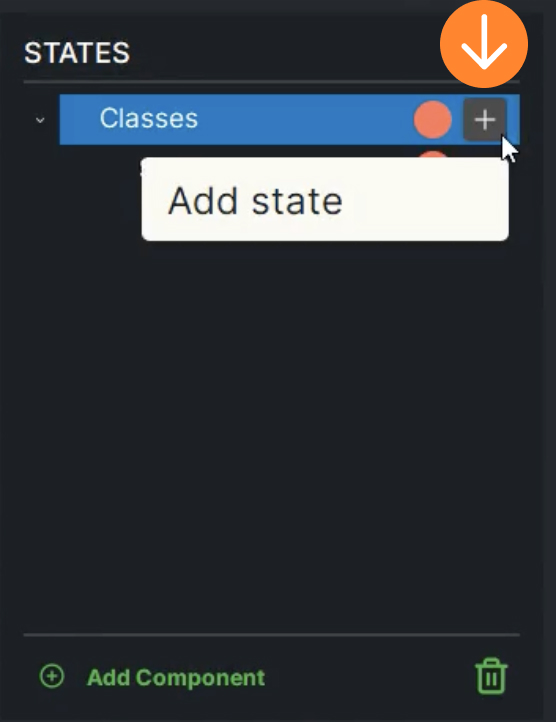

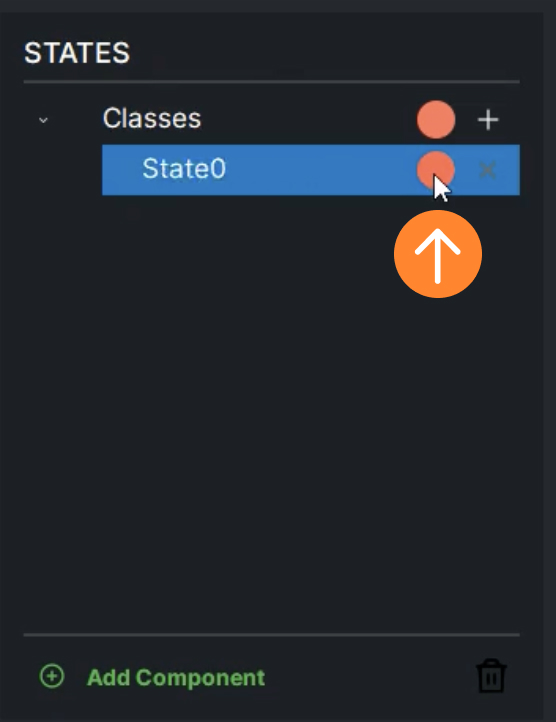

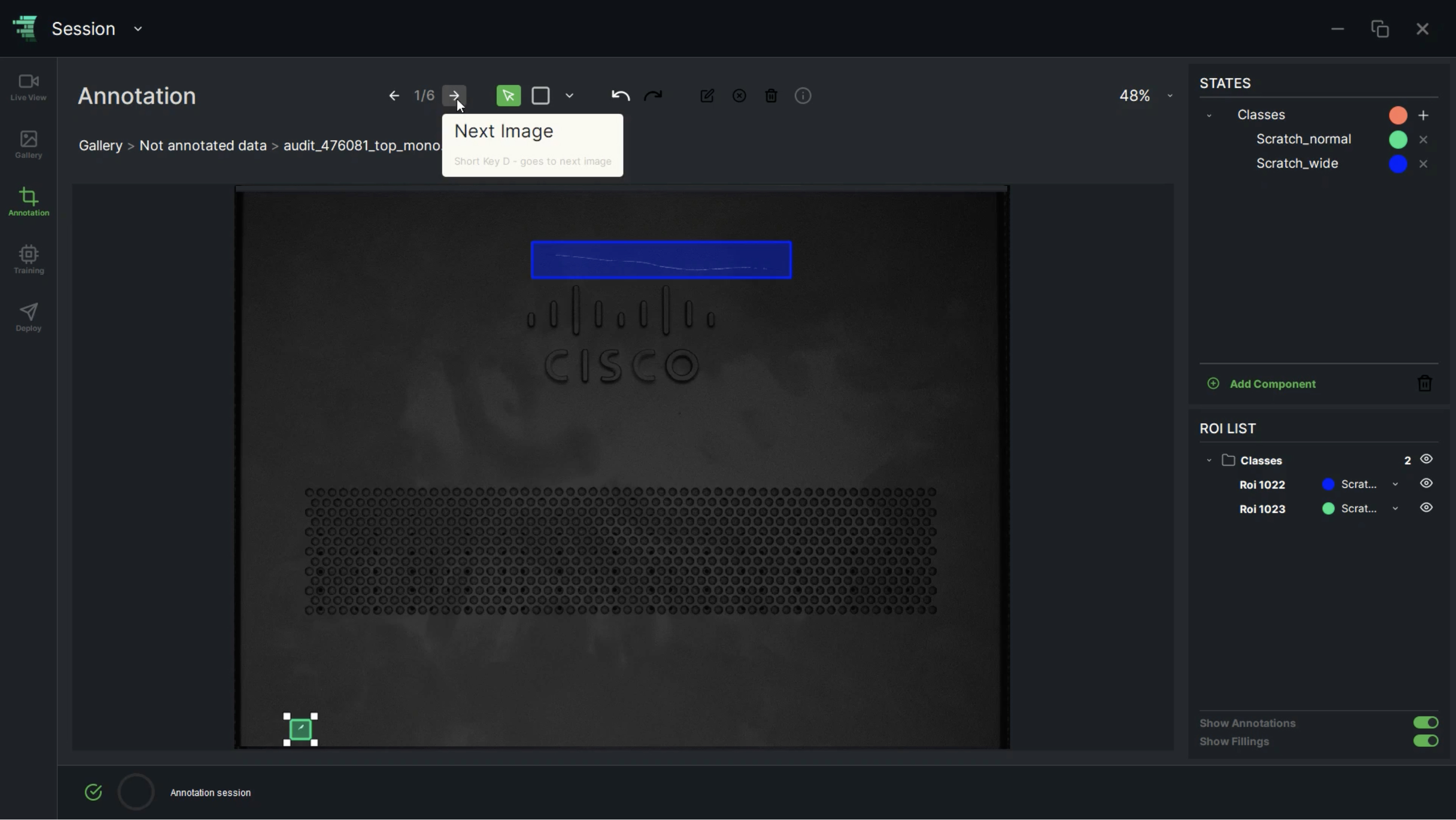

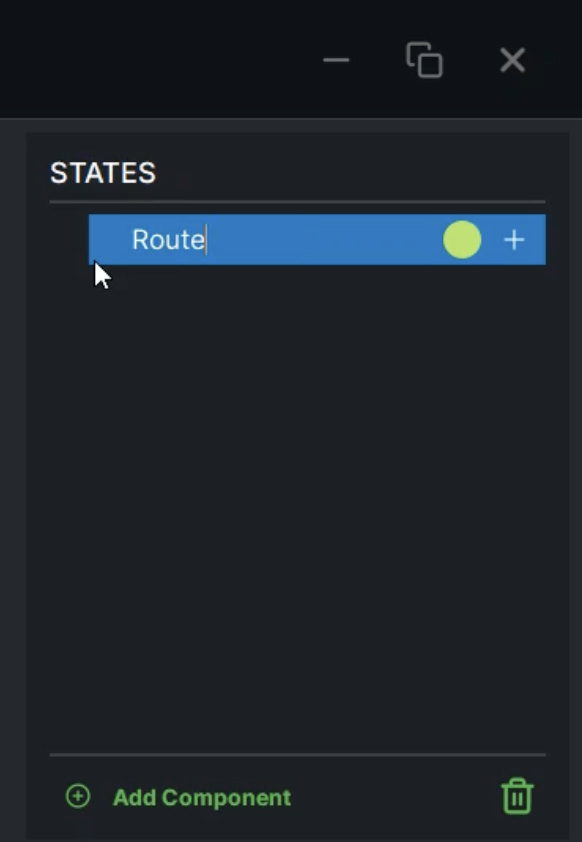

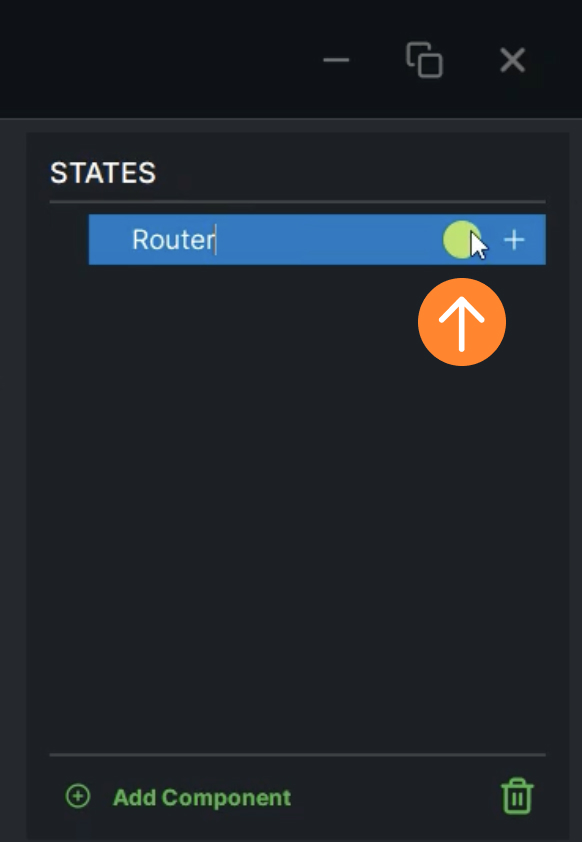

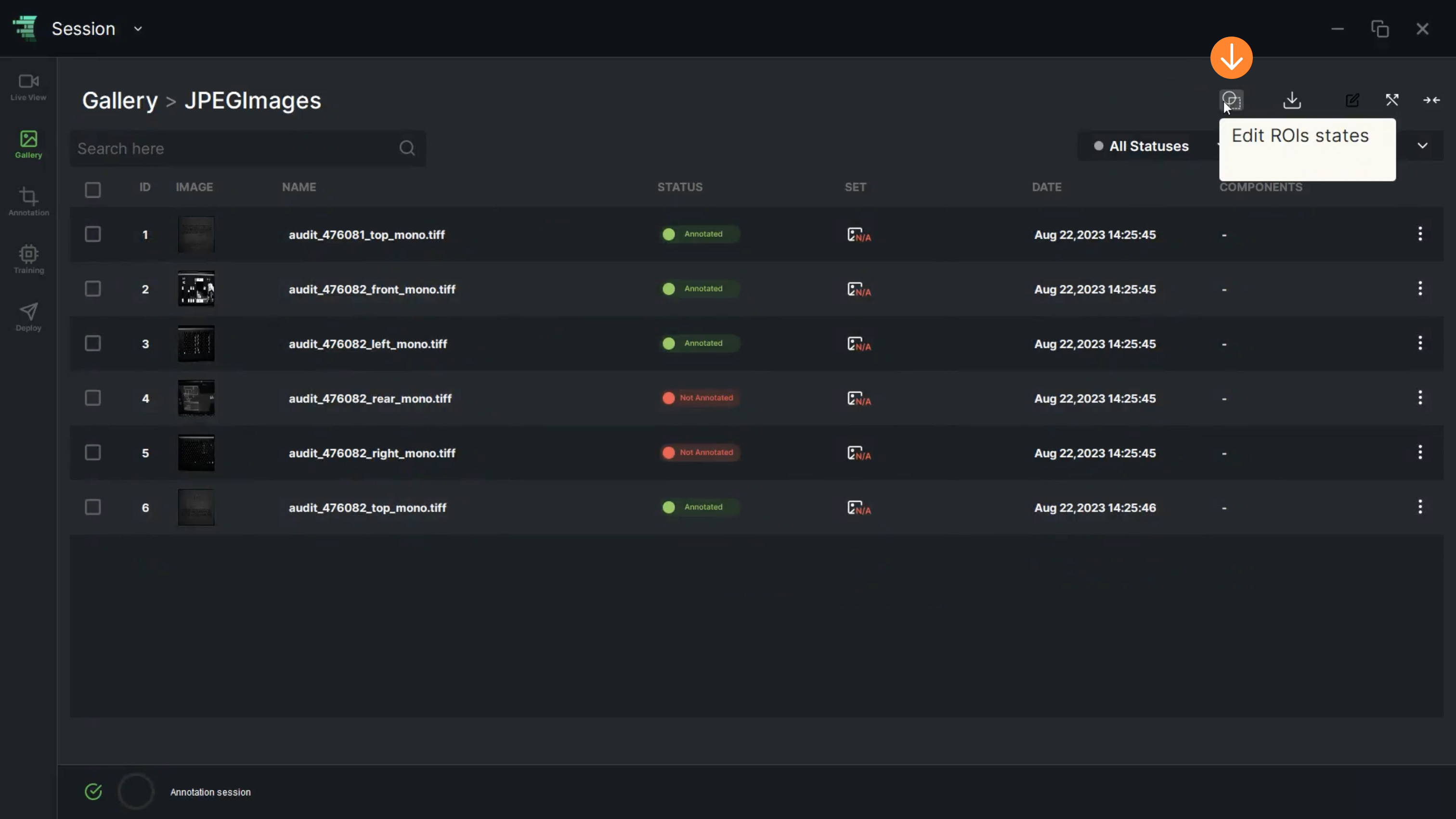

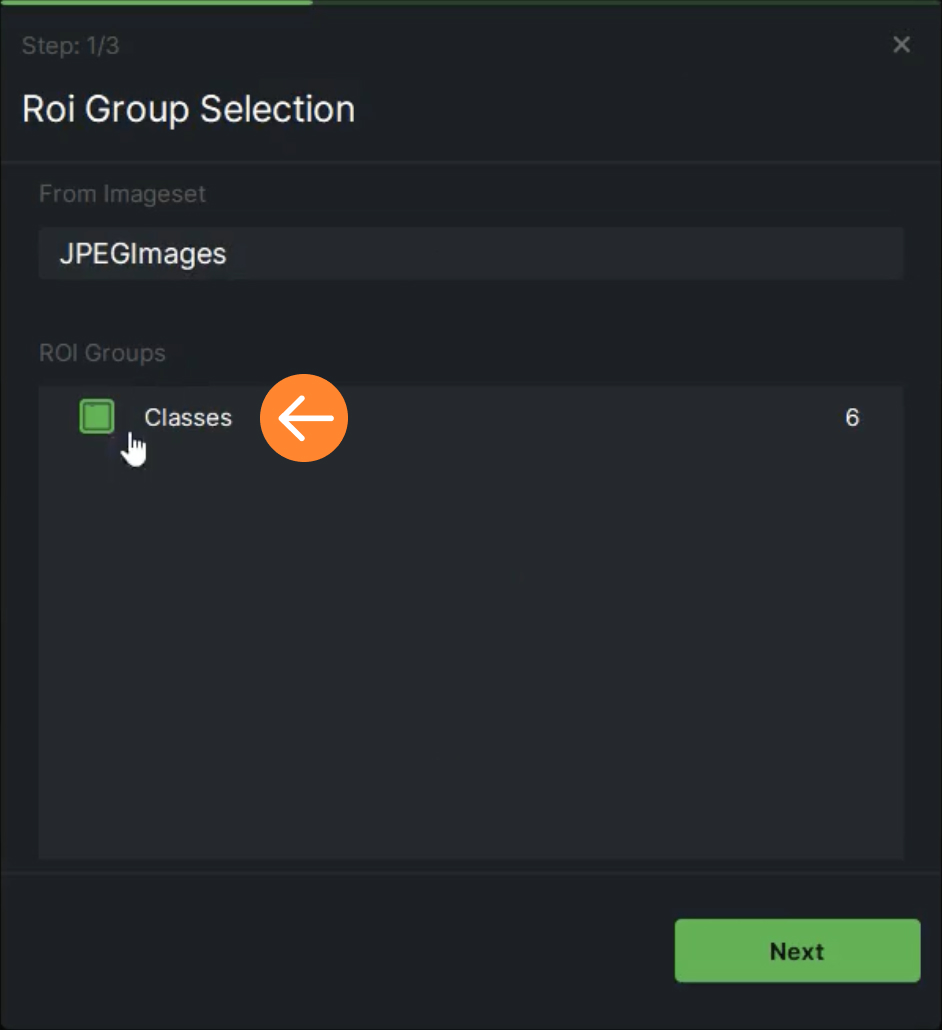

1. In the image list, “Edit ROIs States” allows the user to change the state of ROIs

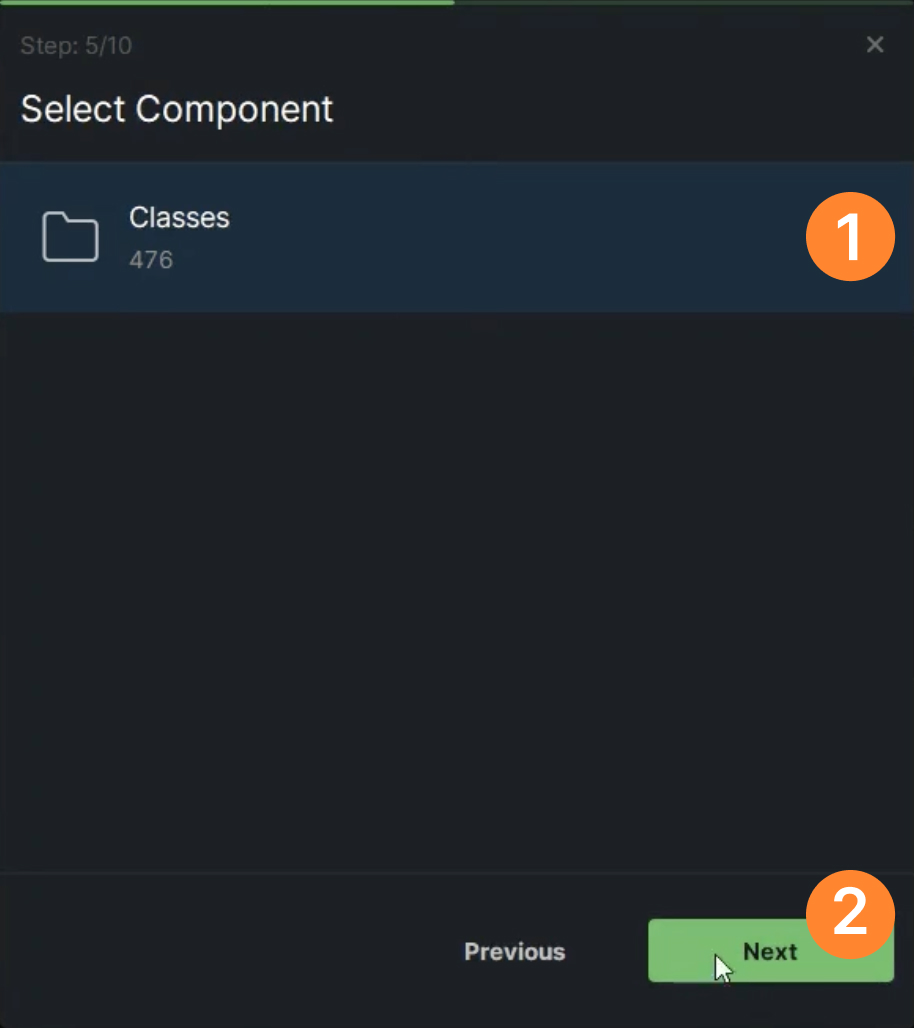

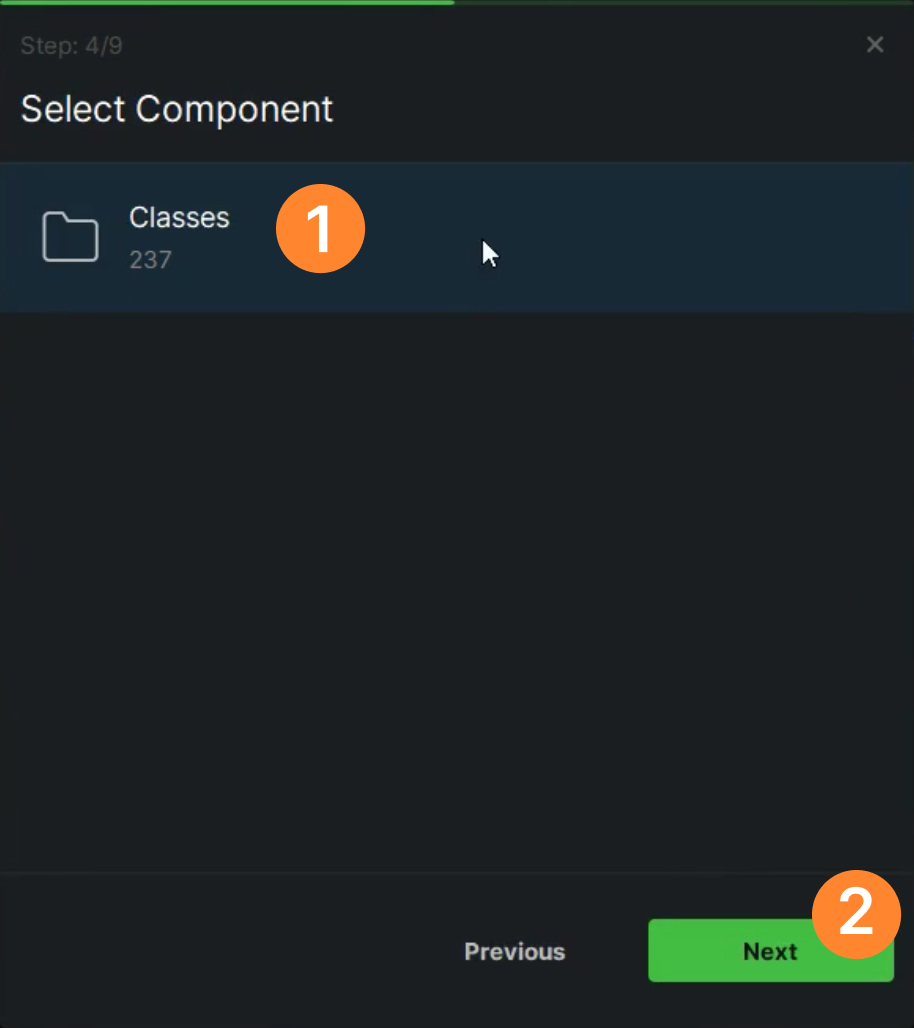

- Make a selection from the available “ROI Groups”. After choosing the component name “Classes”, click “Next” to proceed

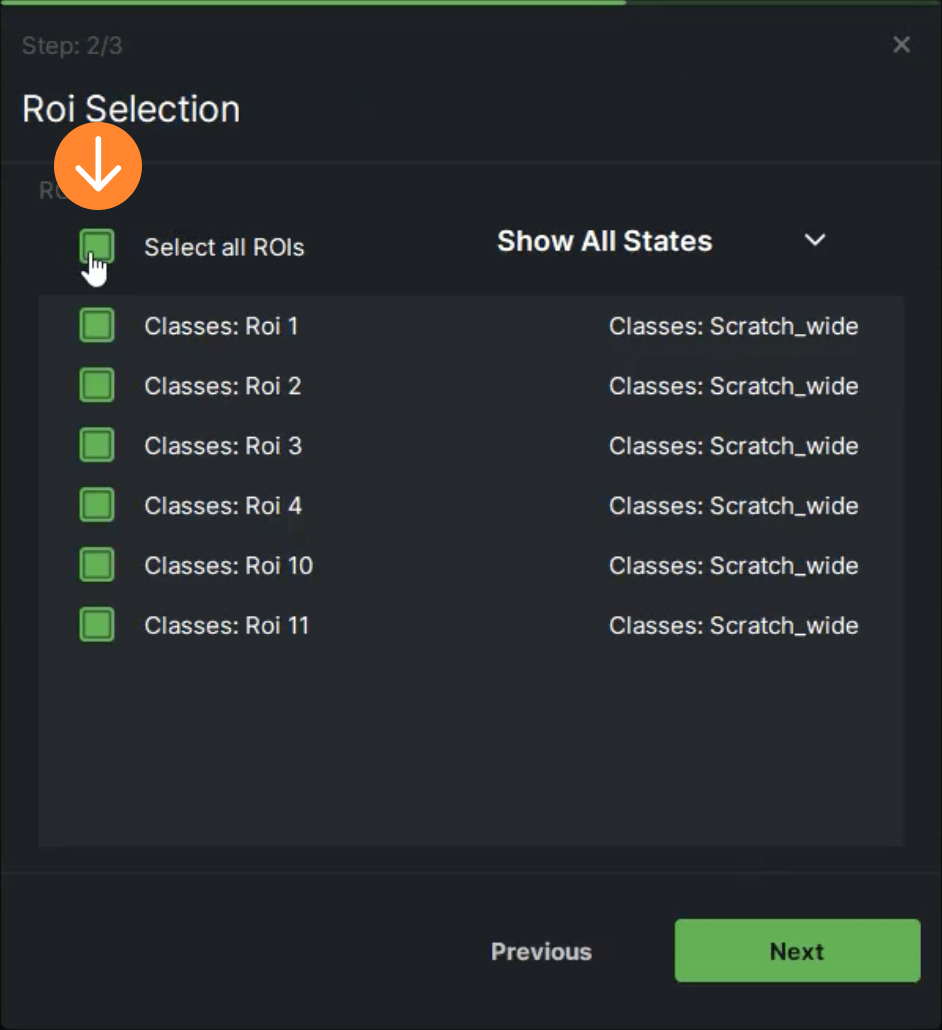

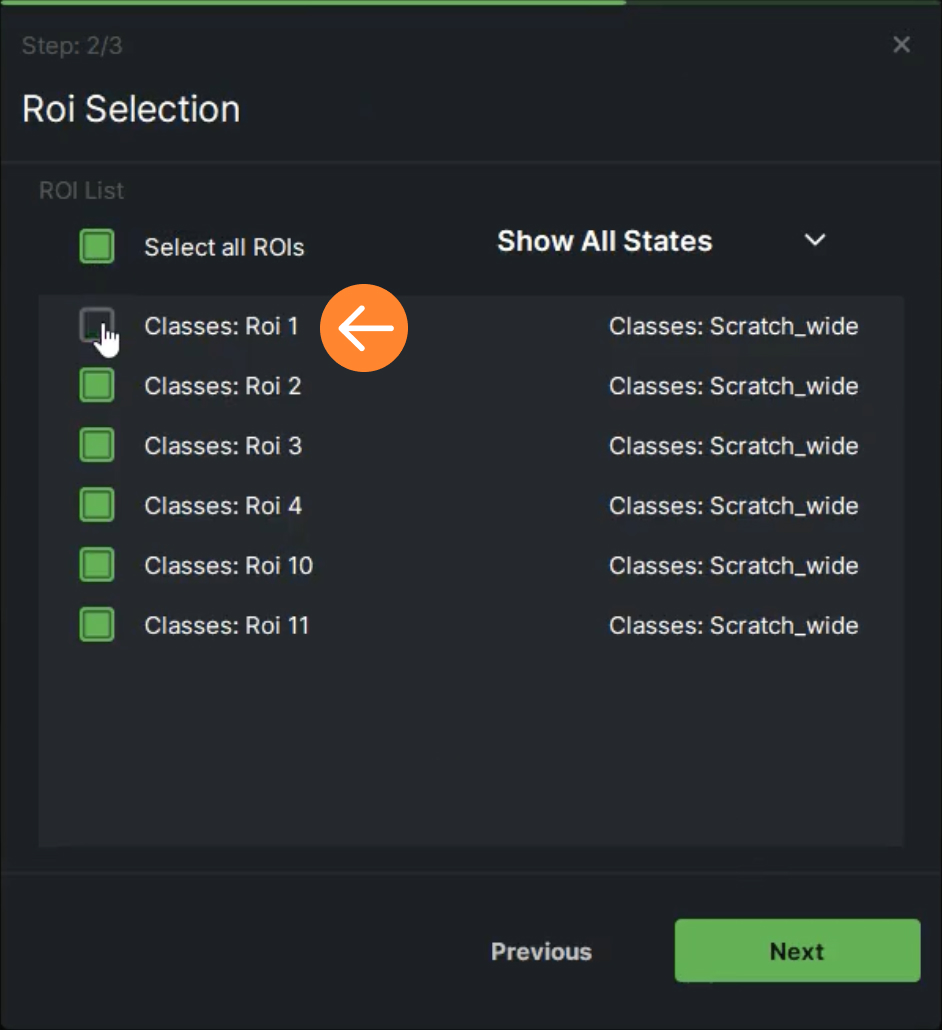

- Marking the “Select all ROIs” checkbox selects all ROIs at once. Alternatively, specific ROIs can be individually selected or deselected from the list

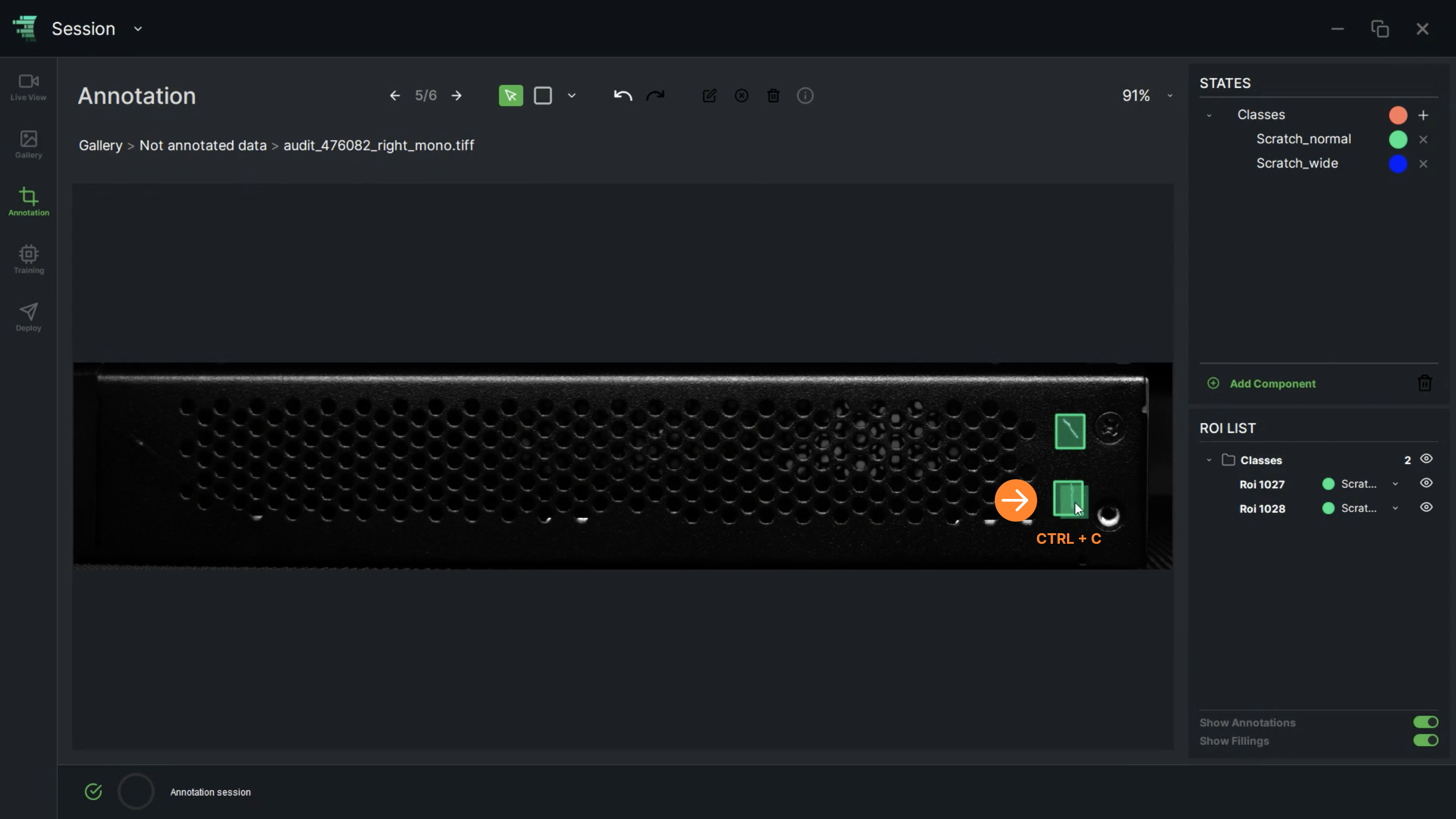

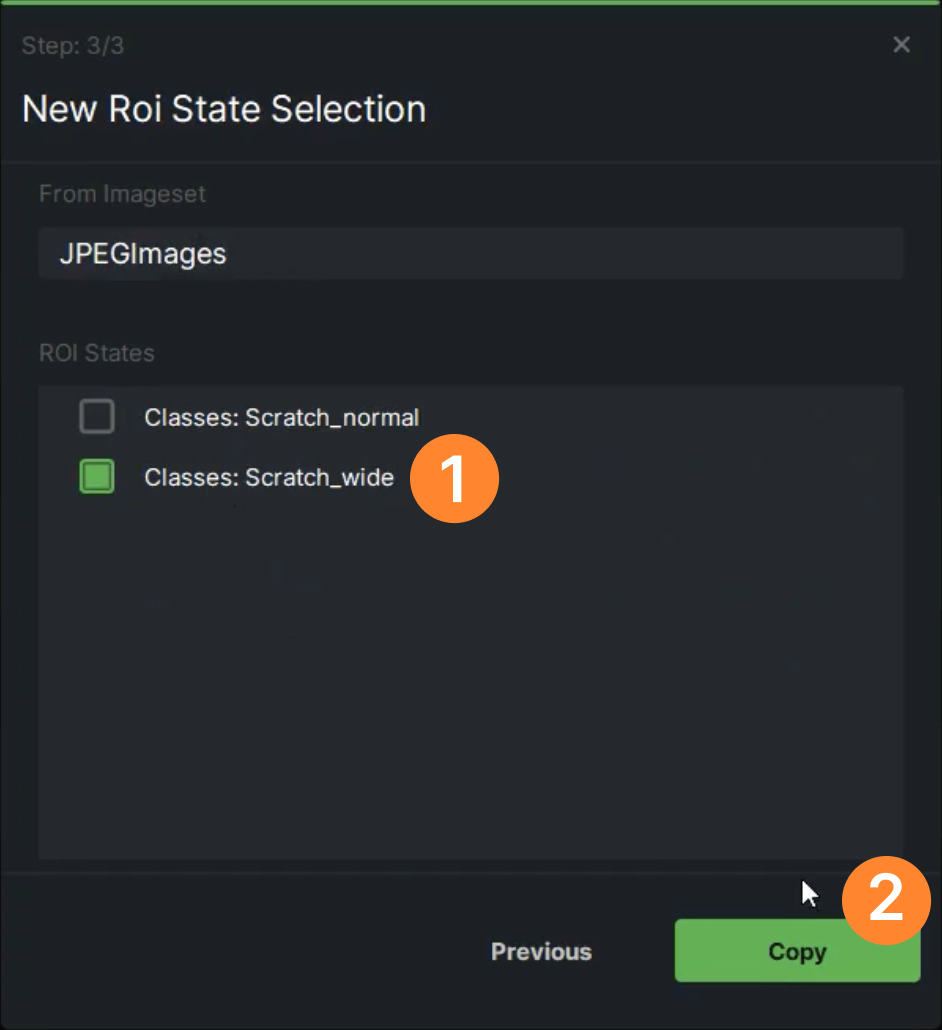

- First choose one of the defined states to assign as the new ROI state for the dataset. Then click “Copy” to confirm the assignment

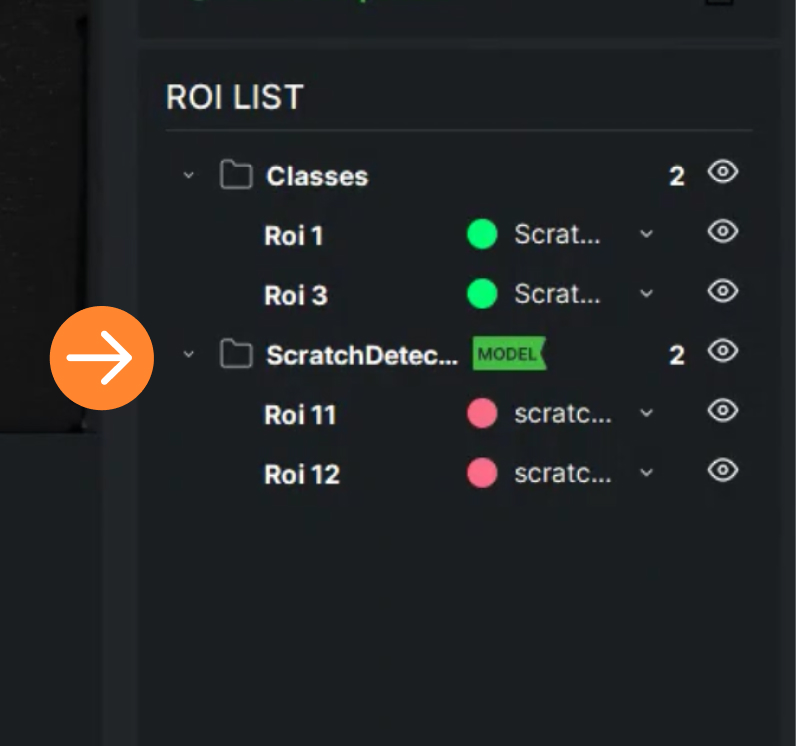

2. The dataset is updated with the new state or states, which the user can review in the ROI LIST

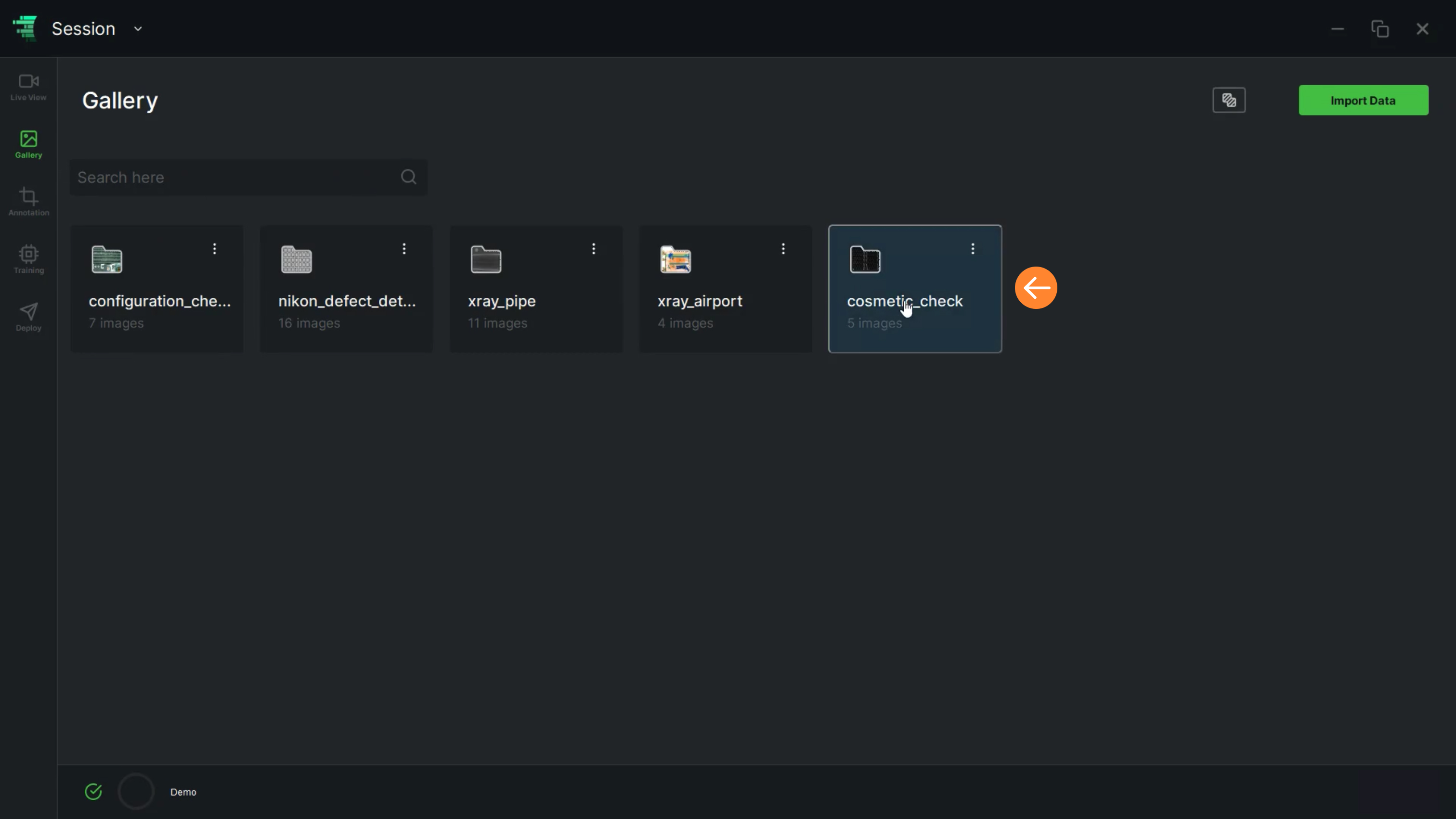

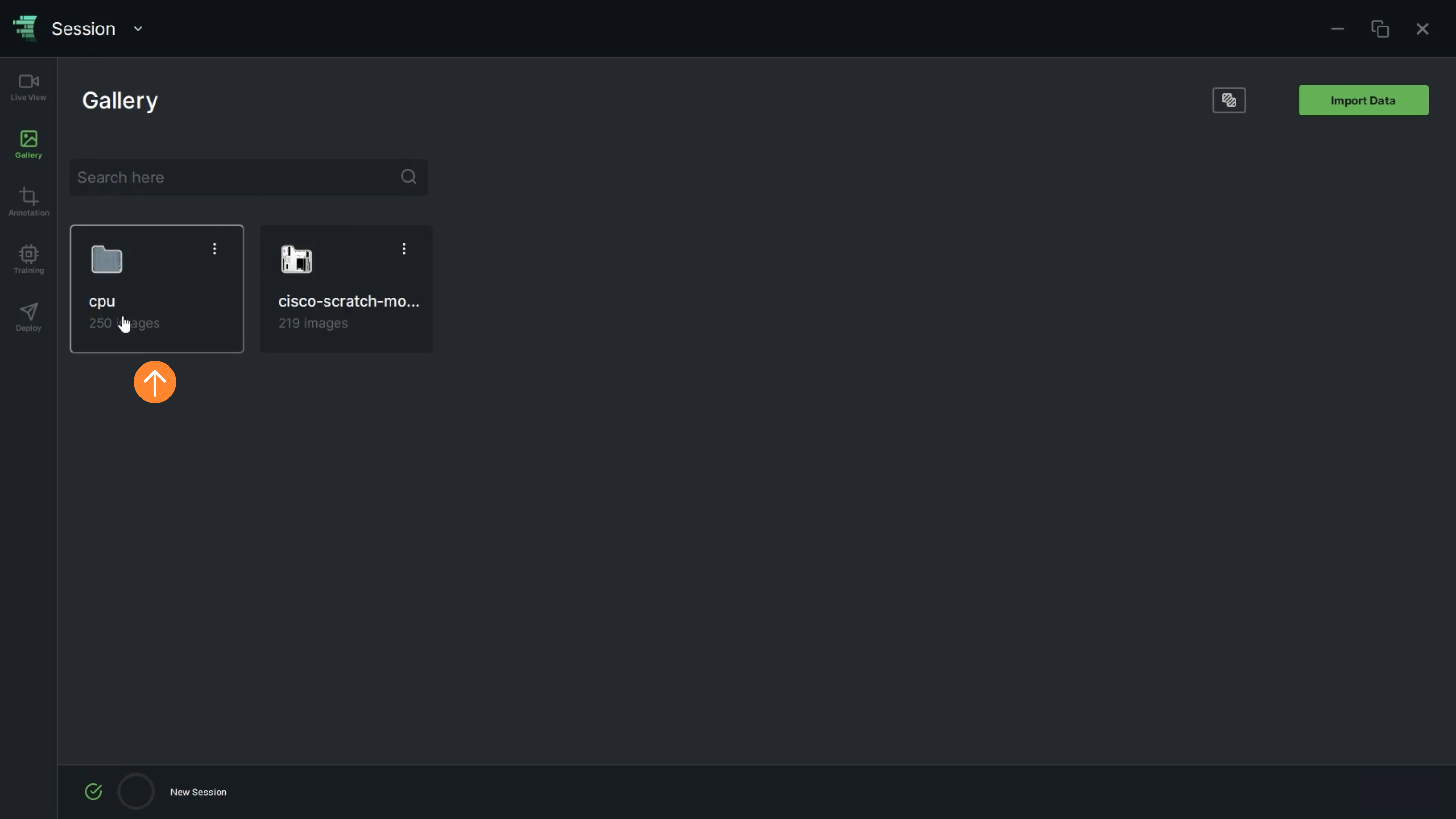

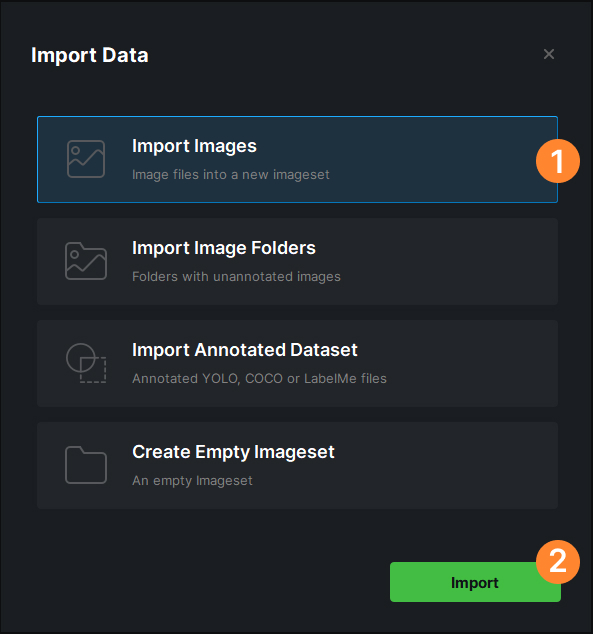

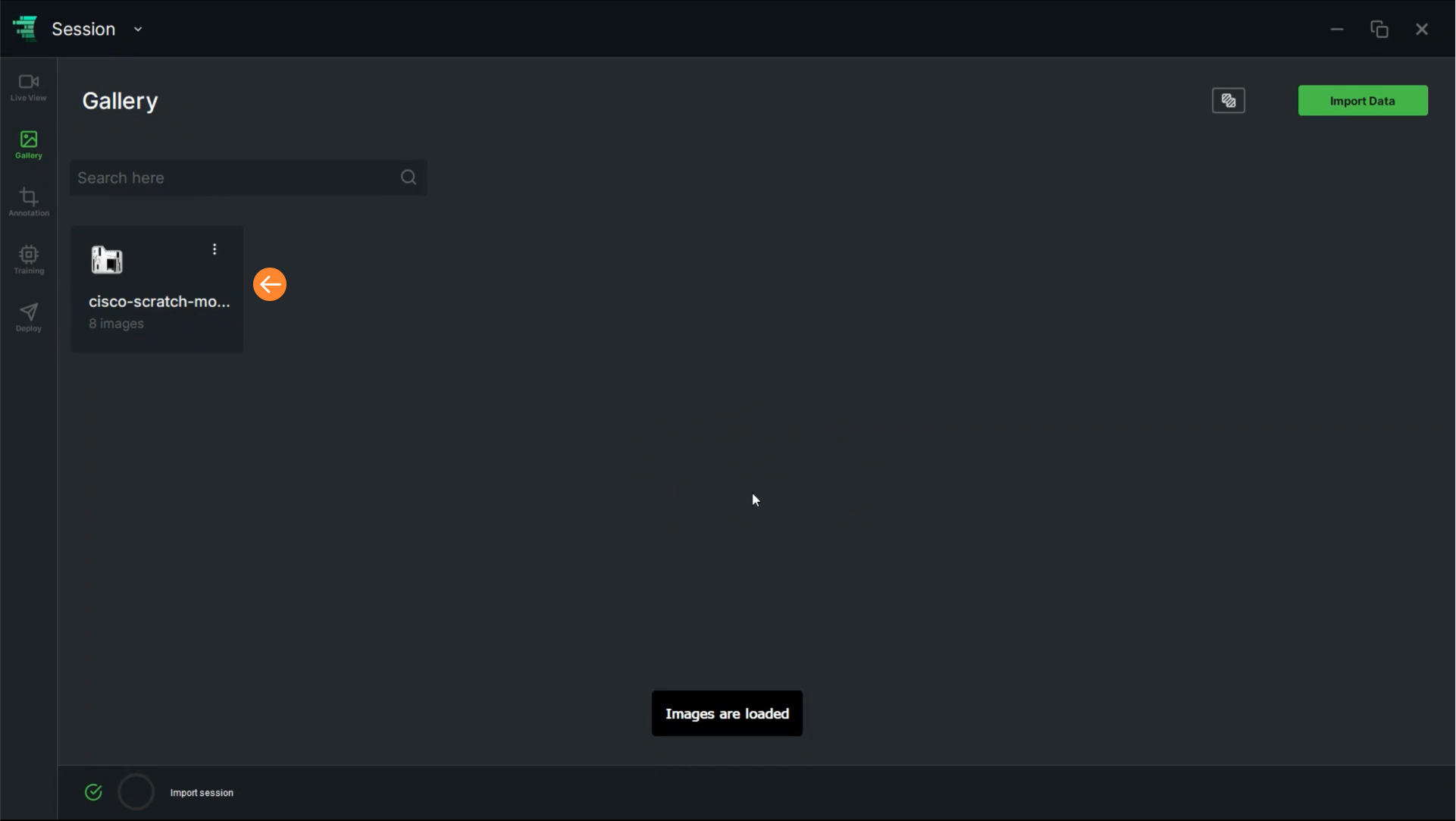

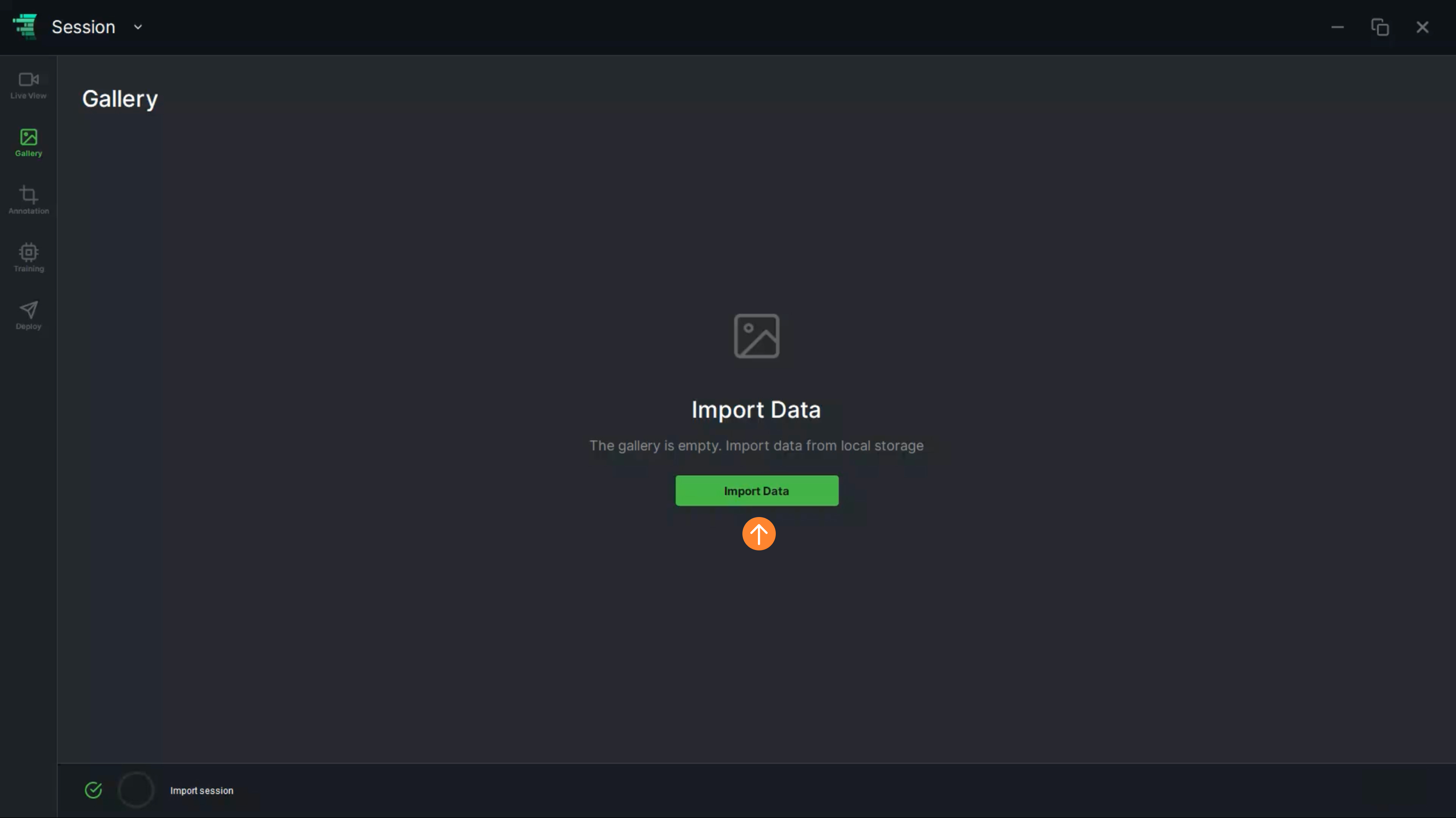

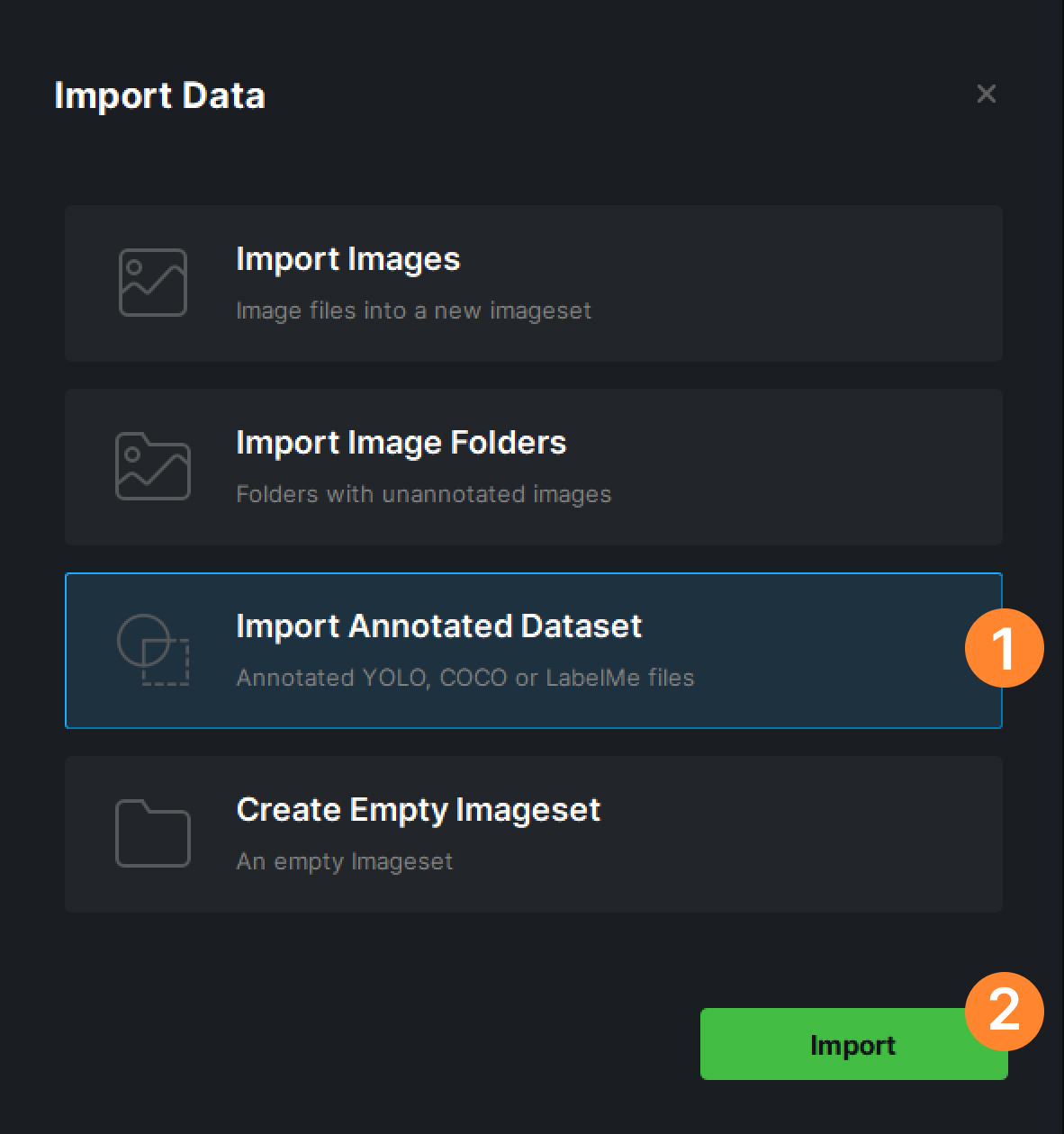

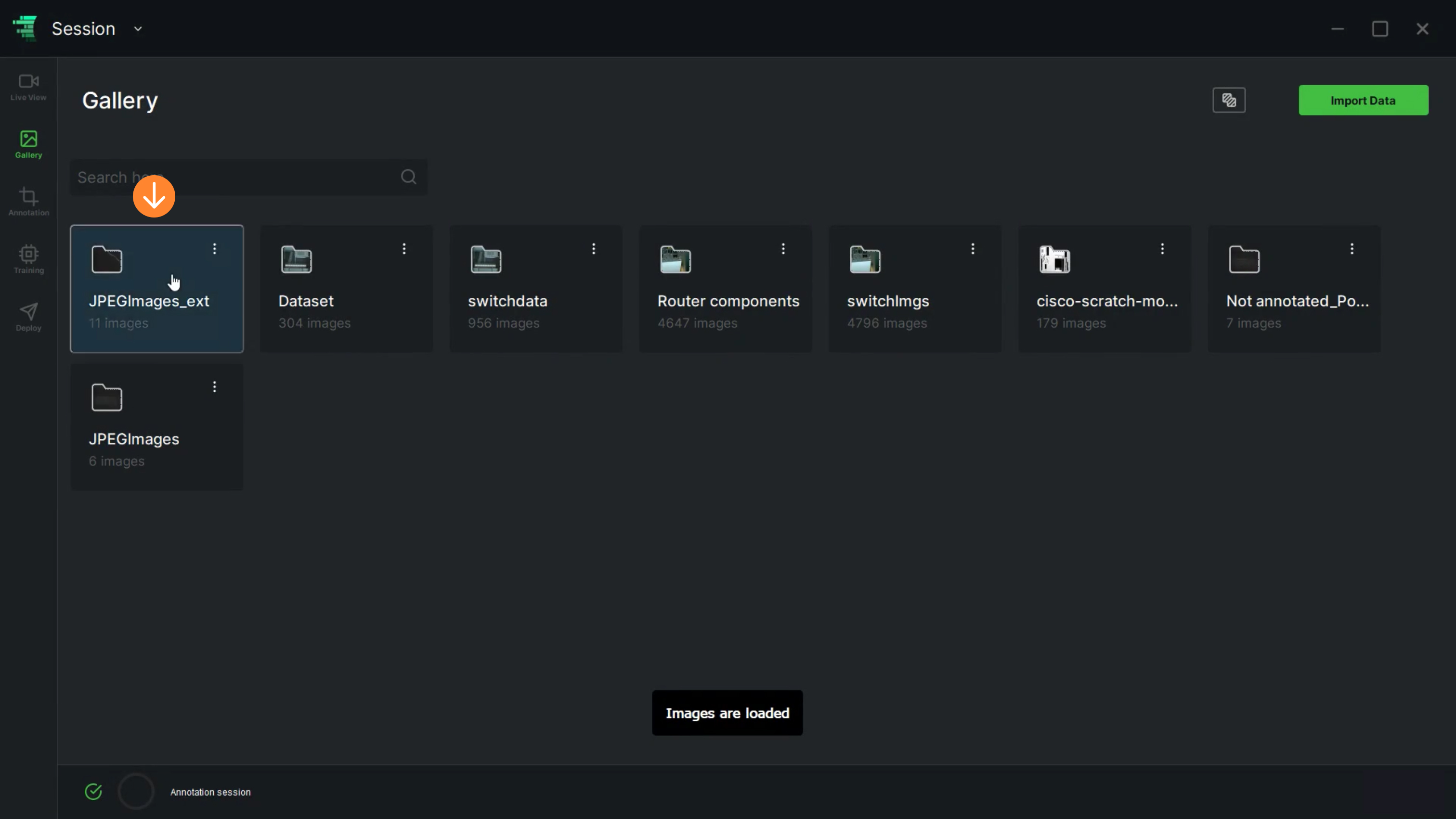

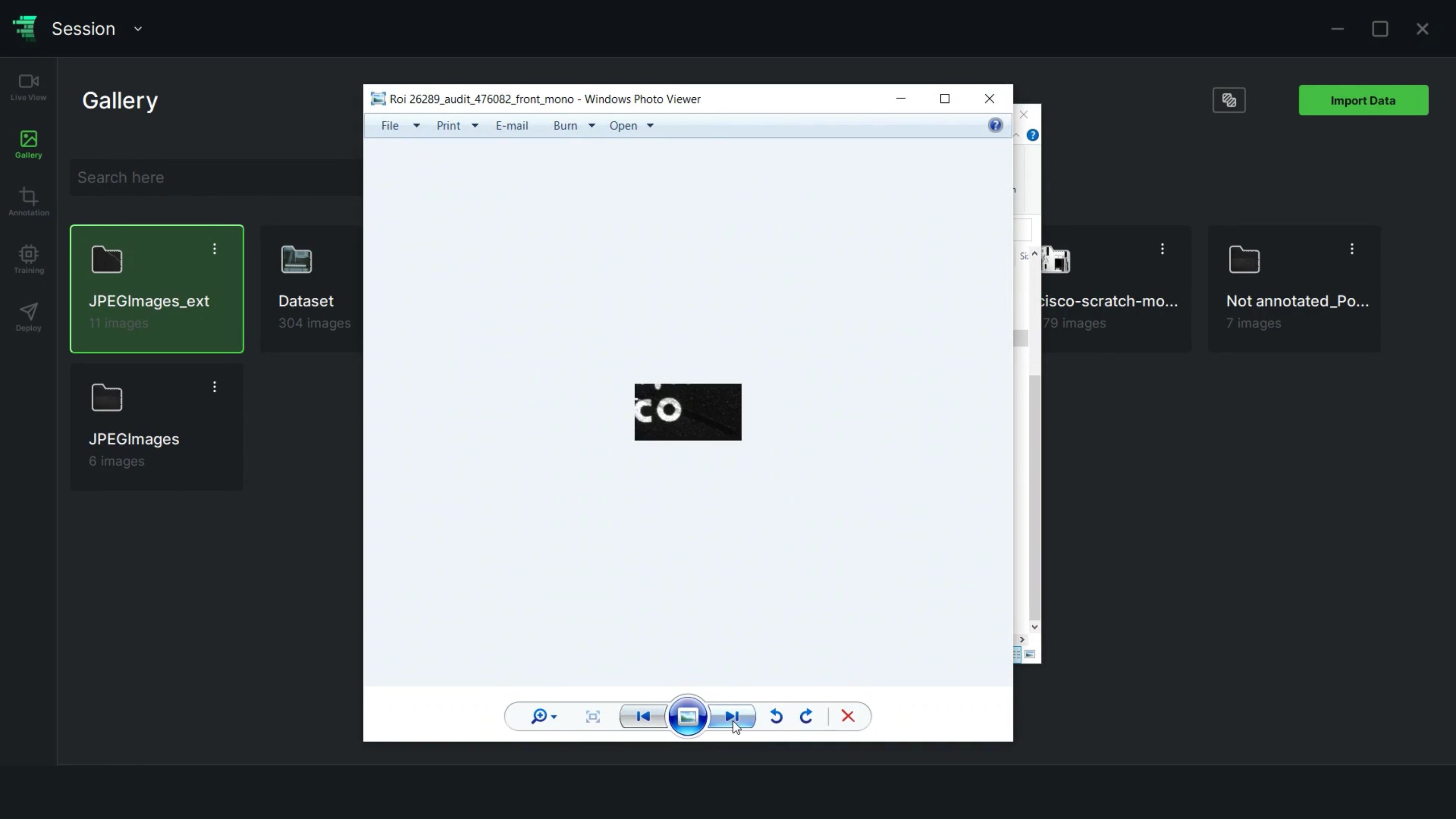

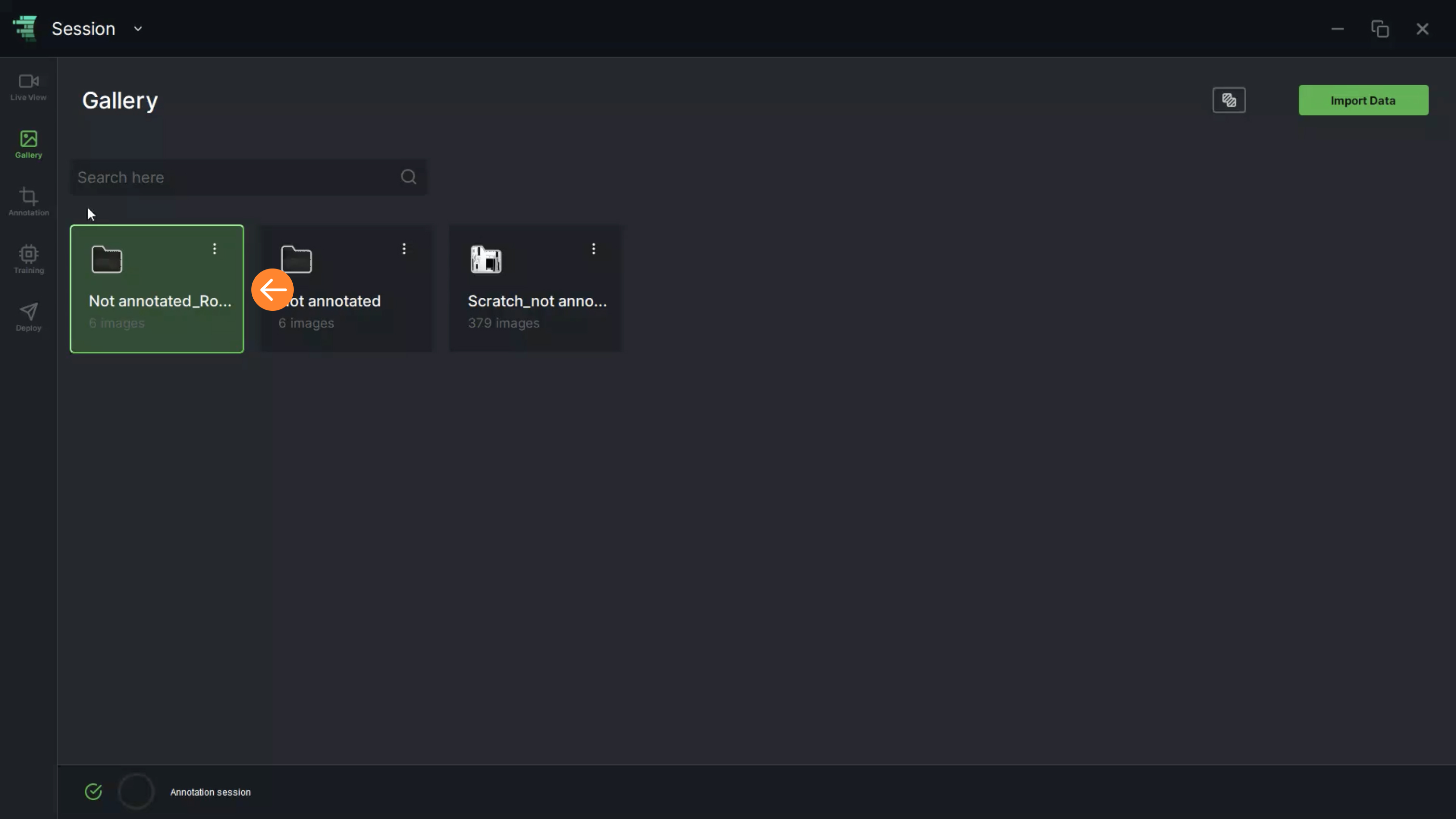

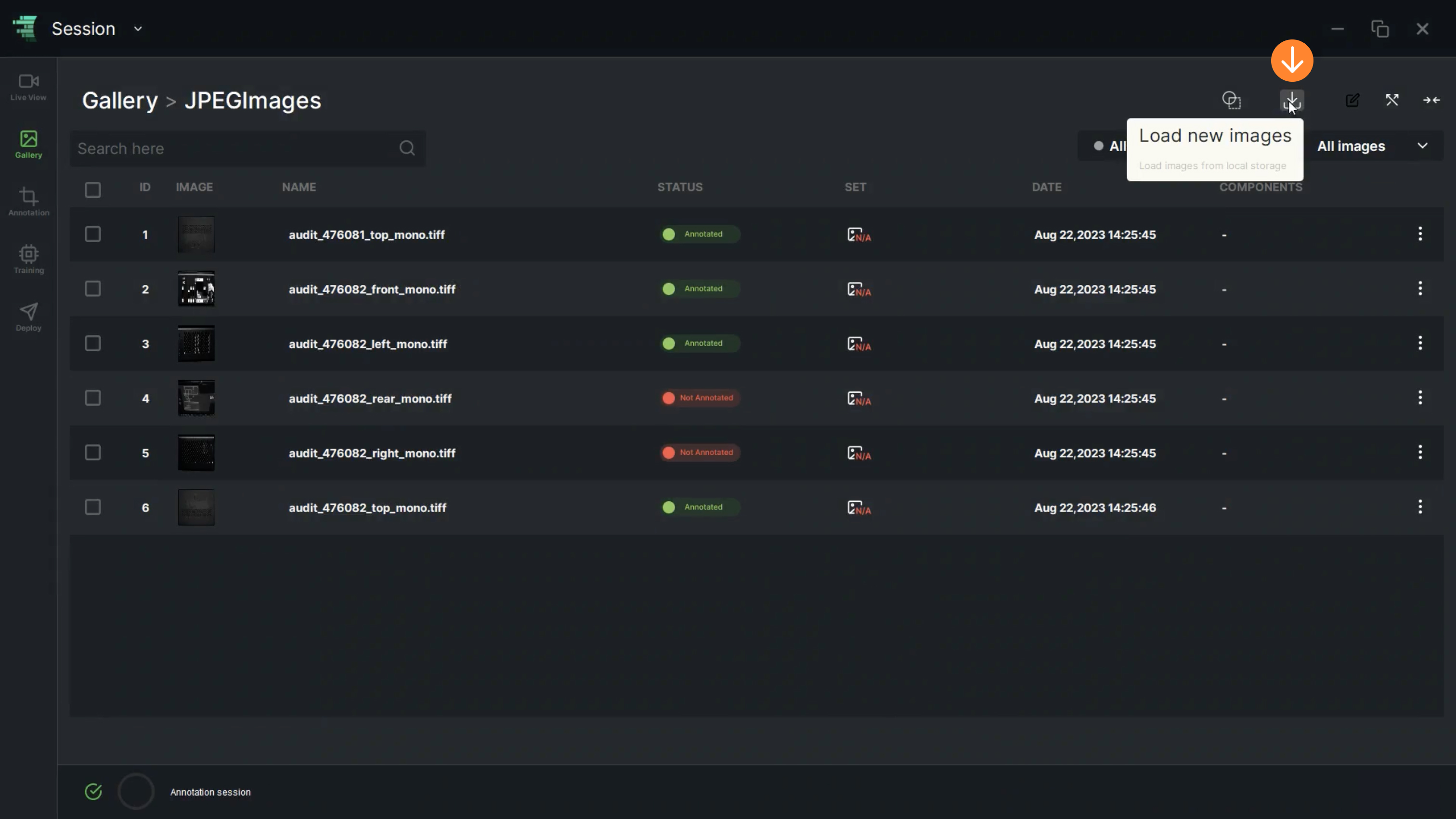

3. “Load New Images” allows the user to upload images from the local machine

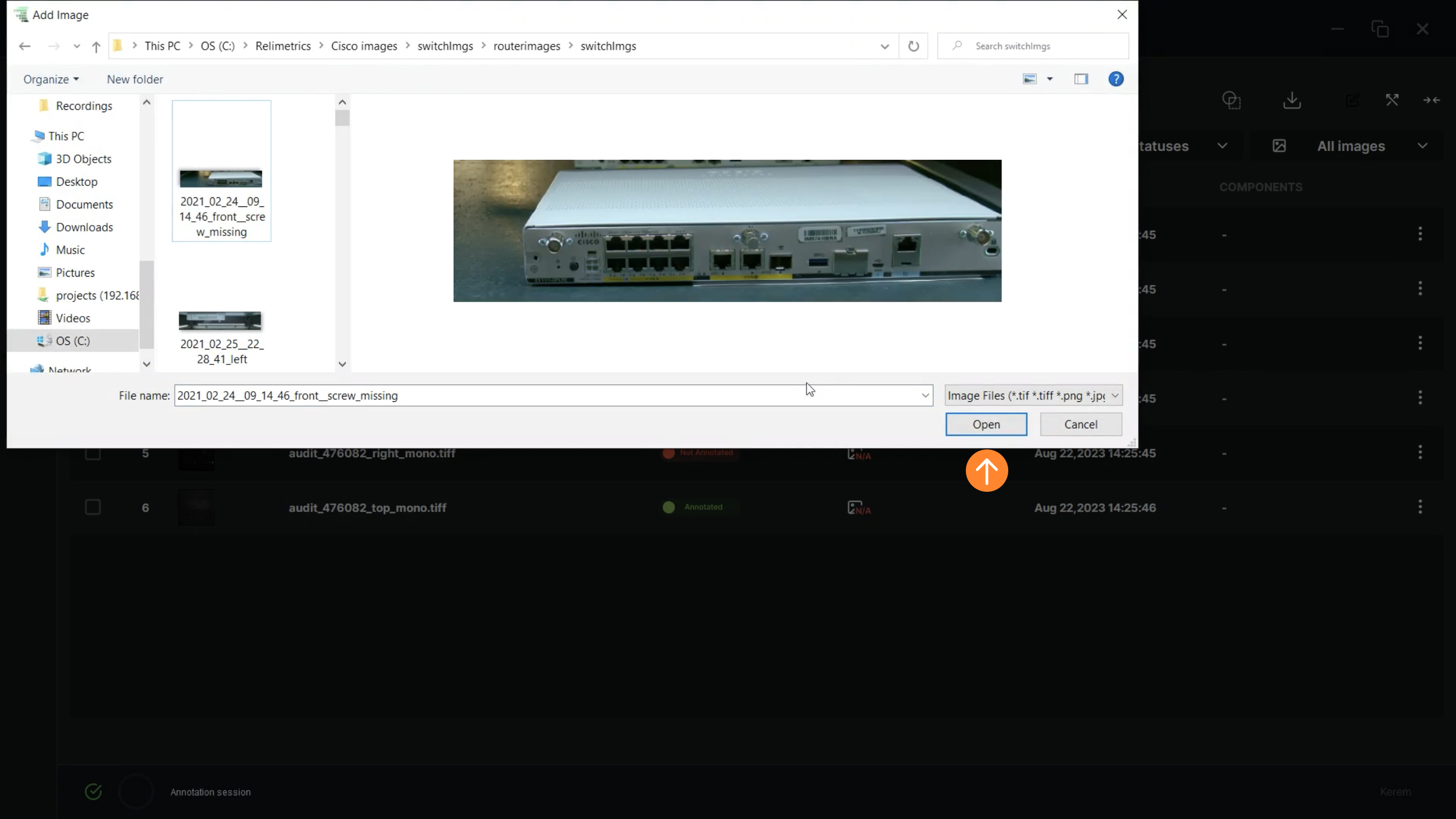

4. Select an image from the directory, then click “Open”

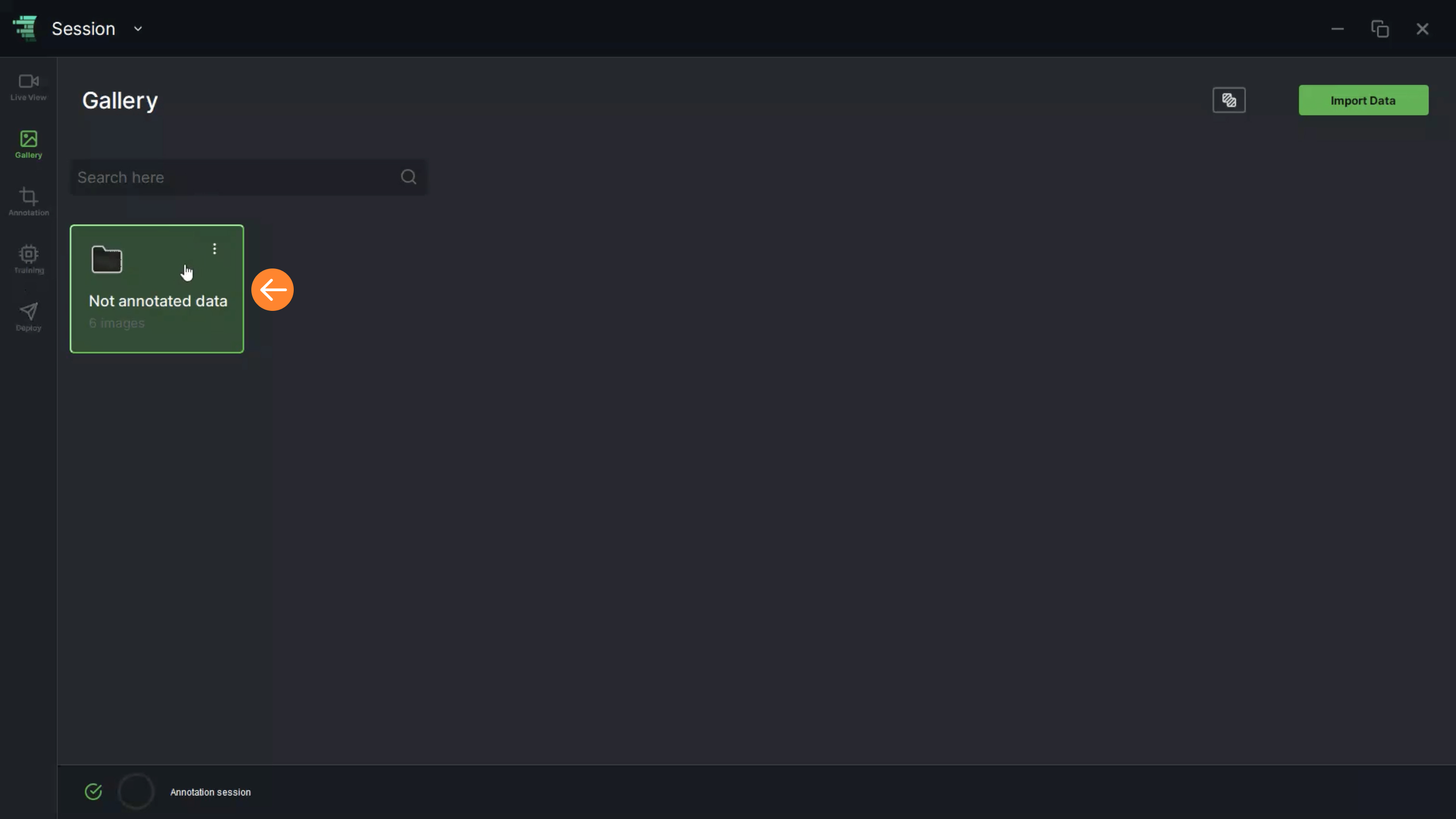

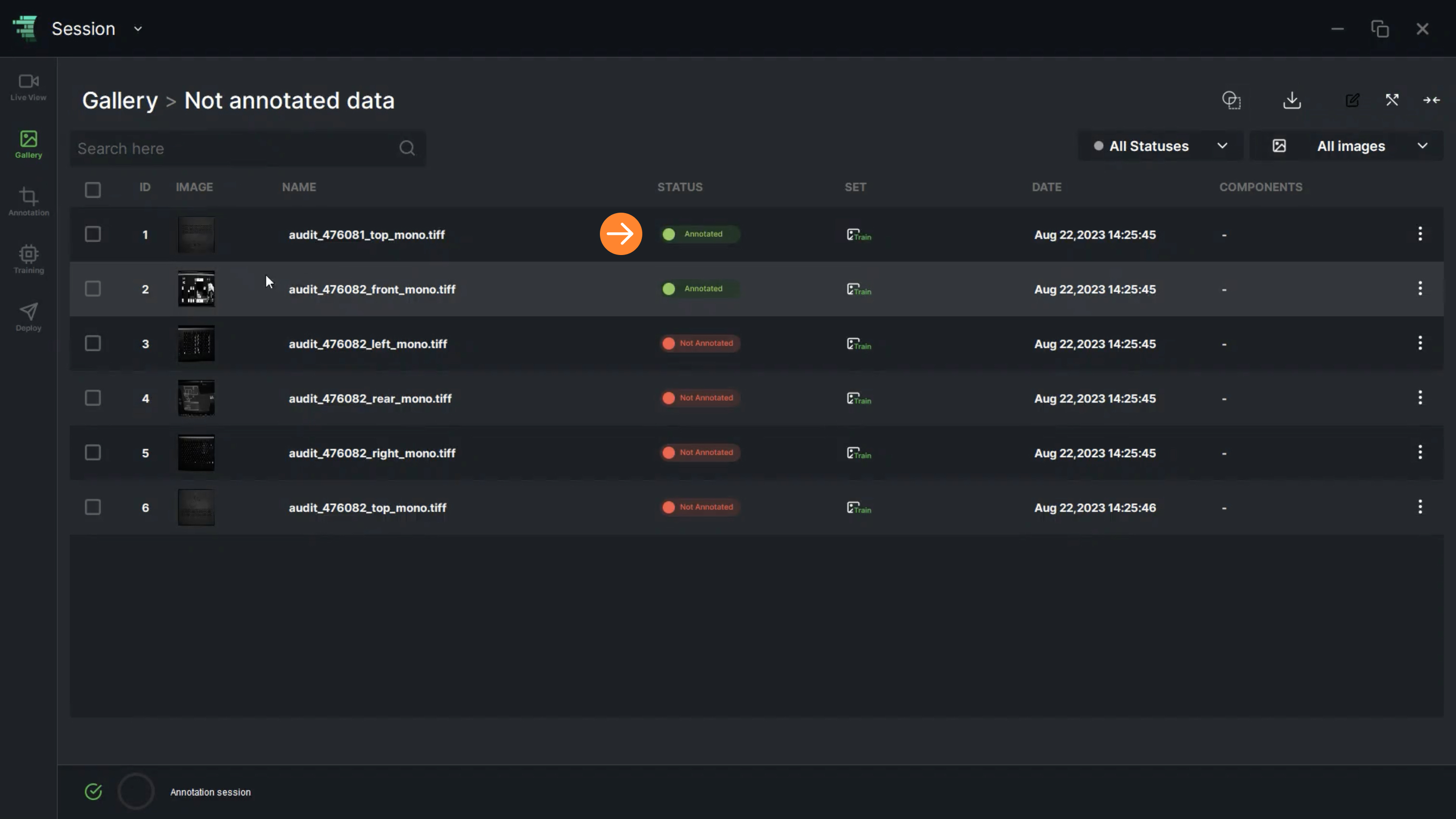

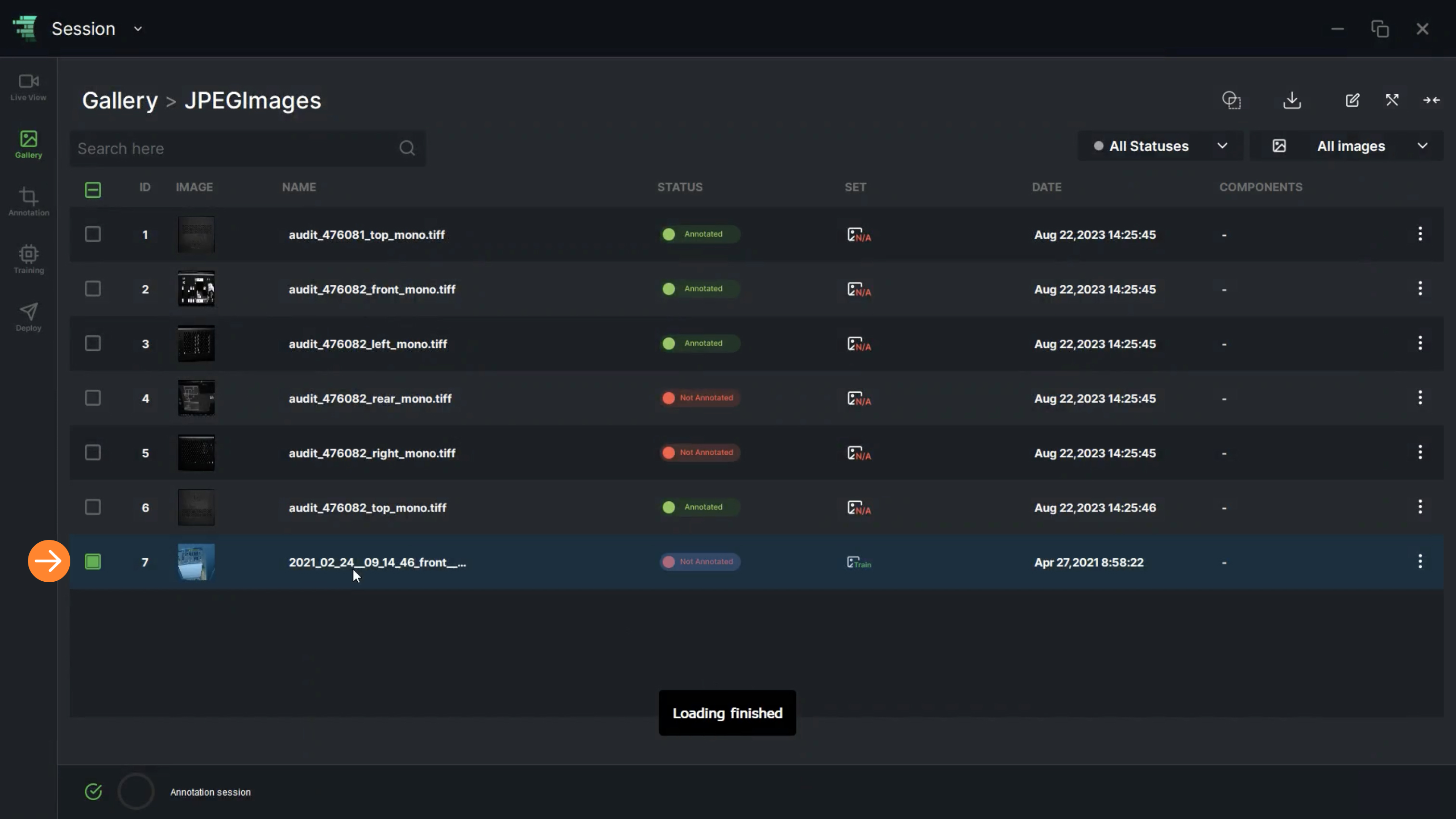

5. The imported image will be shown in the image list

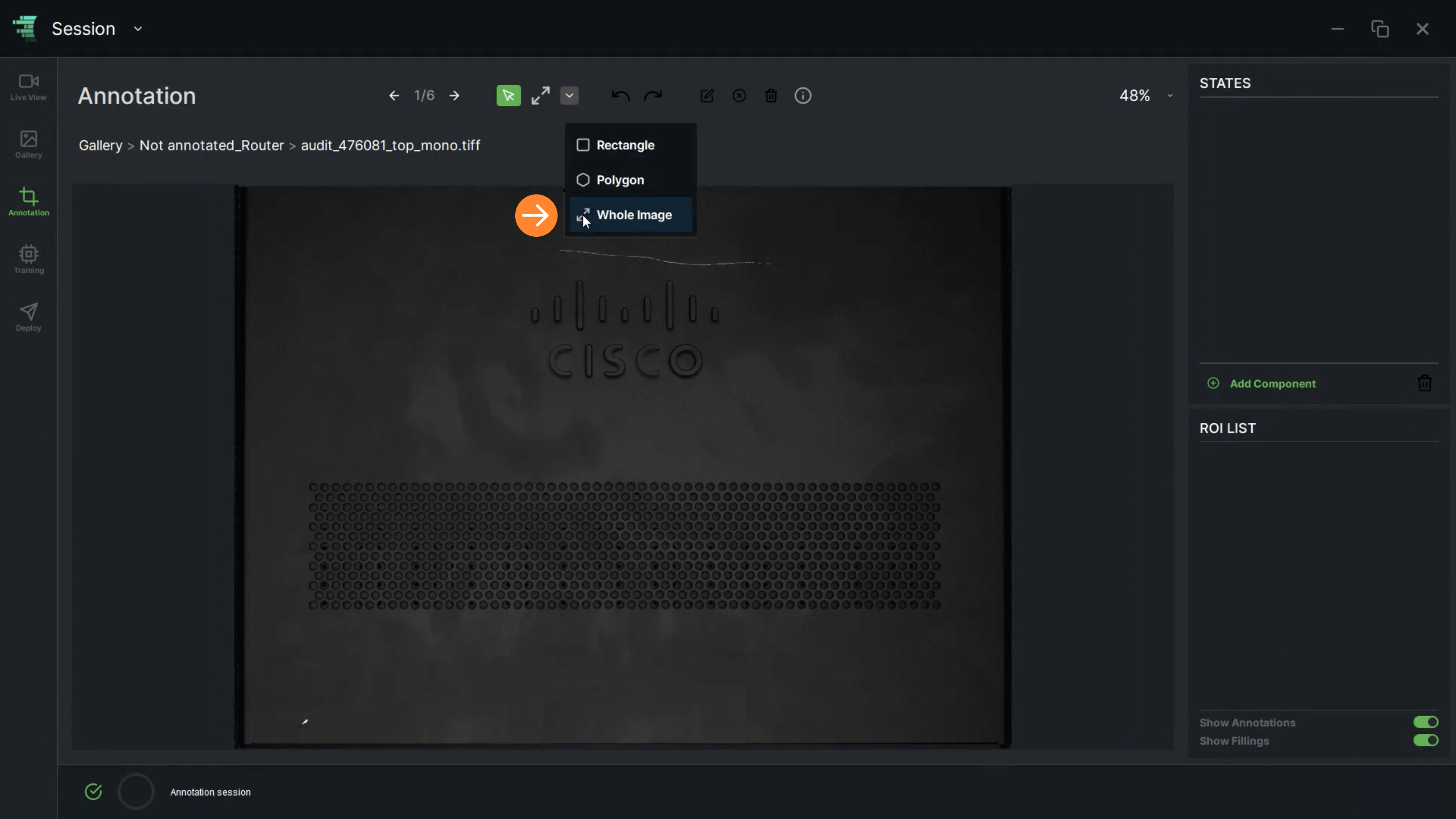

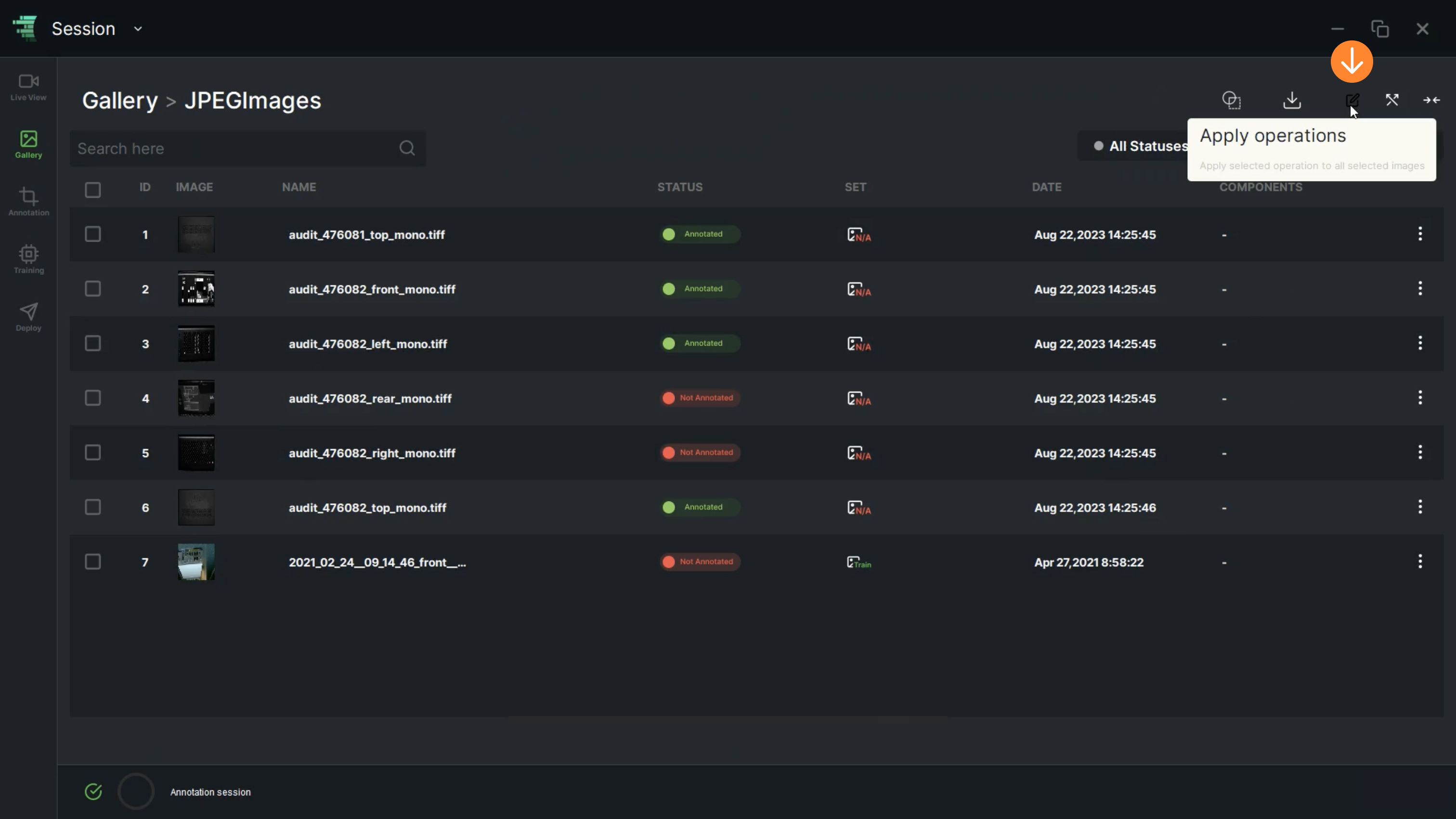

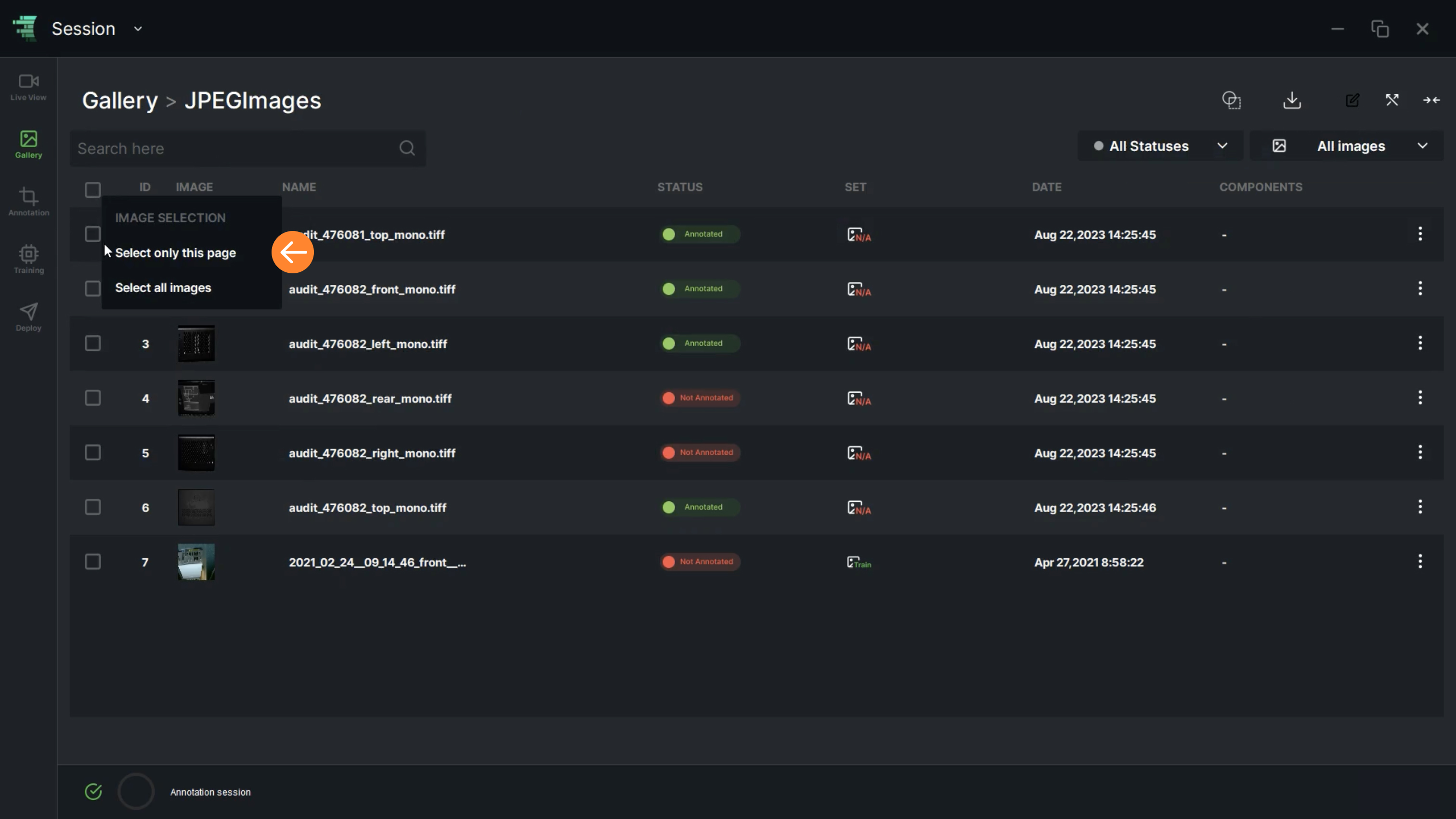

6. “Apply Operations” allows the user to perform multiple operations. To enable this function, first select images with the “Only this page” or “Select all images” option

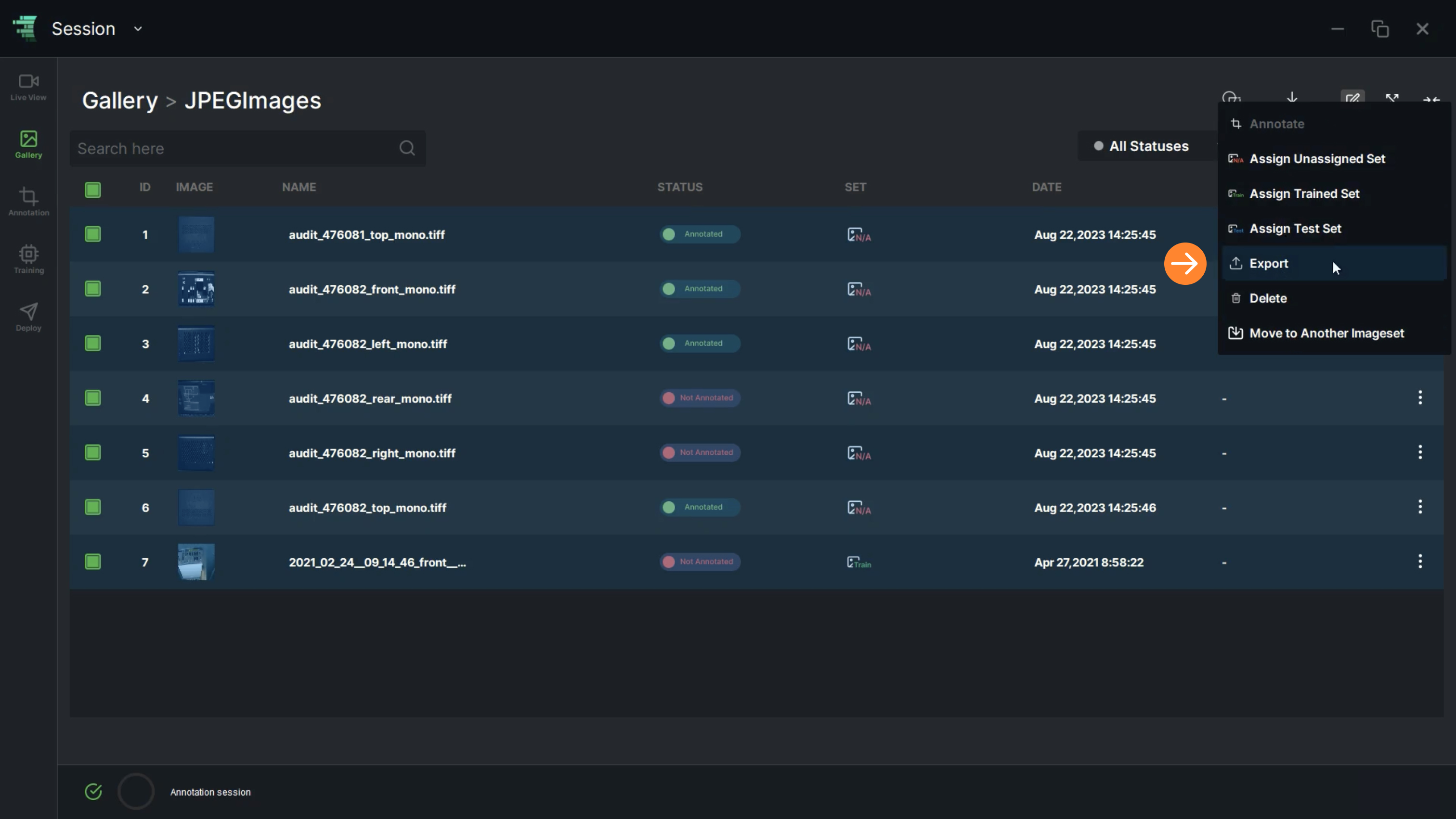

7. Once the “Apply Operations” dialog box is displayed, the user can:

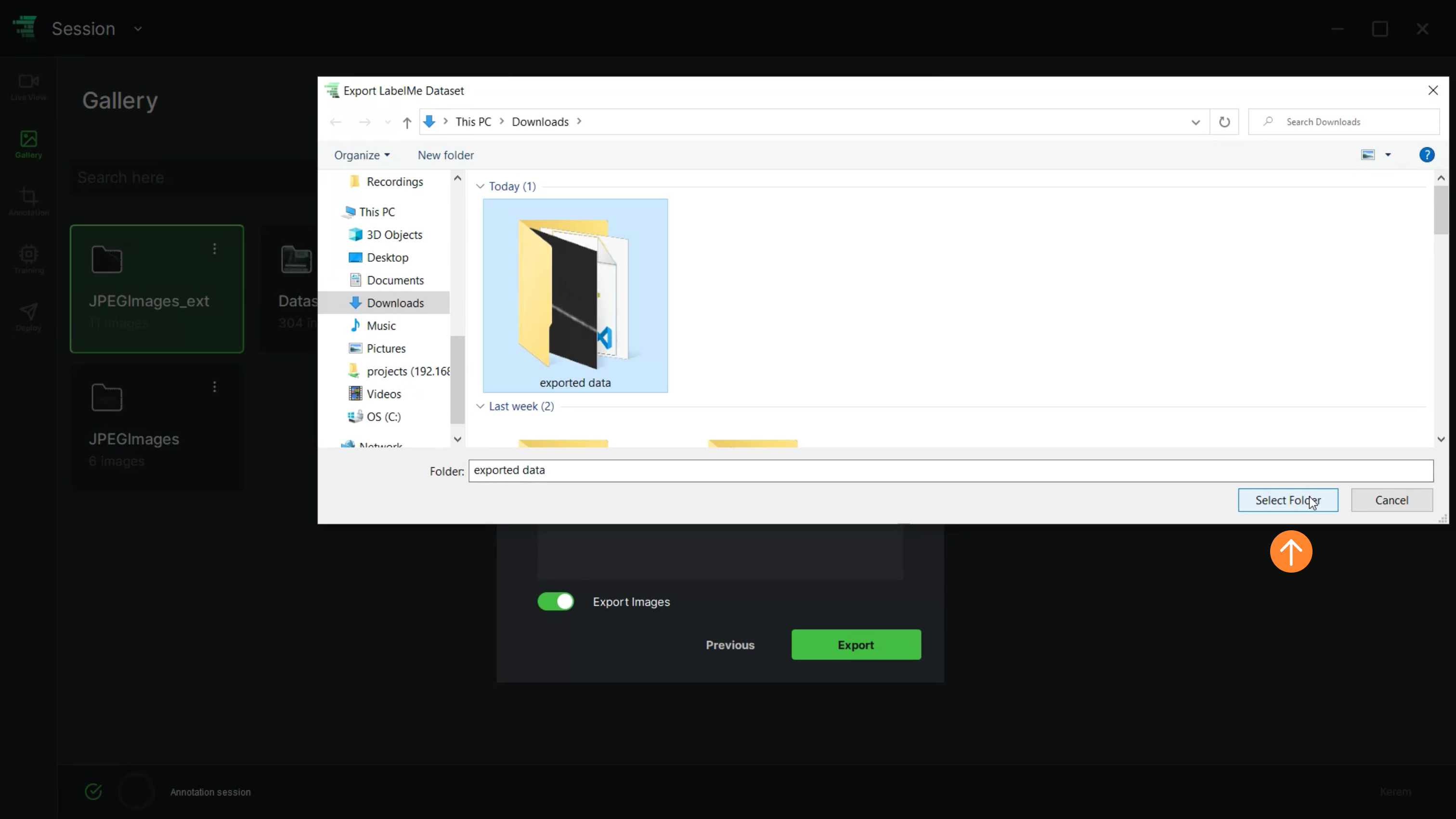

- Export or Delete images by clicking the corresponding option

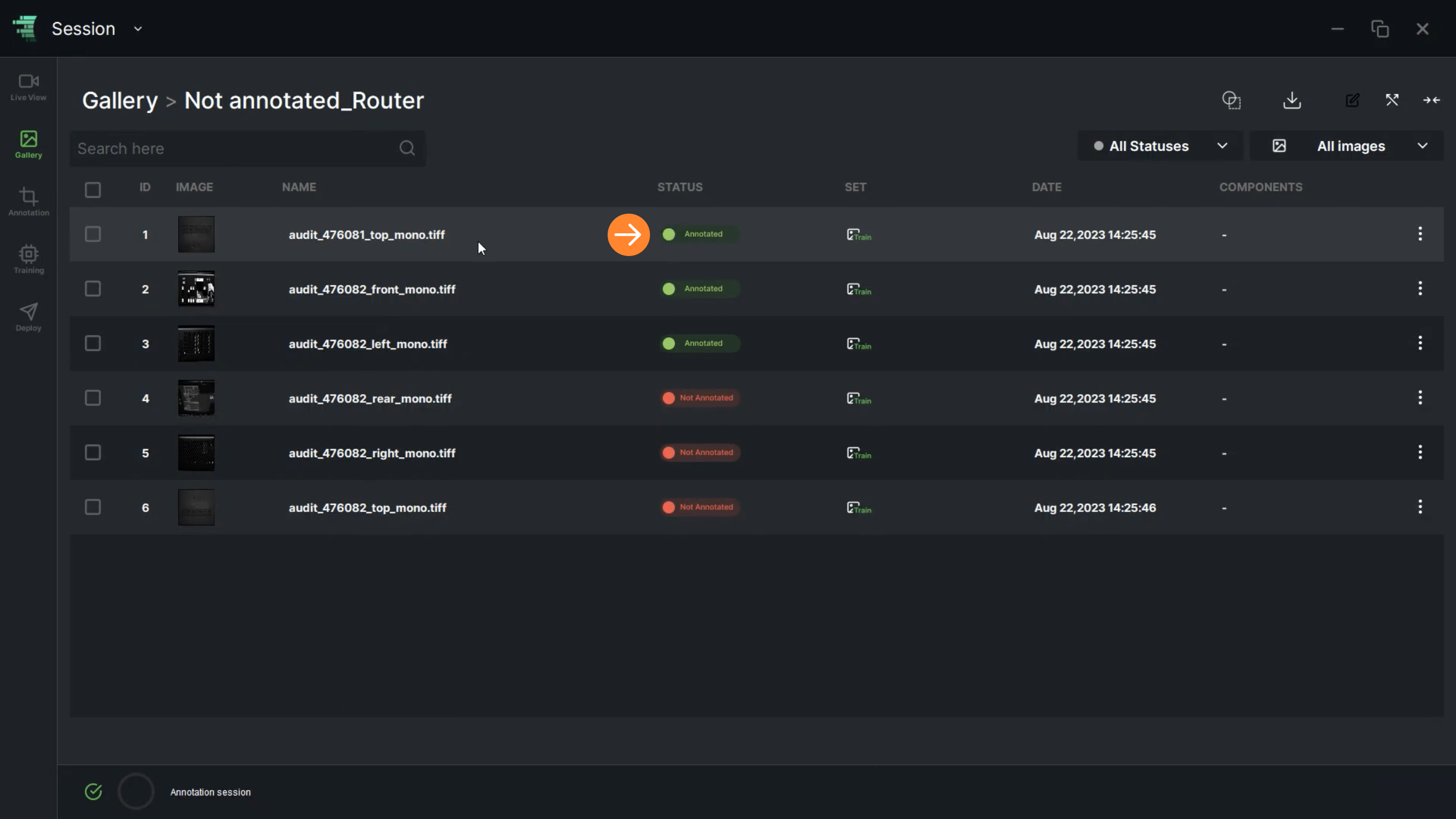

- Move images to another dataset by selecting “Move to Another Imageset”

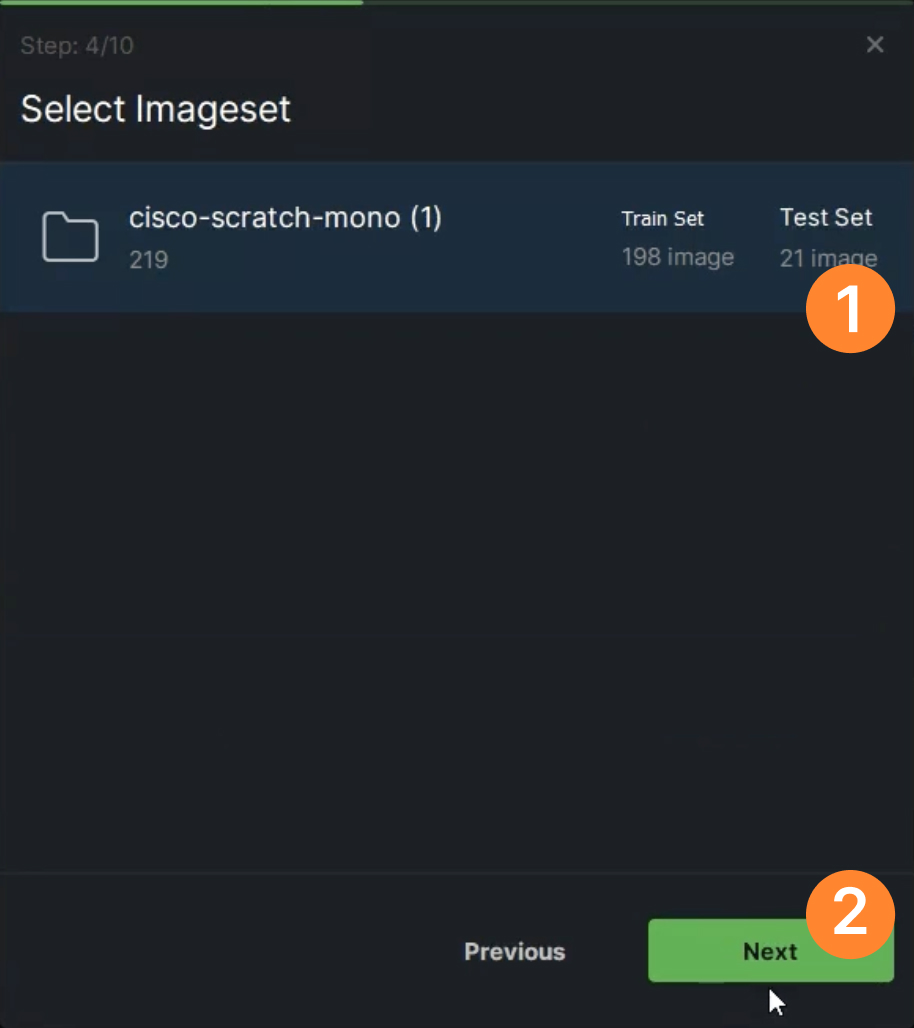

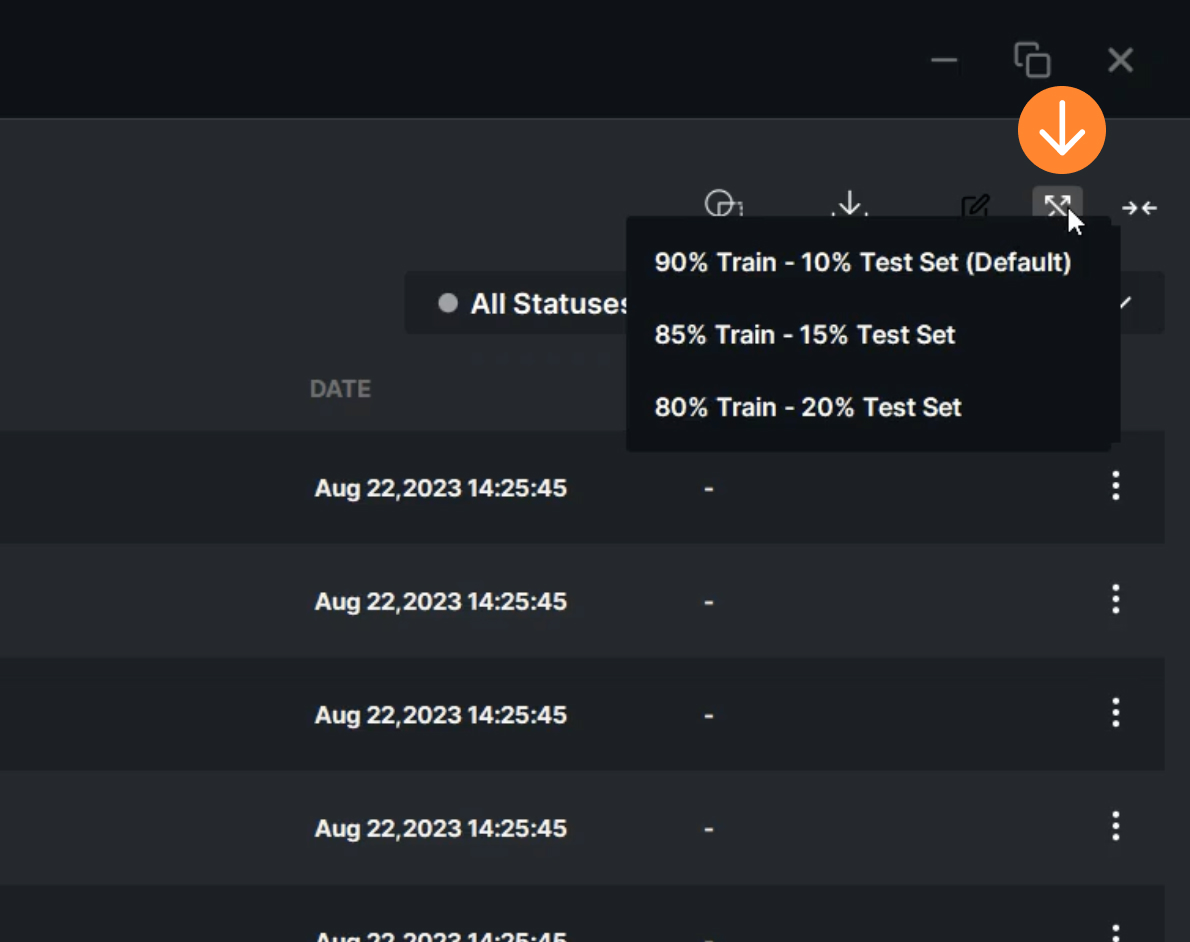

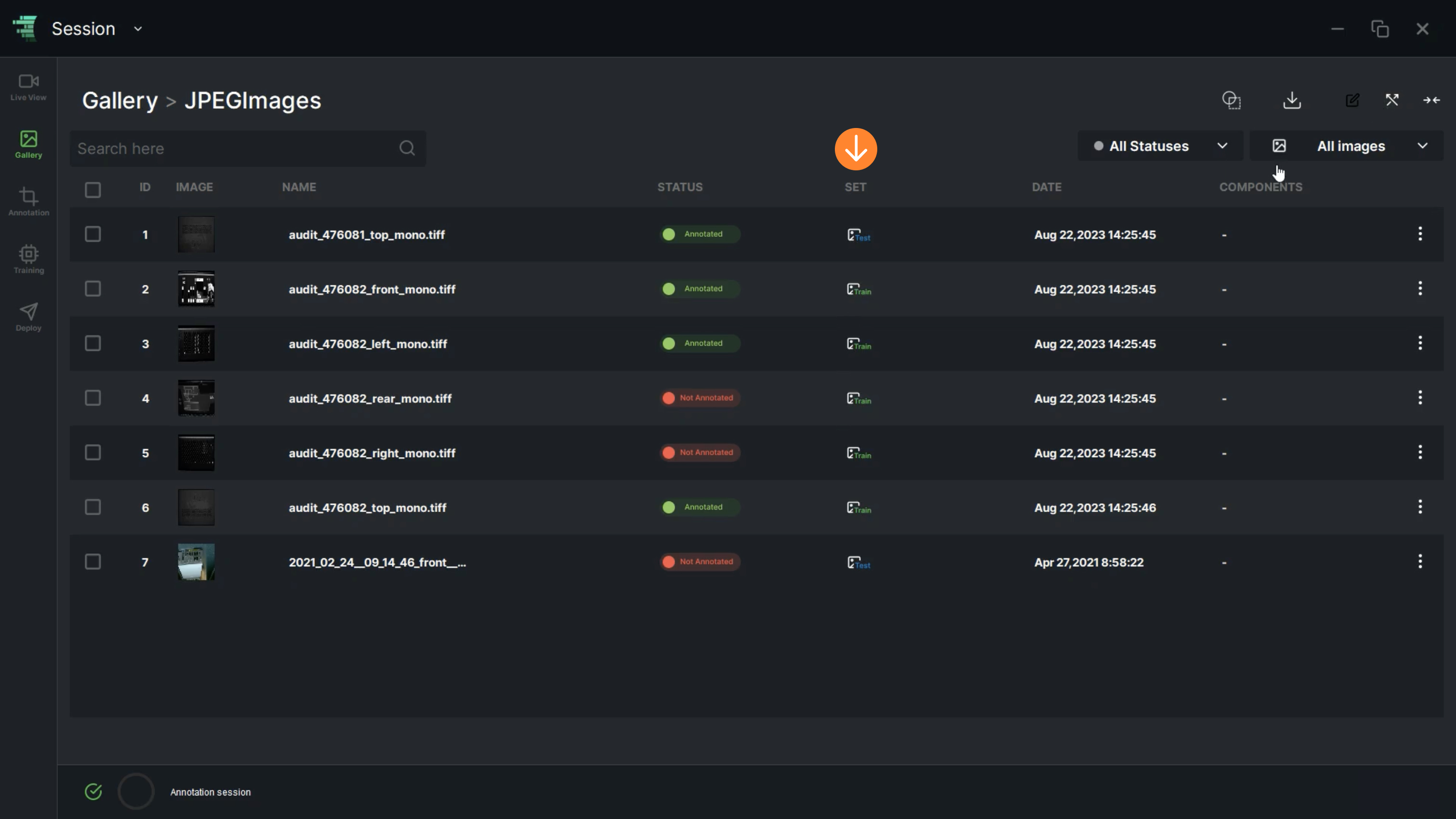

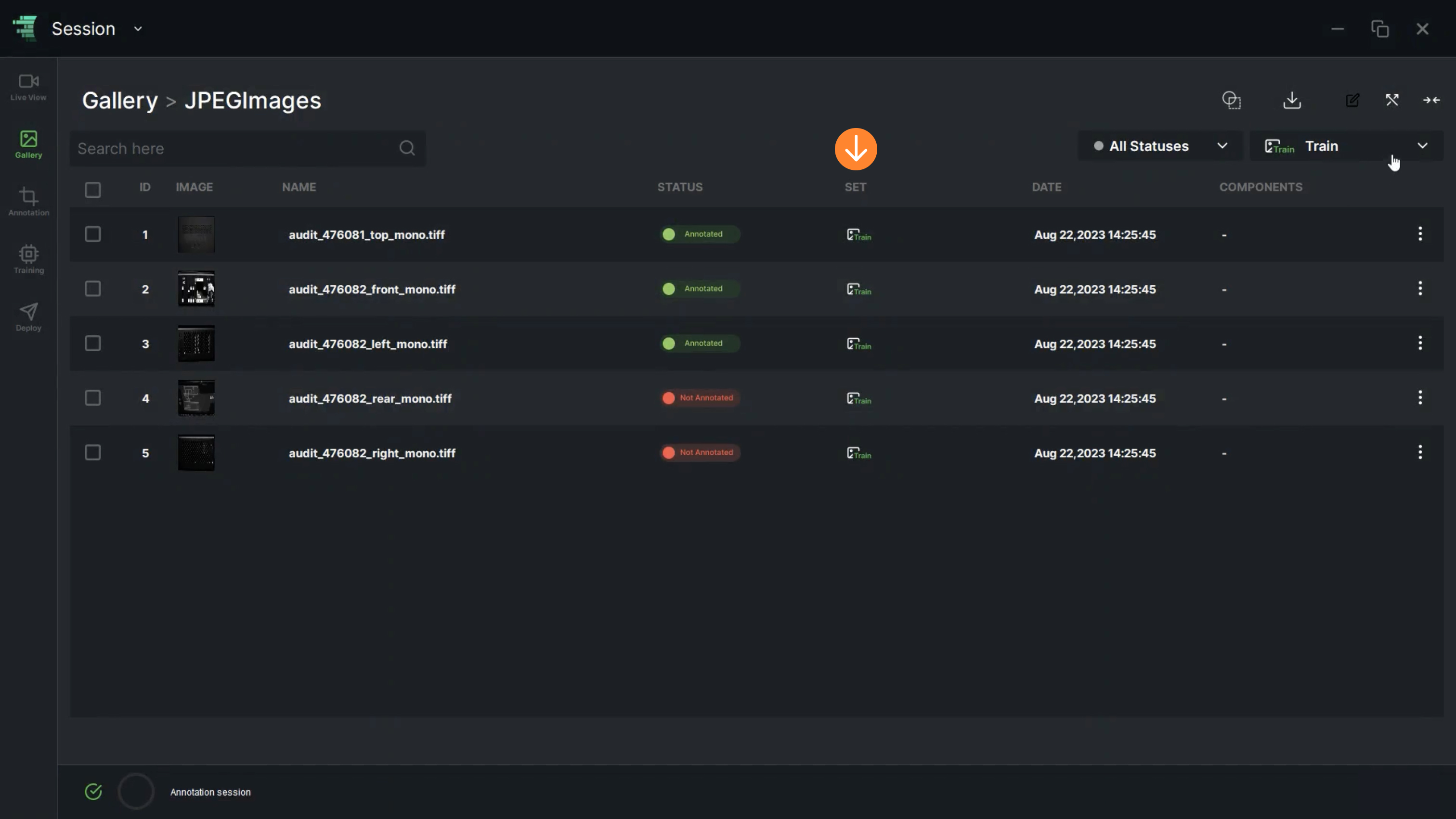

8. “Split Images” allows the user to split images into Train/Test Sets before training, using one of three options

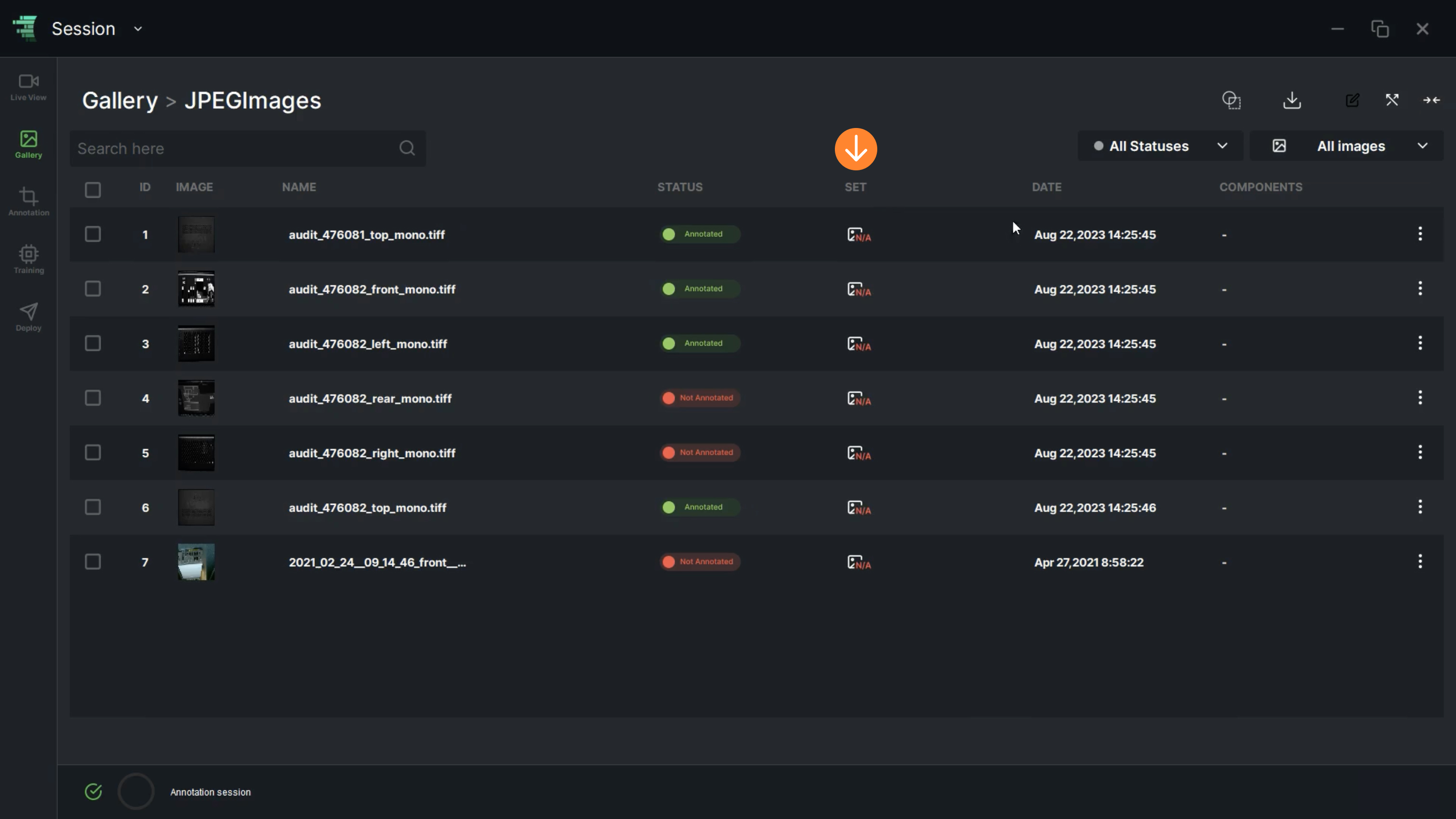

9. Once the dataset is split, the image status changes from “Unassigned” to “Train/Test Sets”

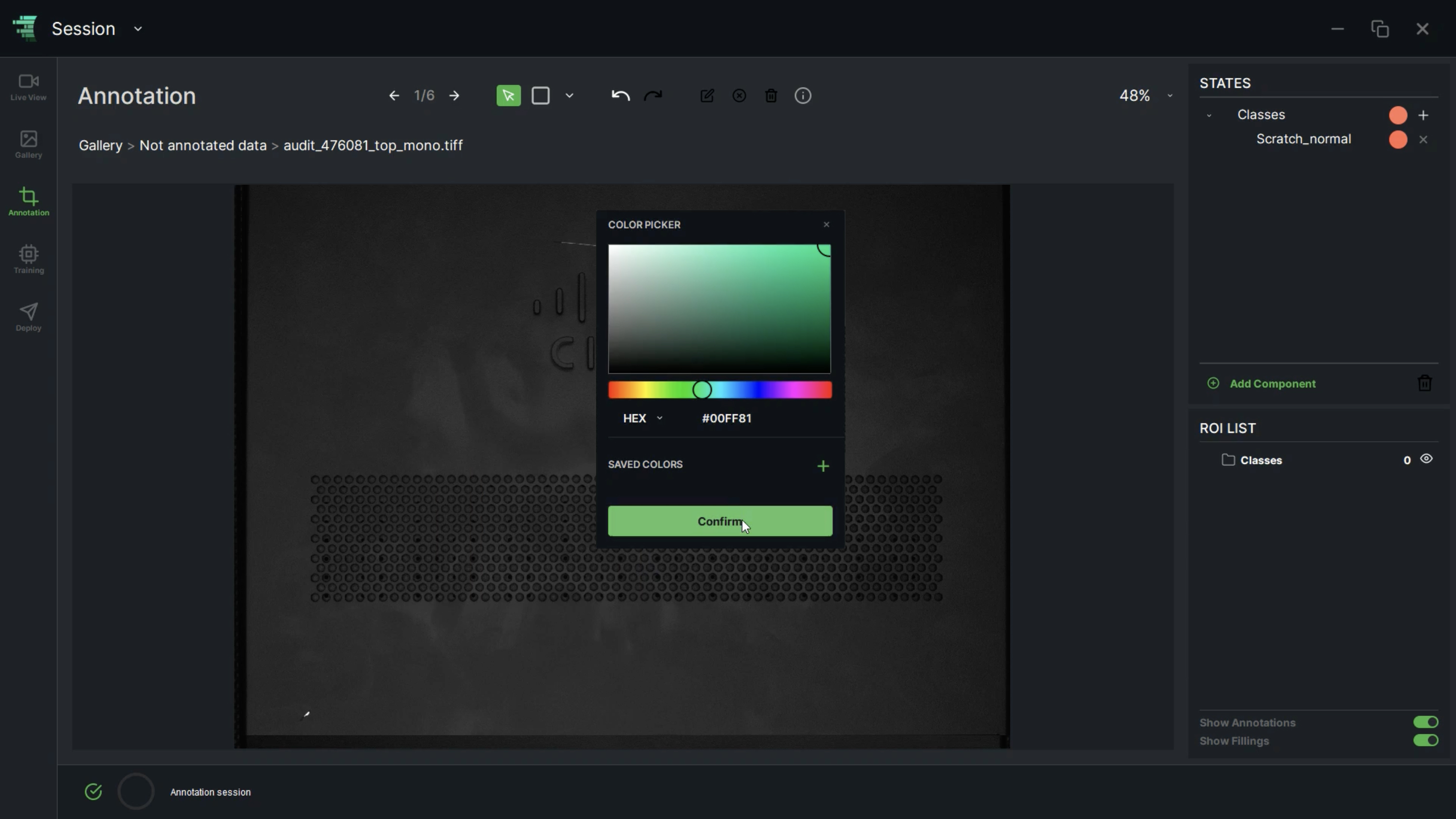

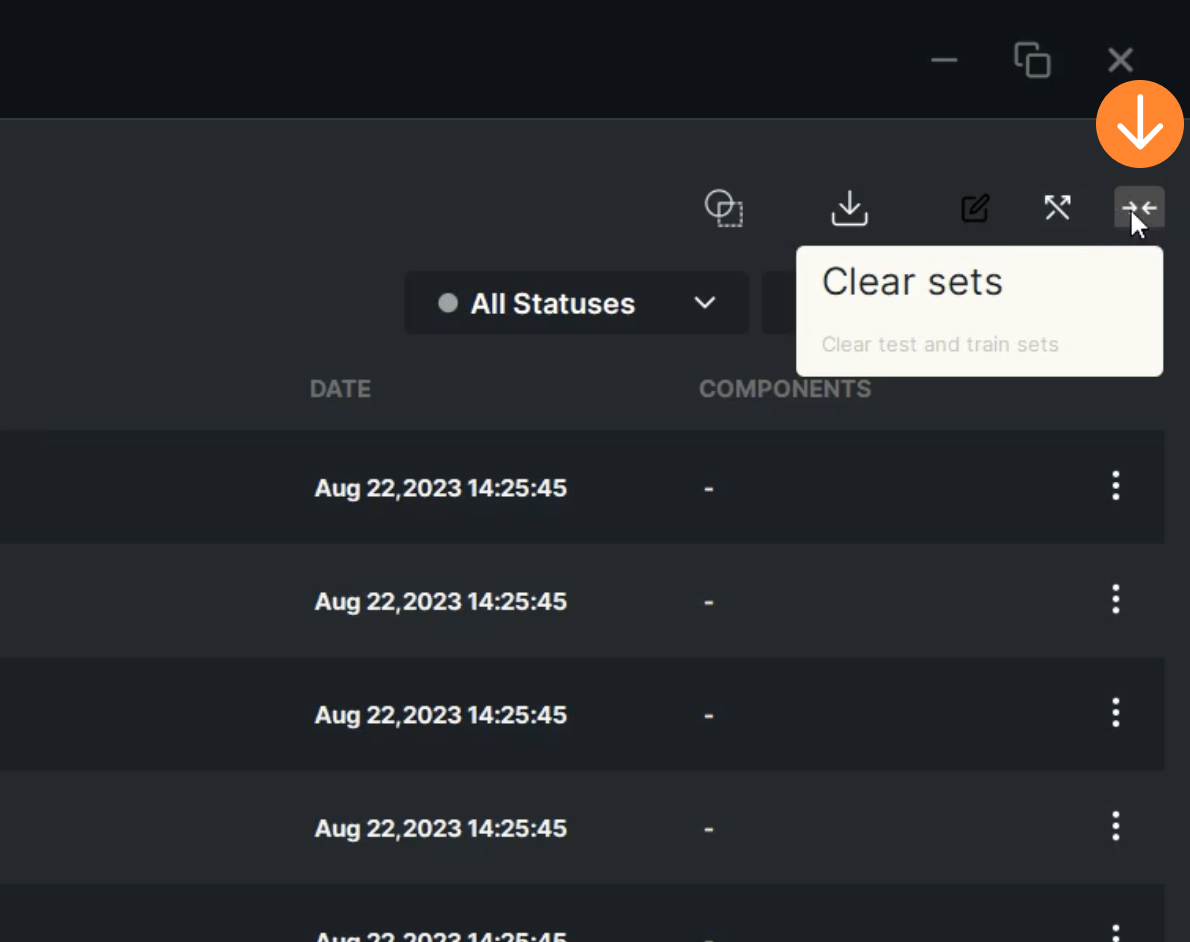

10. “Clear Sets” allows the user to clear all current set statuses. The status of the images then changes to “Unassigned Set”

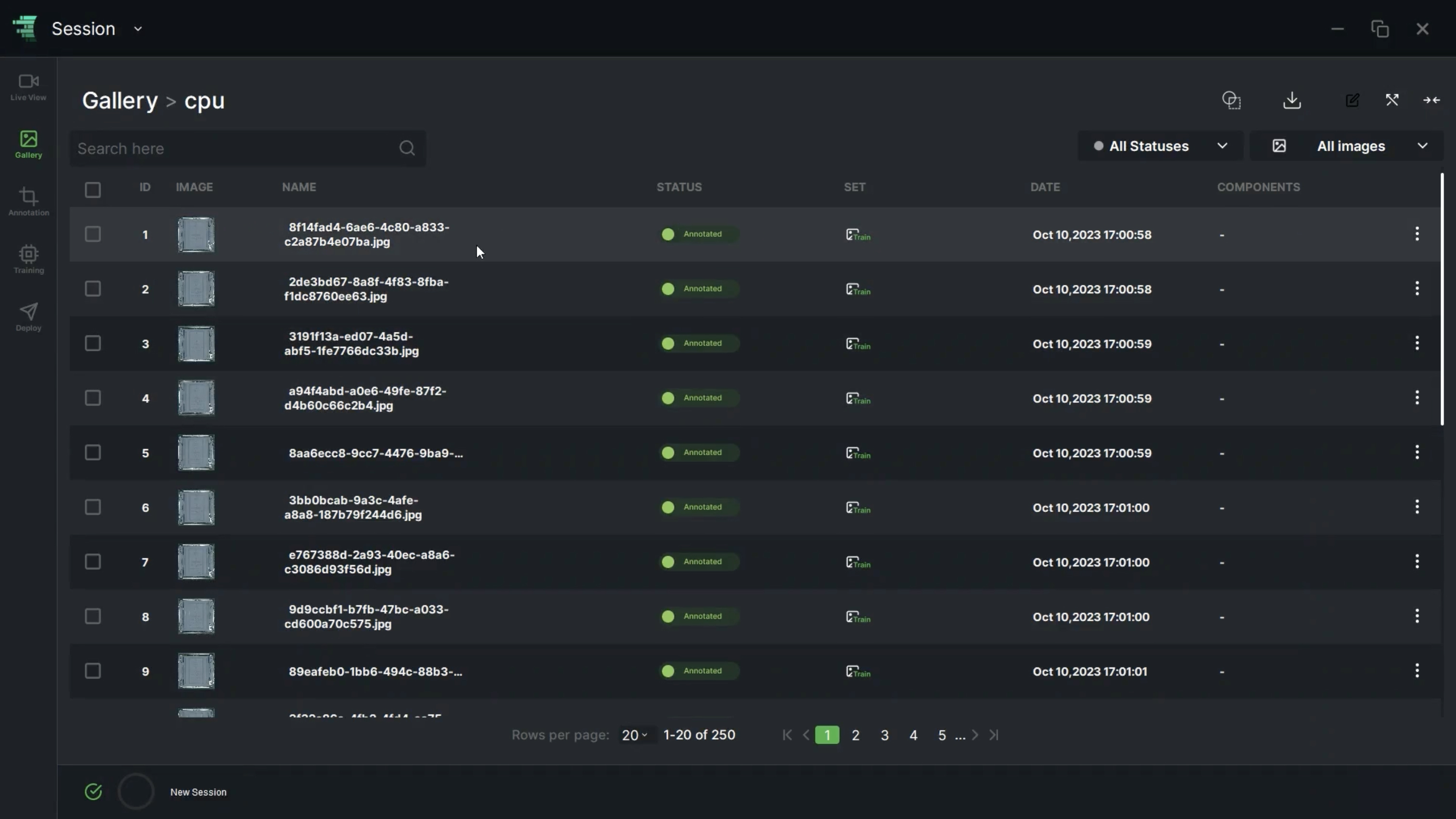

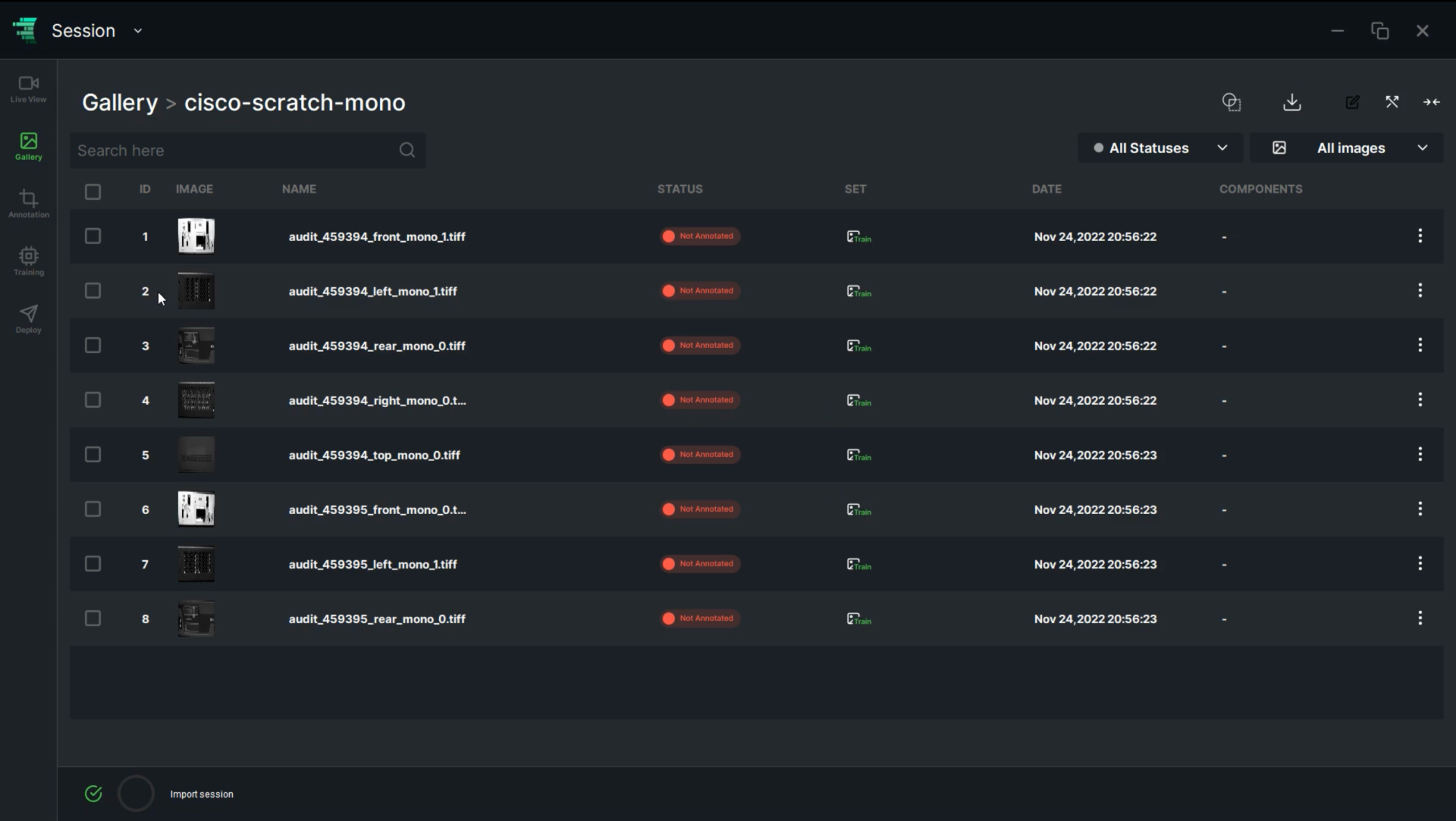

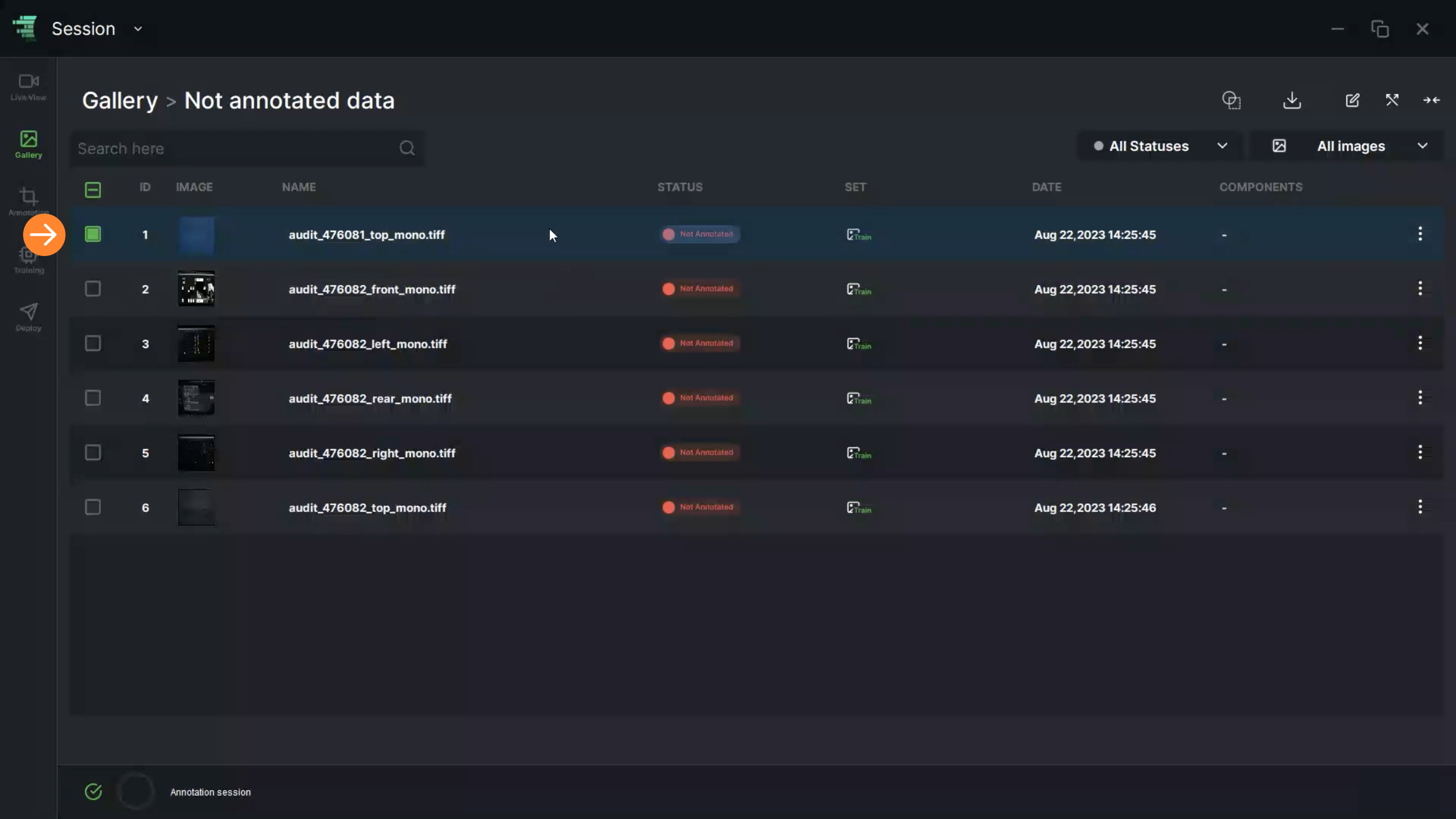

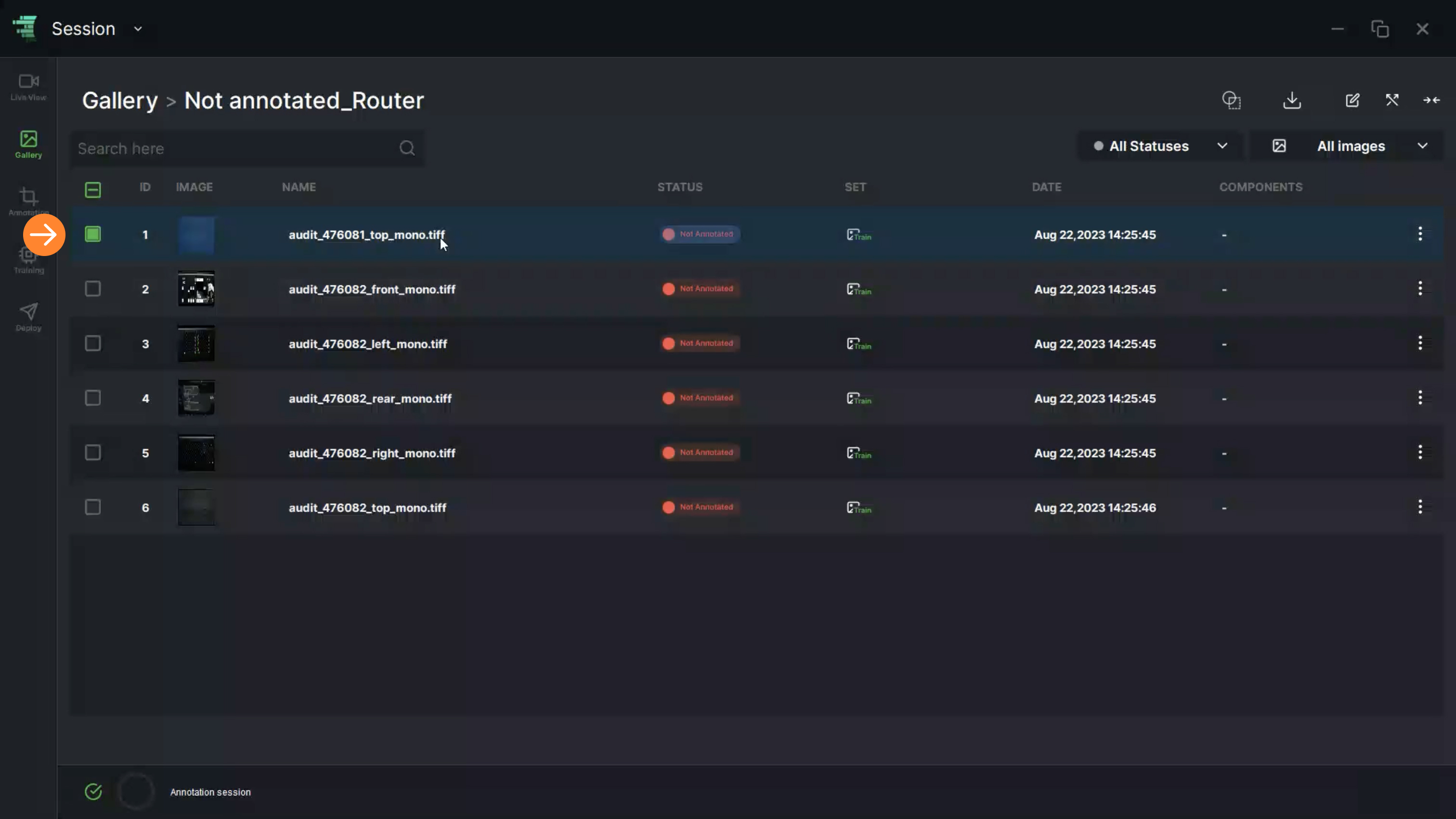

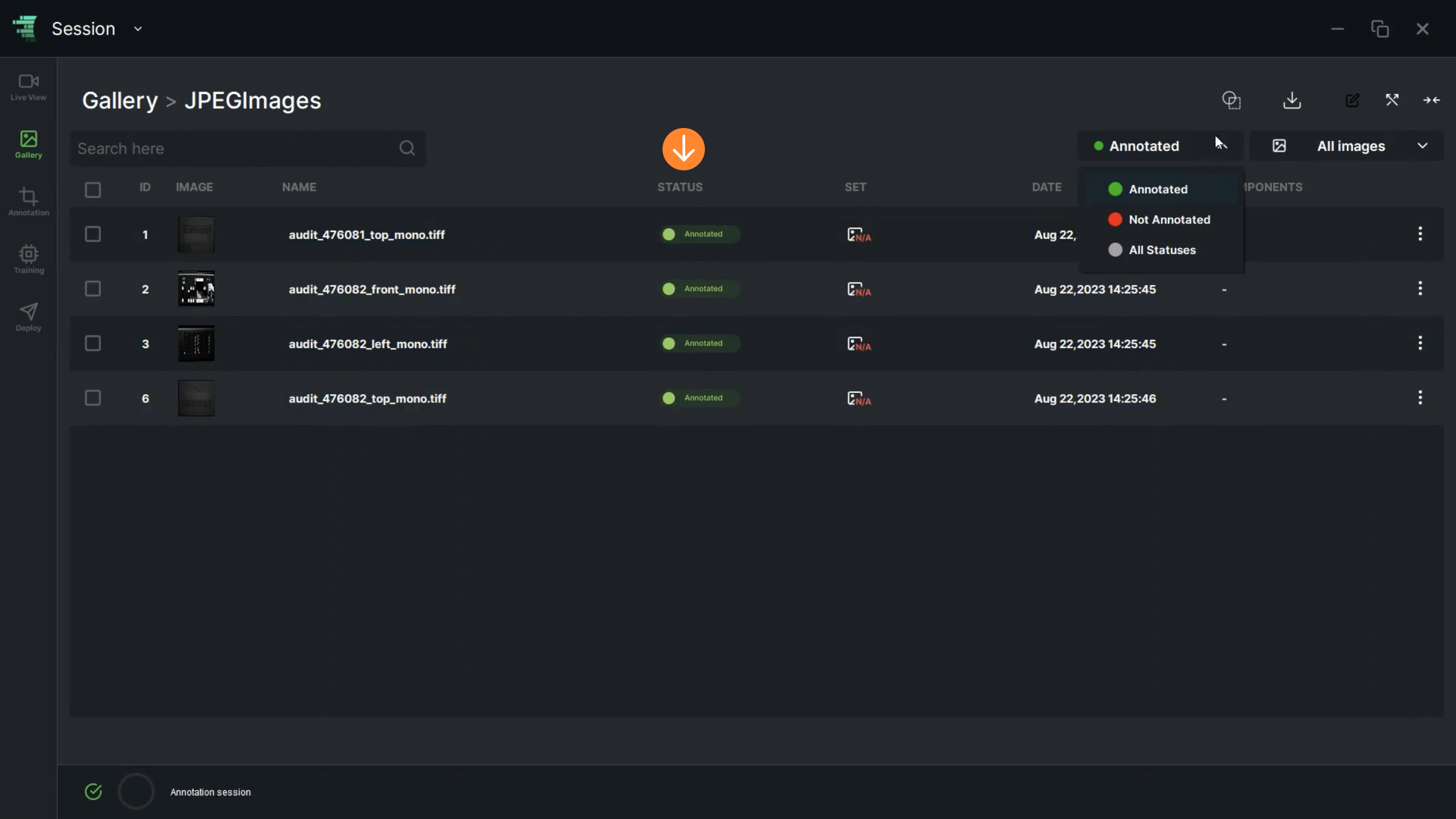

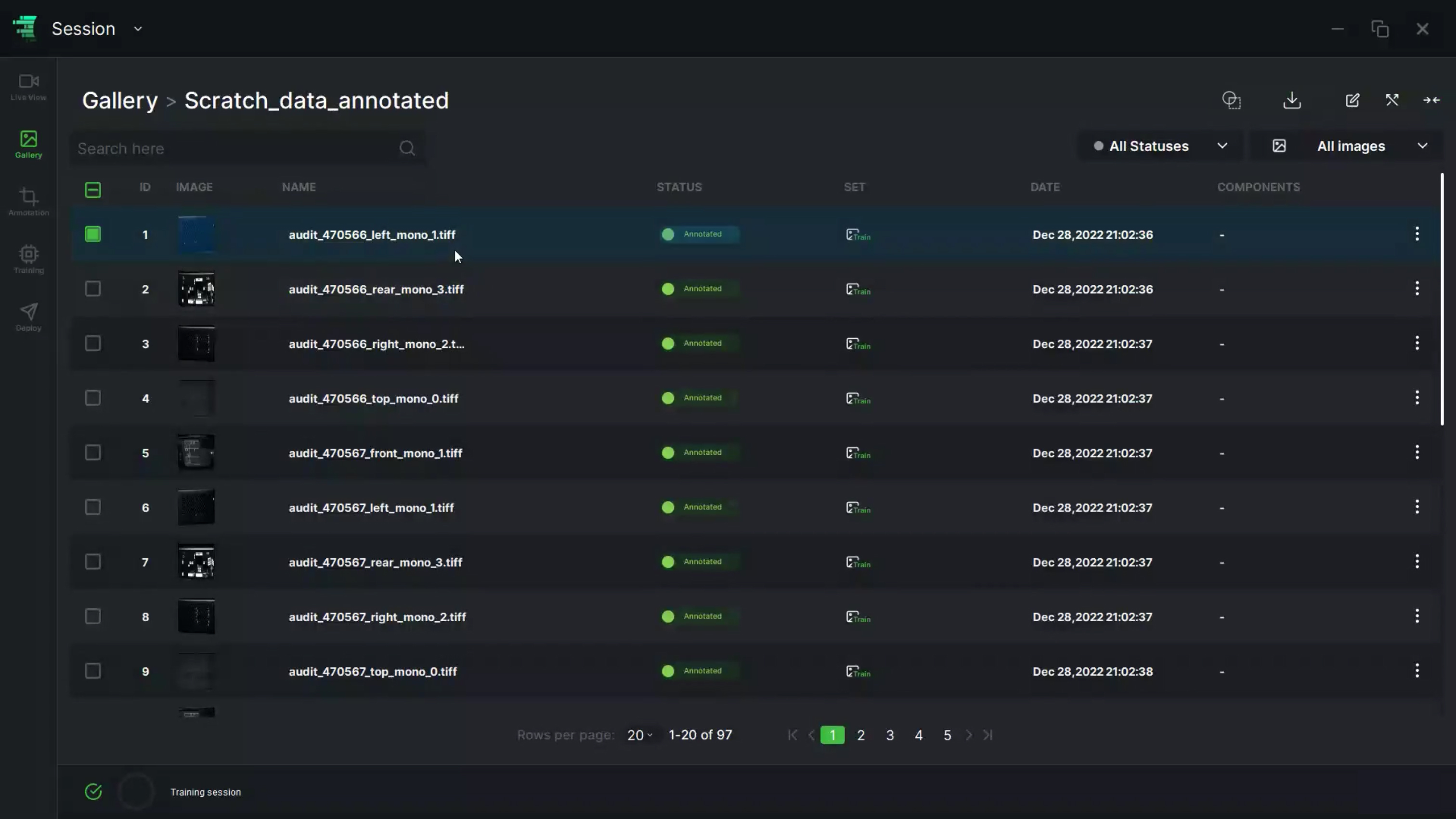
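The effect of “Split Images” and “Clear Sets” on image statuses can be pictured with a short Python sketch. This is only an illustration under stated assumptions: the function names `split_images` and `clear_sets`, the `train_ratio` and `seed` parameters, and the random 80/20 split are hypothetical and do not describe the application's own split options or internals.

```python
import random


def split_images(statuses, train_ratio=0.8, seed=0):
    """Return a copy of `statuses` with every "Unassigned" image set to "Train" or "Test".

    Hypothetical helper: a plain random split, not the application's algorithm.
    """
    unassigned = sorted(name for name, status in statuses.items() if status == "Unassigned")
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    rng.shuffle(unassigned)
    n_train = round(len(unassigned) * train_ratio)

    updated = dict(statuses)
    for index, name in enumerate(unassigned):
        updated[name] = "Train" if index < n_train else "Test"
    return updated


def clear_sets(statuses):
    """Return a copy of `statuses` with every image reset to "Unassigned"."""
    return {name: "Unassigned" for name in statuses}


# Ten images that have not been assigned to any set yet.
images = {f"img_{i:02d}.png": "Unassigned" for i in range(10)}

split = split_images(images)   # statuses become "Train" or "Test"
cleared = clear_sets(split)    # statuses return to "Unassigned"
```

With ten images and a ratio of 0.8, the sketch places eight images in the train set and two in the test set; clearing the sets returns all ten to “Unassigned”.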

11. The “All Statuses” option lets the user filter images by “Annotated” or “Not Annotated” status. When either option is selected, the list view updates automatically to reflect the chosen filter

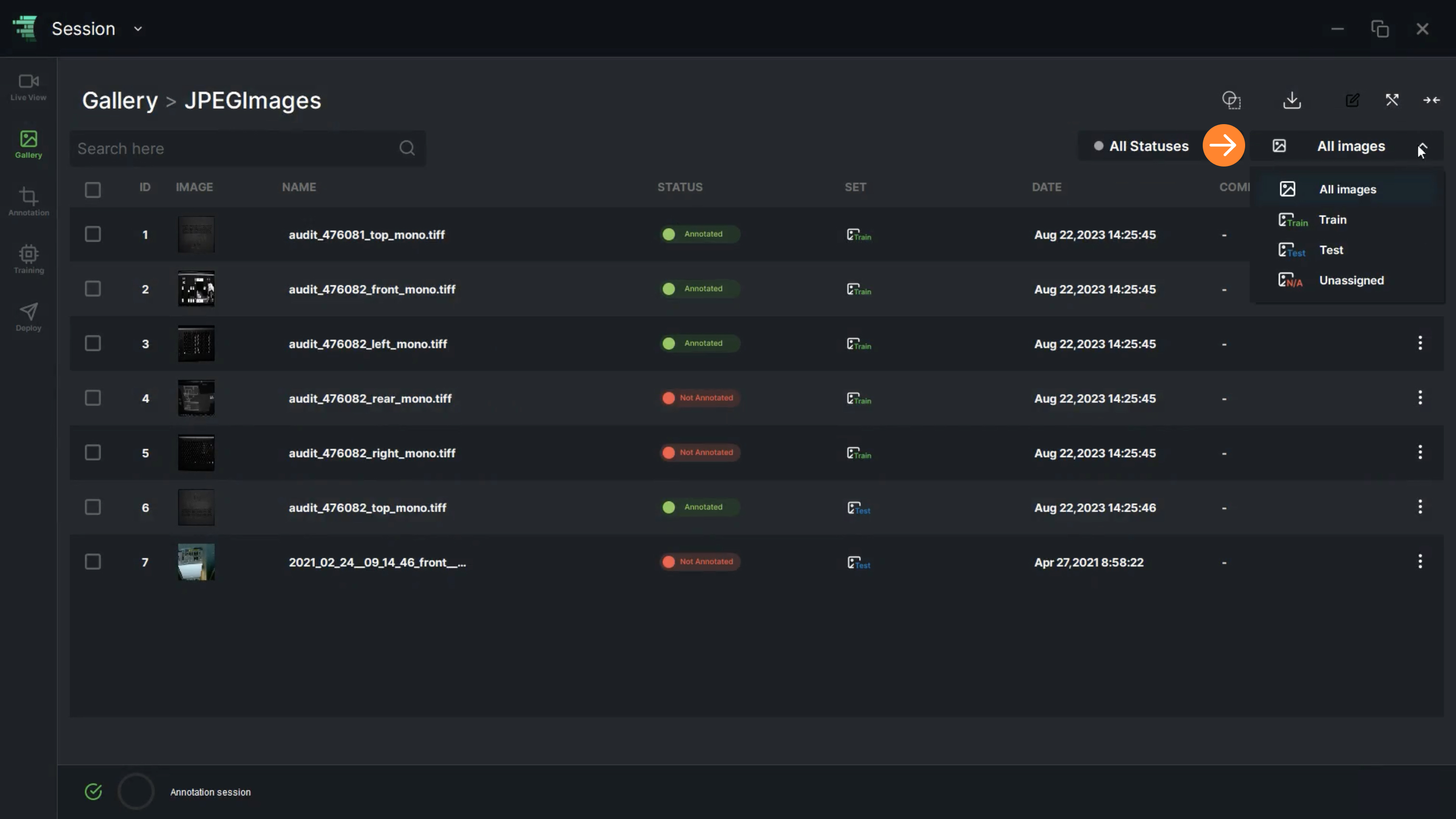

12. “All Images” allows the user to filter the images by Train, Test, or Unassigned status. When any of these options is selected, the list view updates automatically to reflect the chosen filter

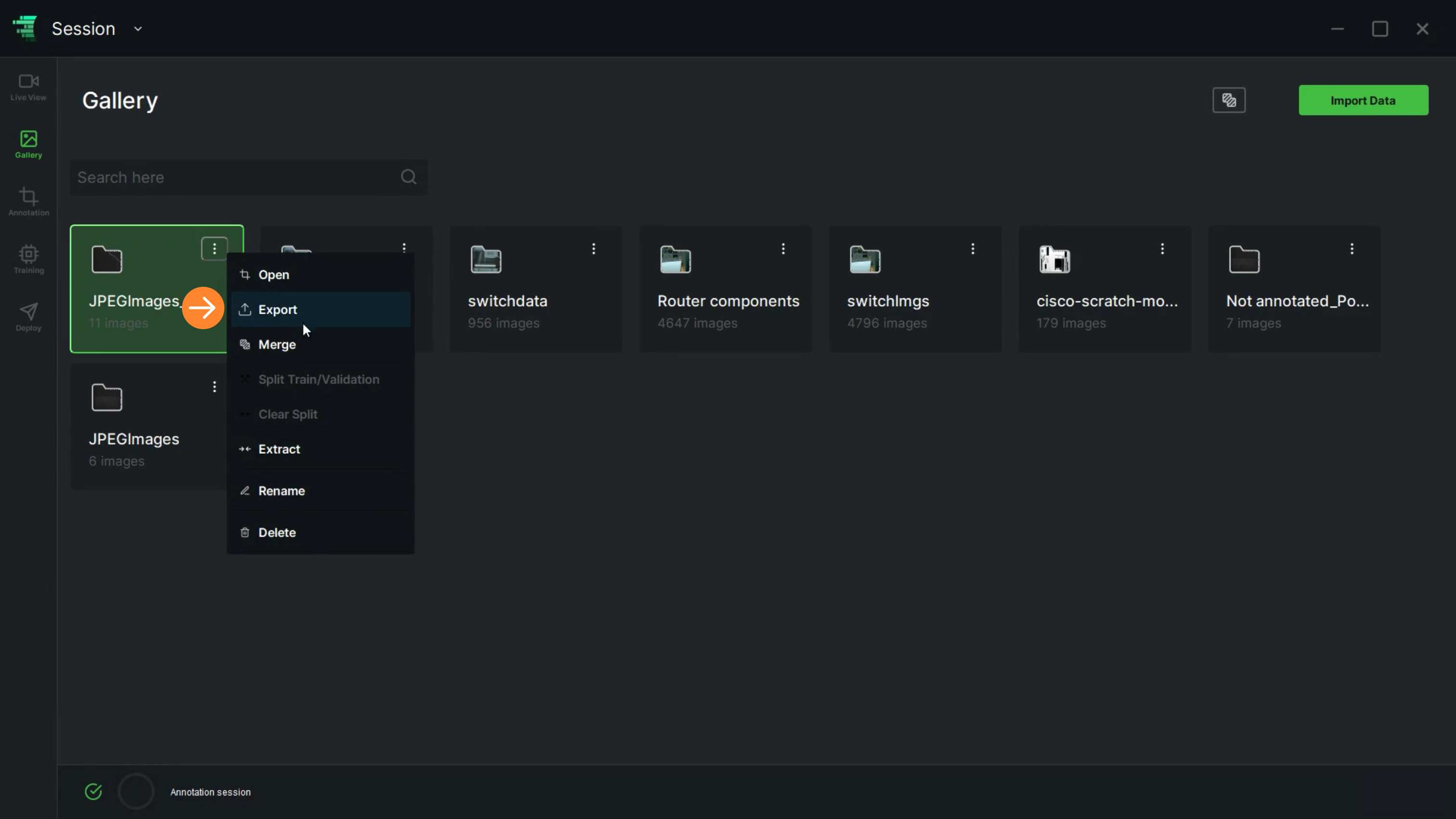

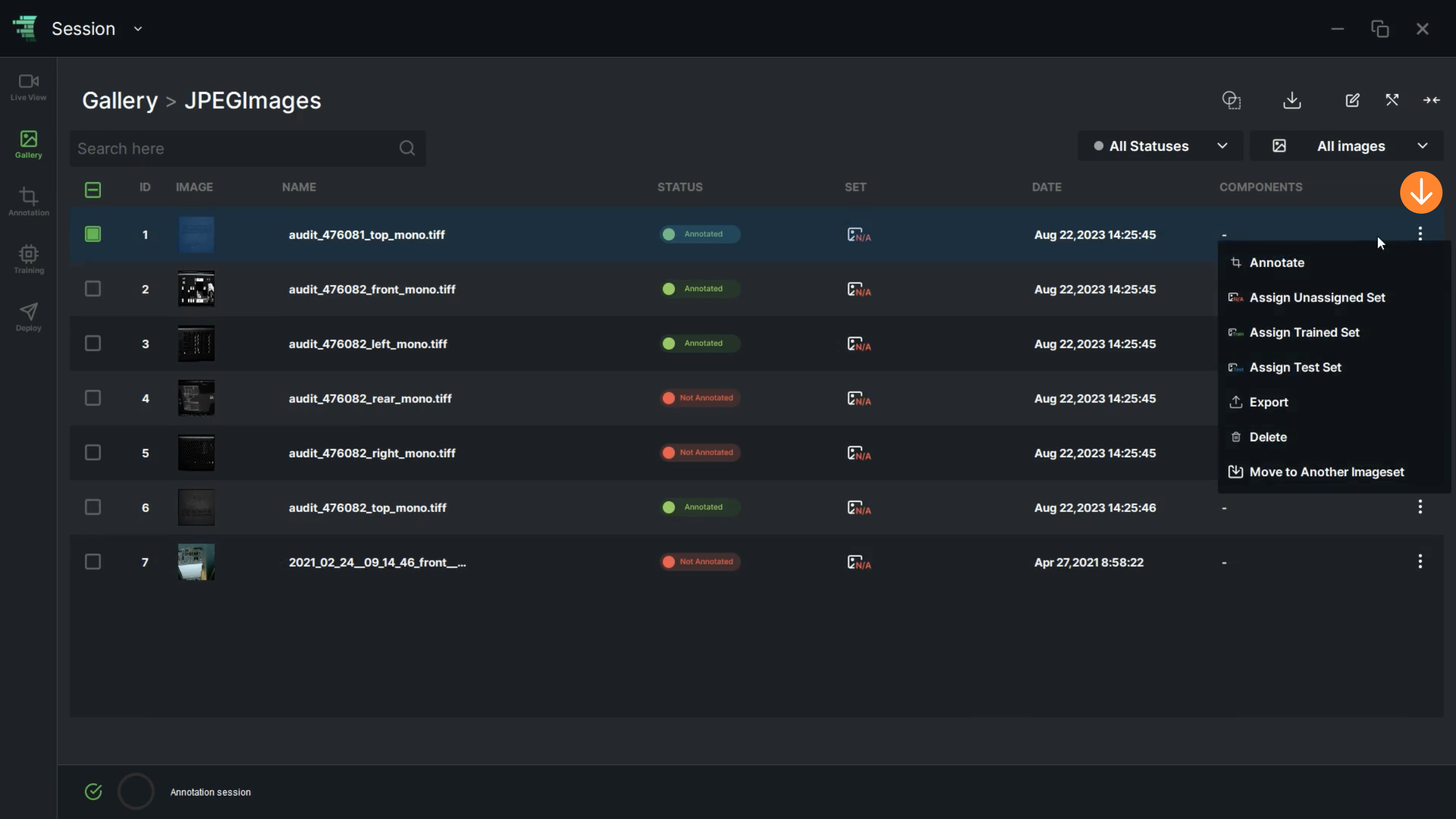
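The two filters can be thought of as independent conditions applied to the image list. The following Python sketch illustrates that idea only; the `ImageEntry` class, its field names, and the `filter_images` function are hypothetical and are not part of the application.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ImageEntry:
    """Hypothetical record for one row of the image list."""
    name: str
    annotated: bool   # True for "Annotated", False for "Not Annotated"
    image_set: str    # "Train", "Test", or "Unassigned"


def filter_images(entries: List[ImageEntry],
                  annotated: Optional[bool] = None,
                  image_set: Optional[str] = None) -> List[ImageEntry]:
    """Keep entries matching both filters; None means no filtering on that field."""
    result = []
    for entry in entries:
        if annotated is not None and entry.annotated != annotated:
            continue
        if image_set is not None and entry.image_set != image_set:
            continue
        result.append(entry)
    return result


entries = [
    ImageEntry("a.png", annotated=True, image_set="Train"),
    ImageEntry("b.png", annotated=False, image_set="Train"),
    ImageEntry("c.png", annotated=True, image_set="Test"),
    ImageEntry("d.png", annotated=False, image_set="Unassigned"),
]

annotated_only = filter_images(entries, annotated=True)
annotated_train = filter_images(entries, annotated=True, image_set="Train")
```

Leaving a filter at `None` corresponds to the default “All Statuses” or “All Images” selection, where no images are hidden.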

13. If the user clicks on the “More” icon (⋮) on the right, a dialog box is displayed containing functions similar to those in “Apply Operations”

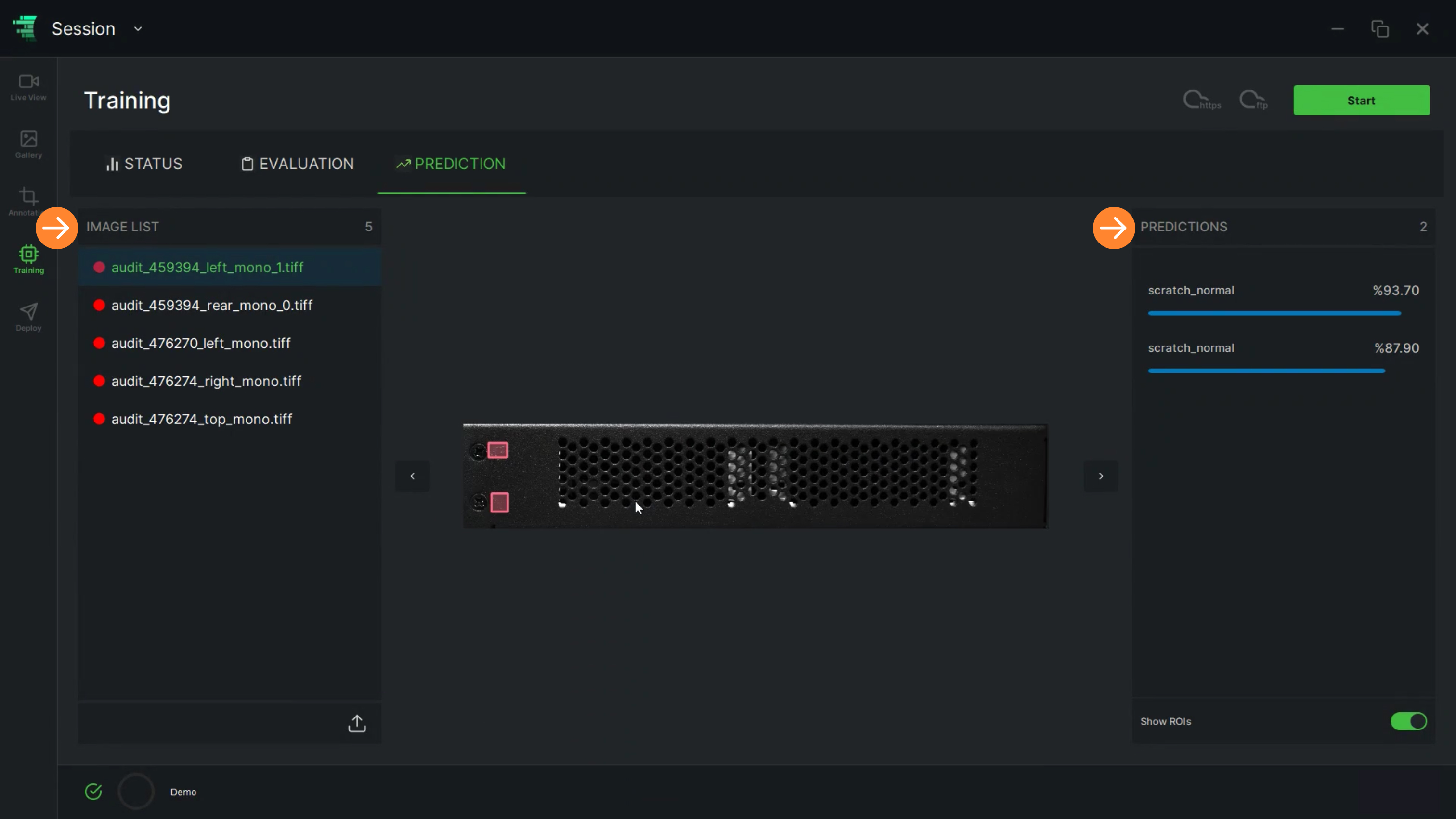

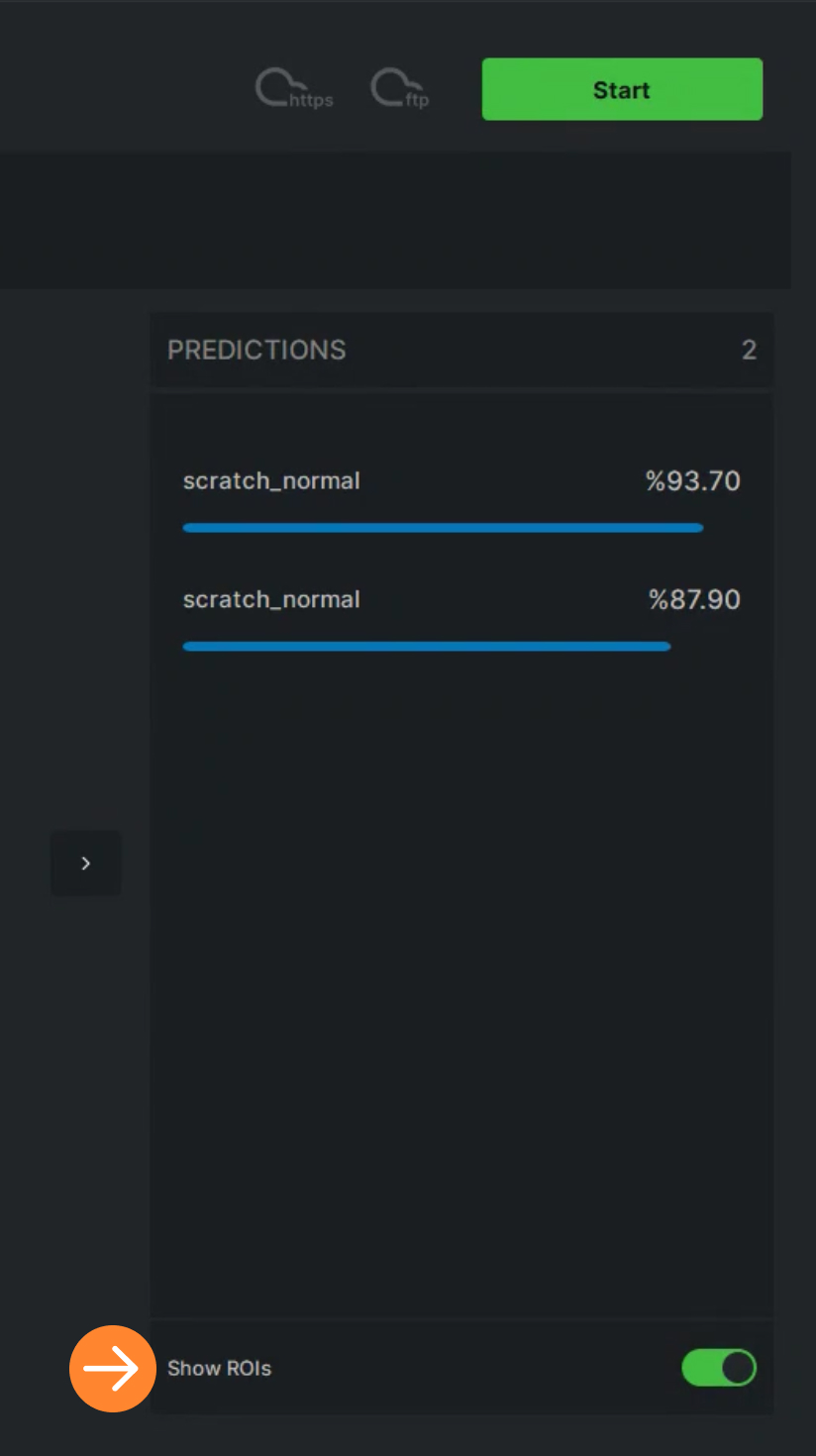

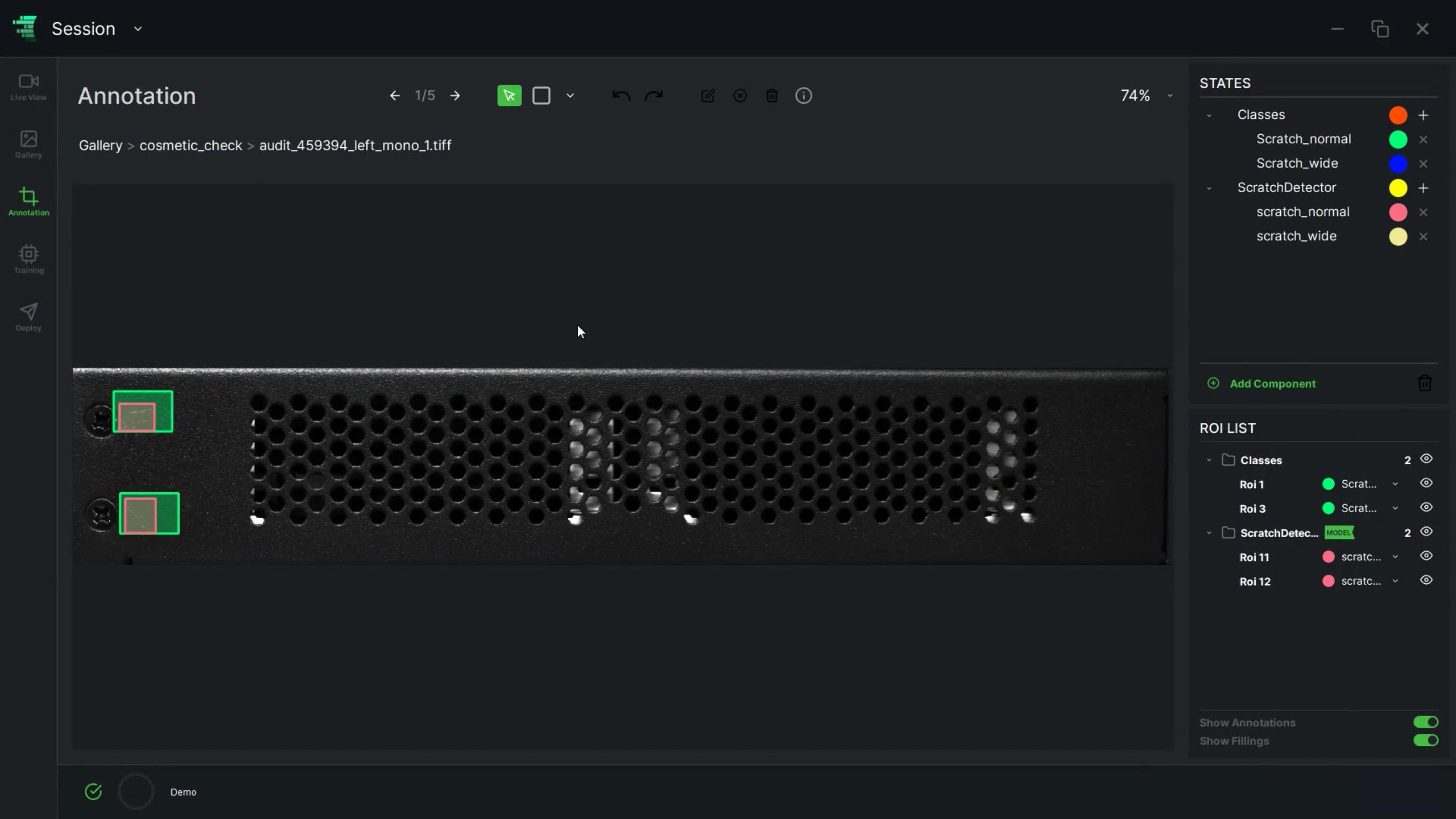

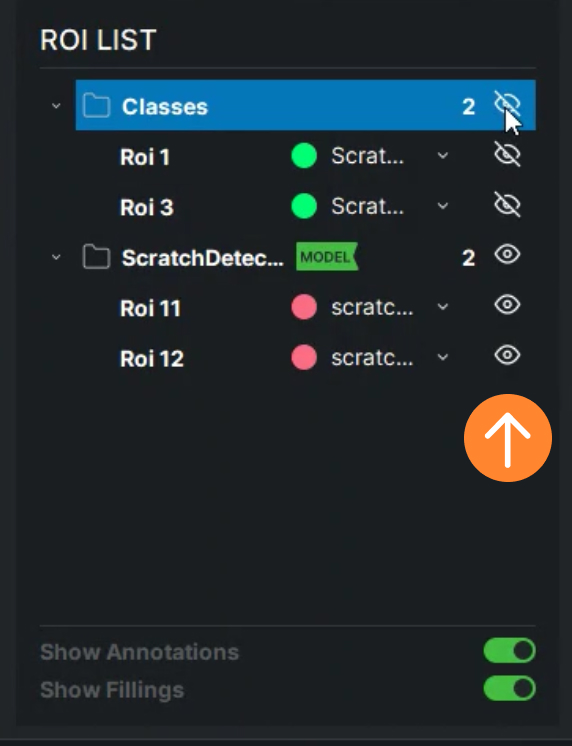

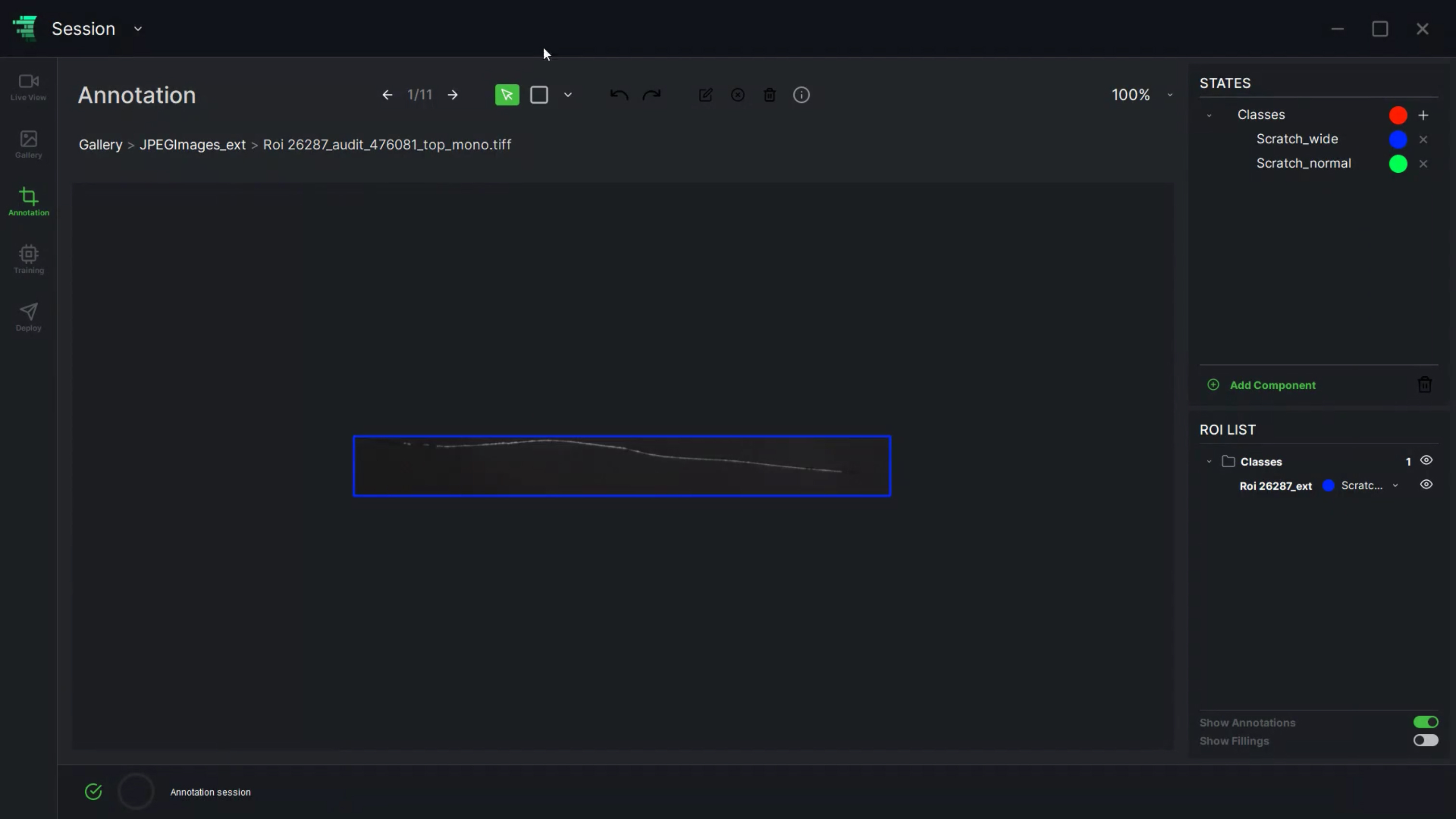

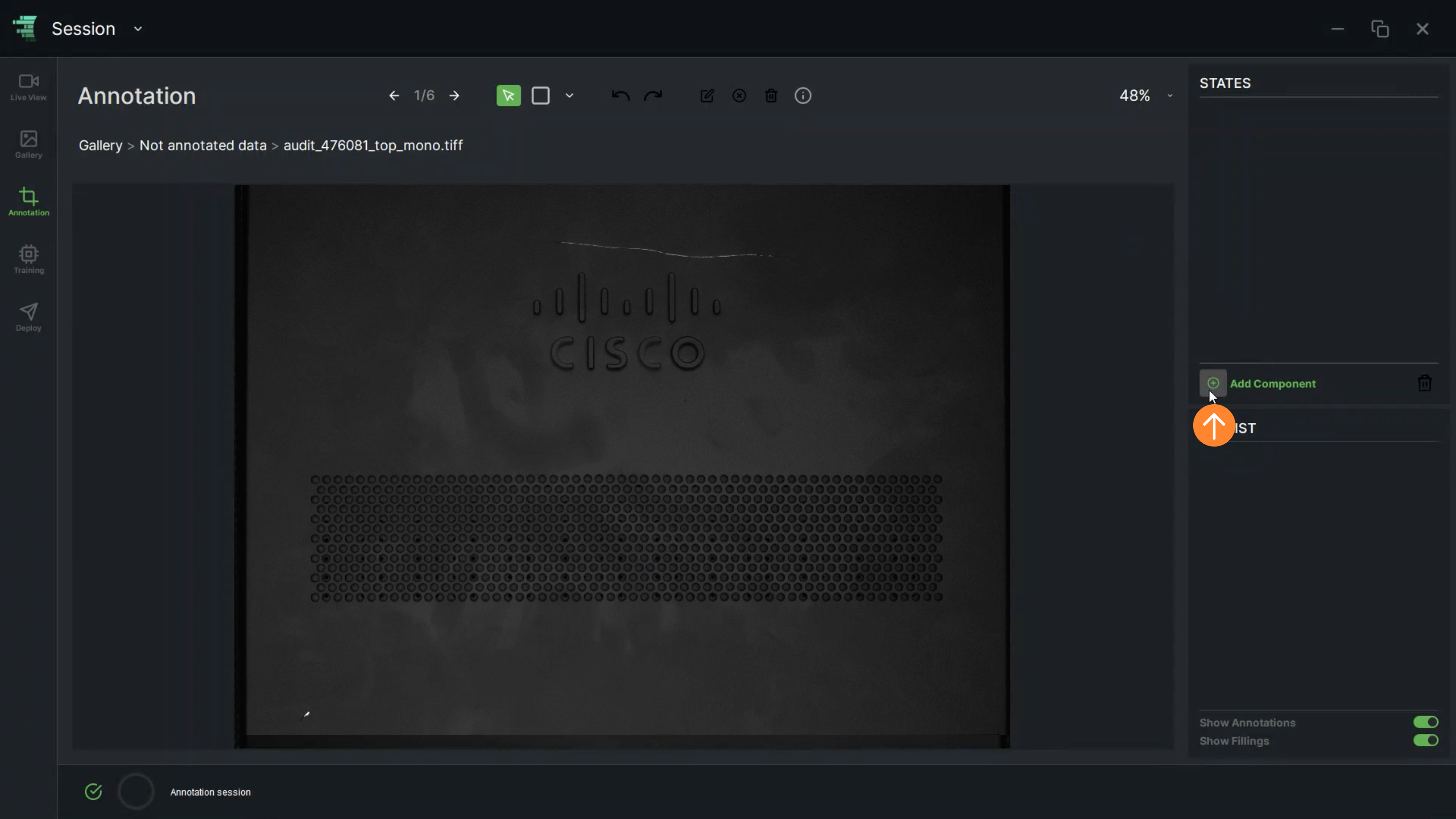

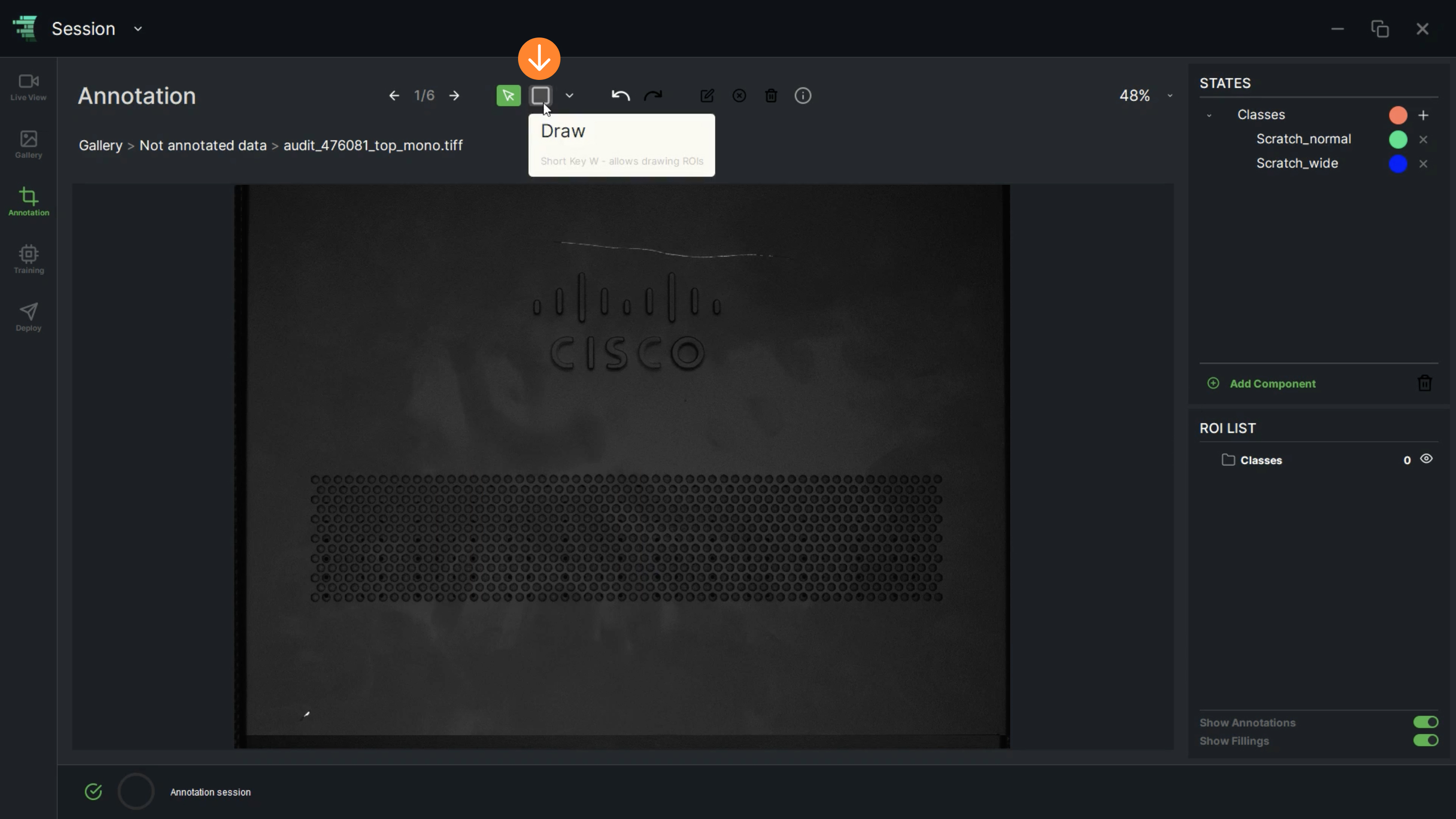

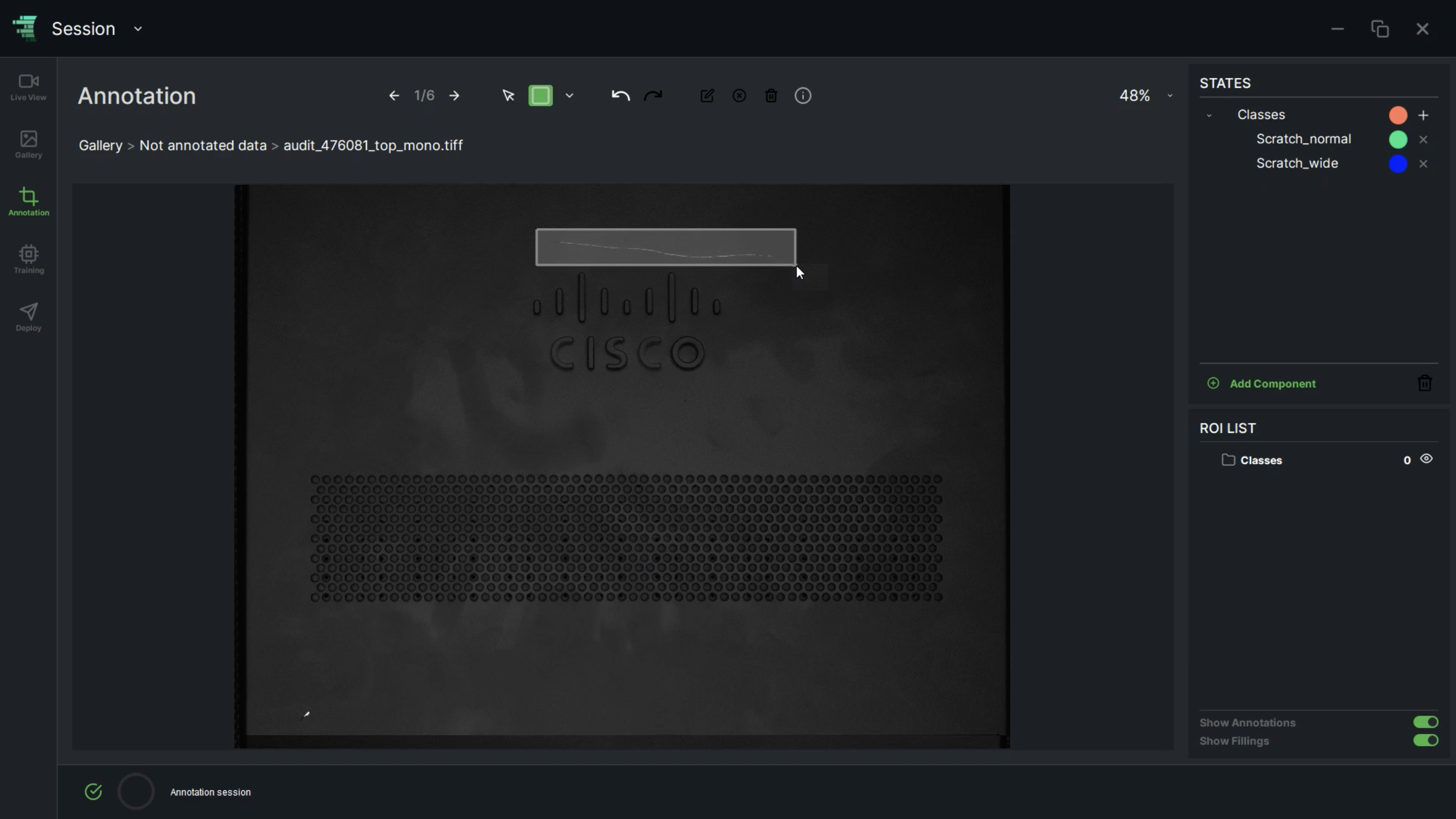

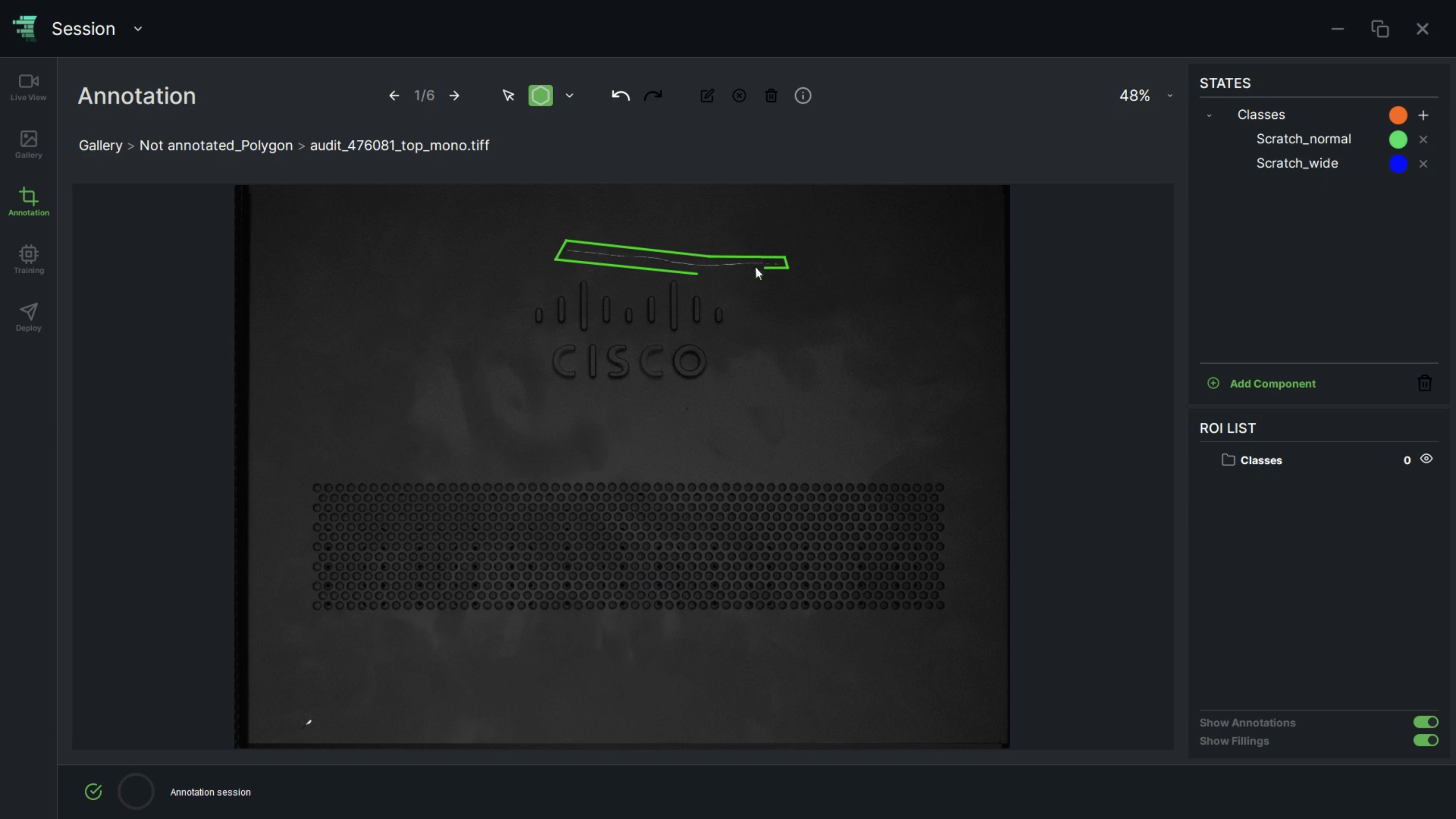

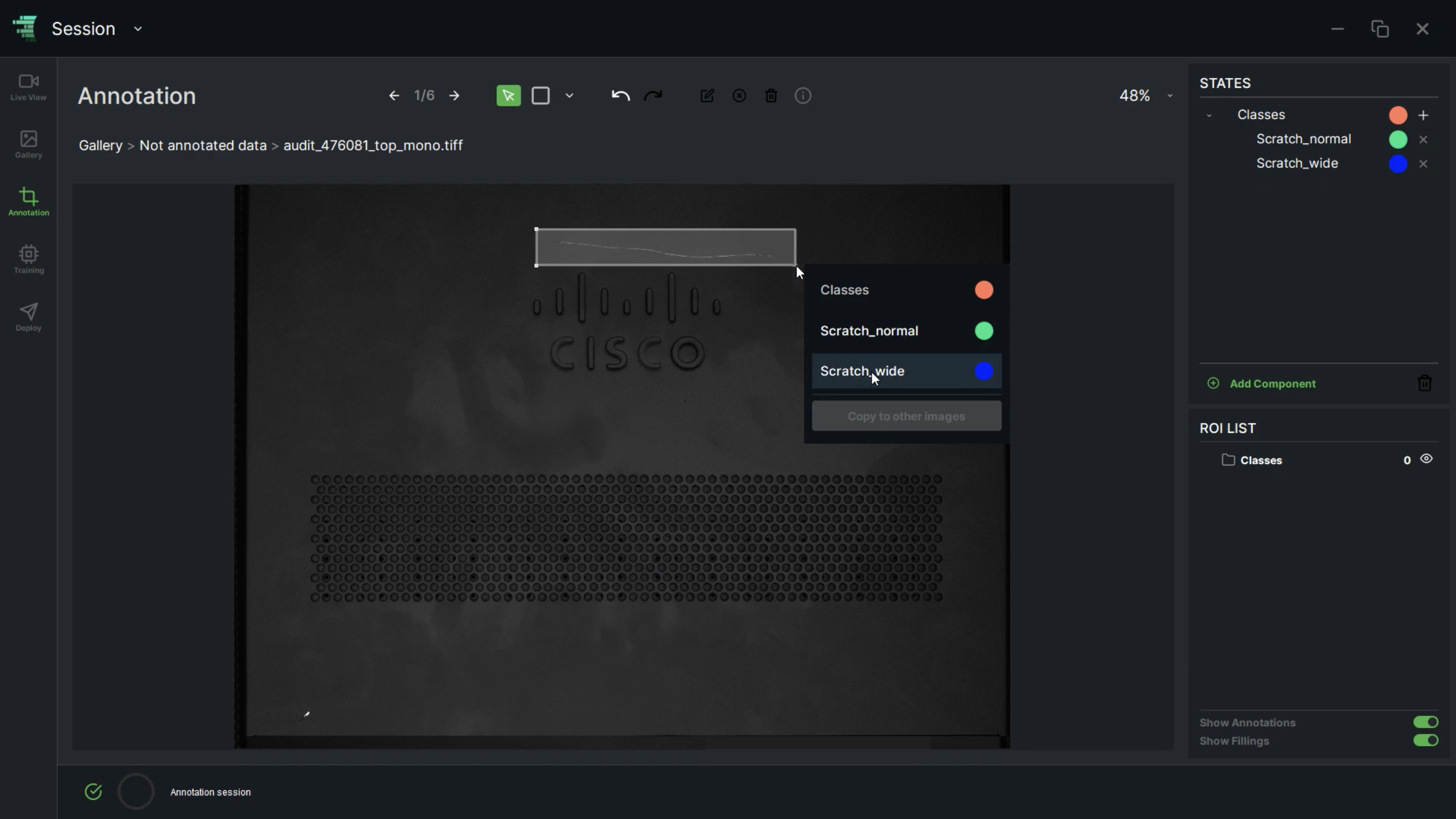

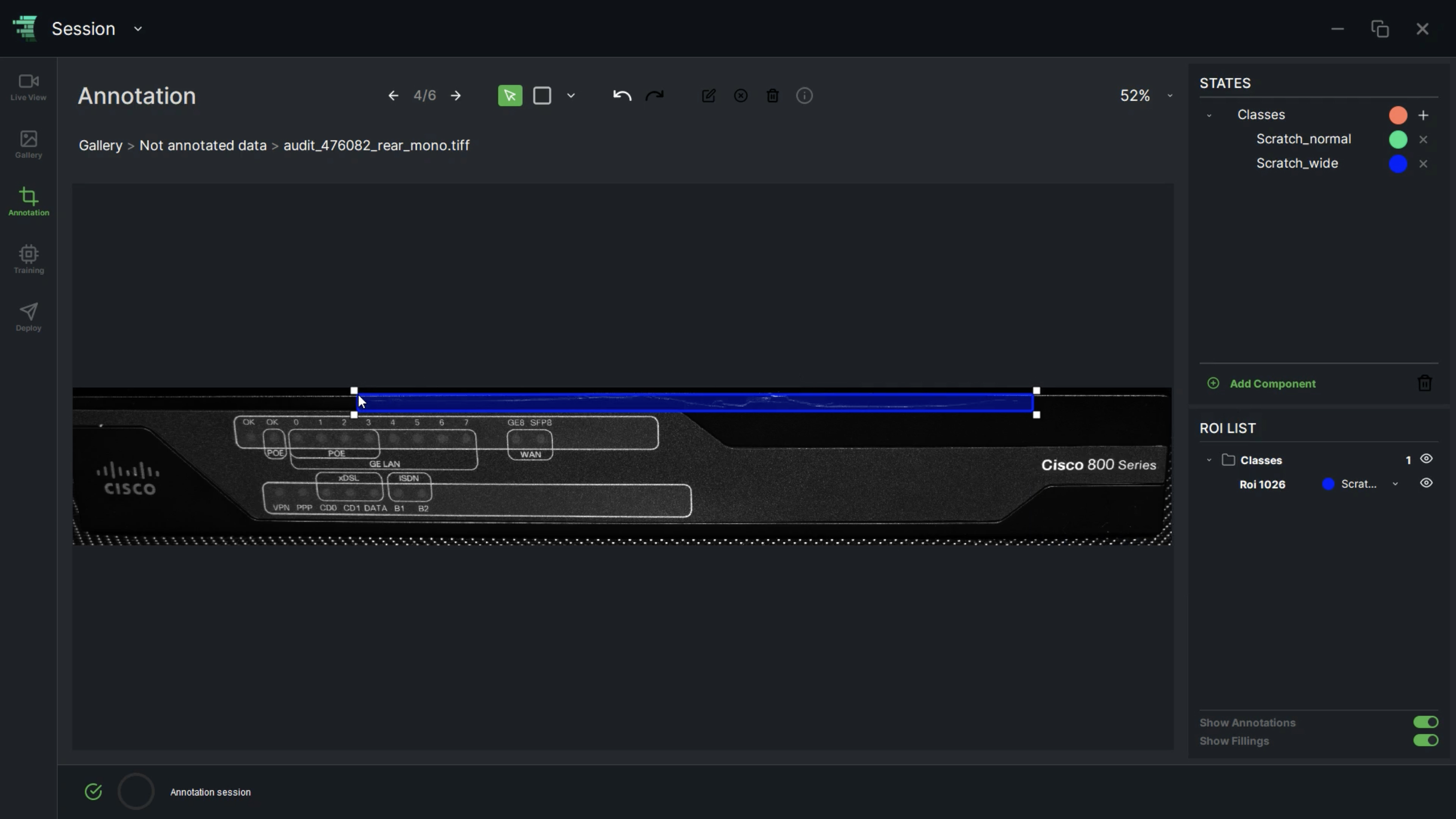

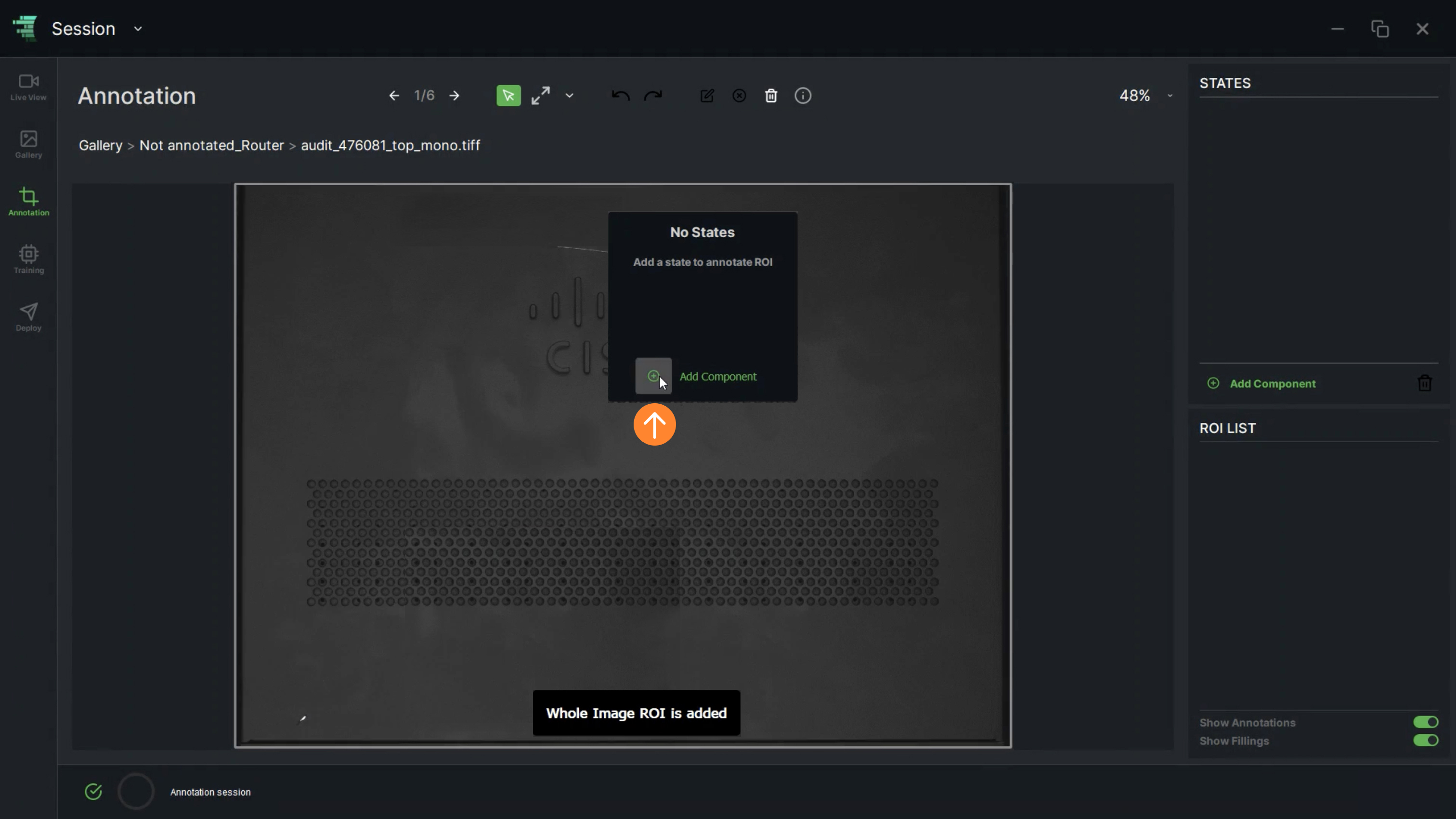

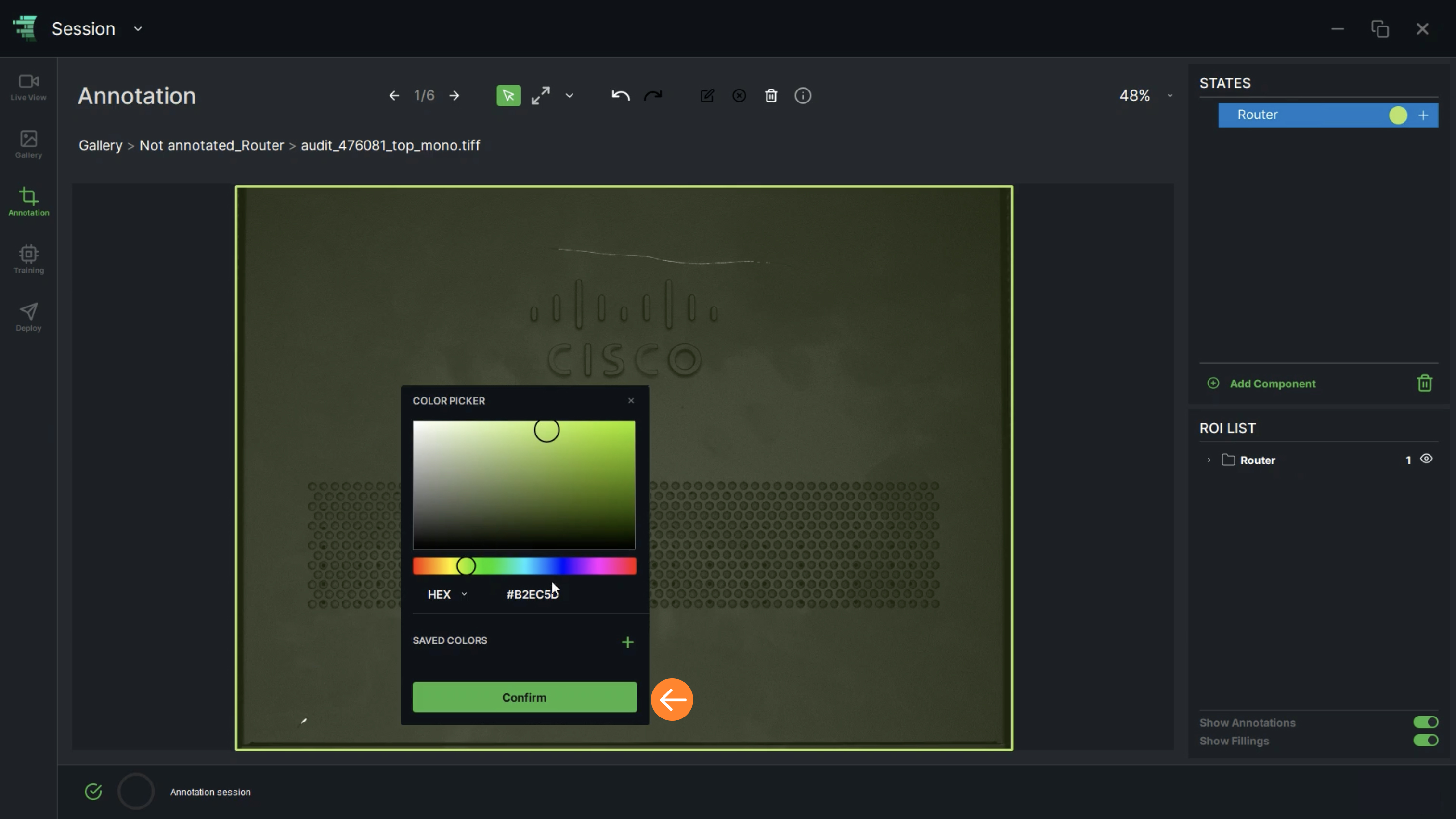

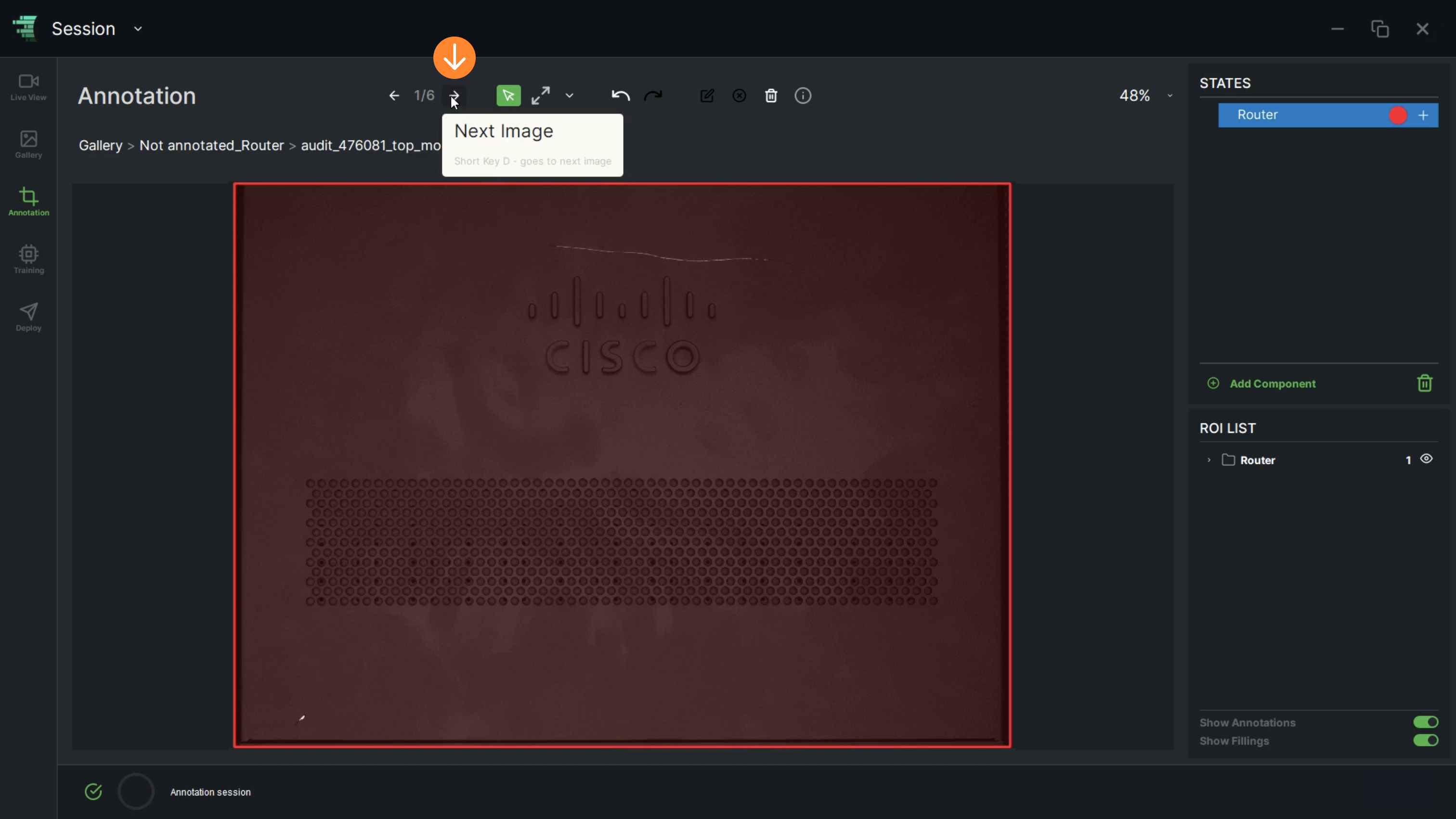

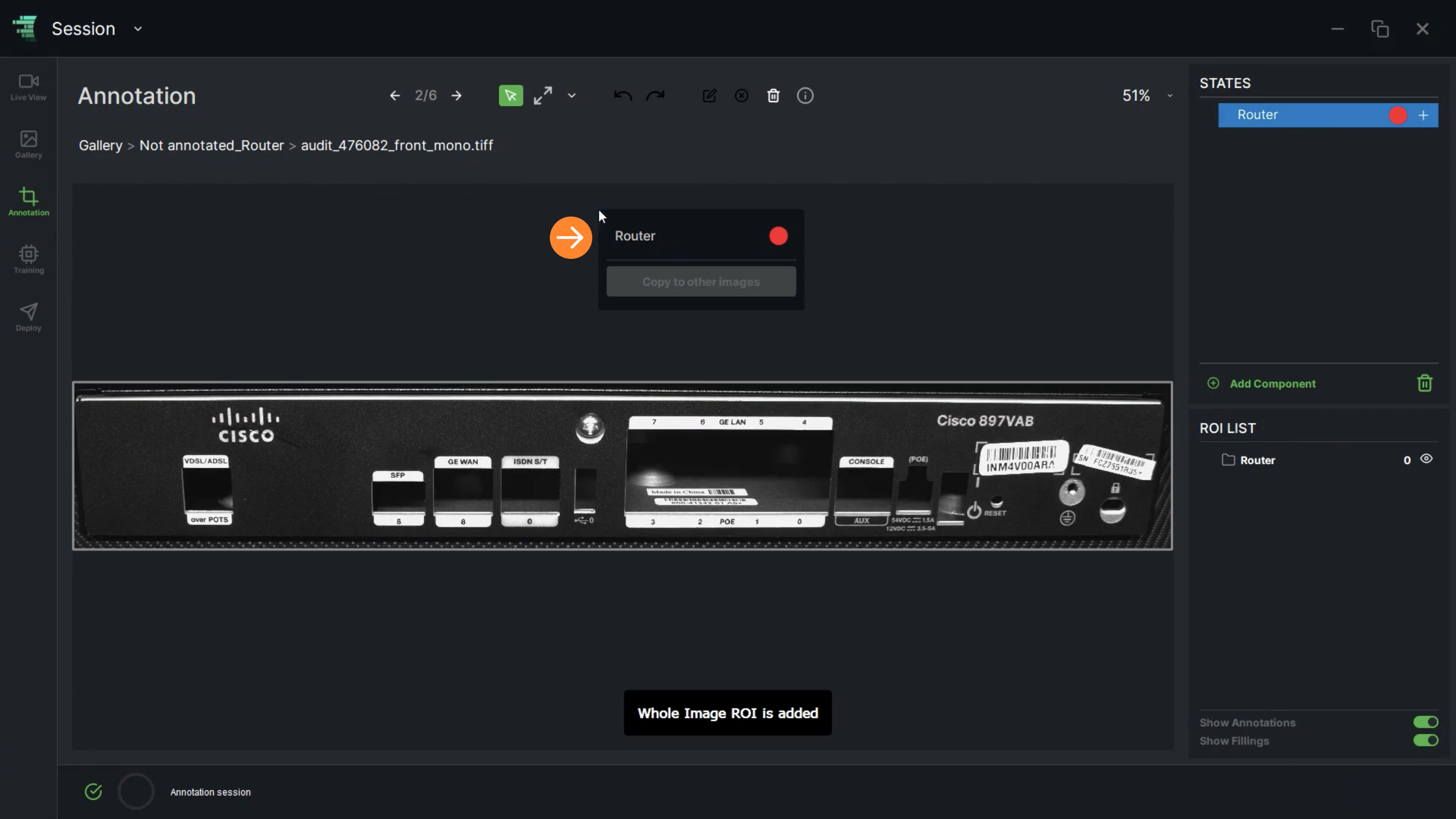

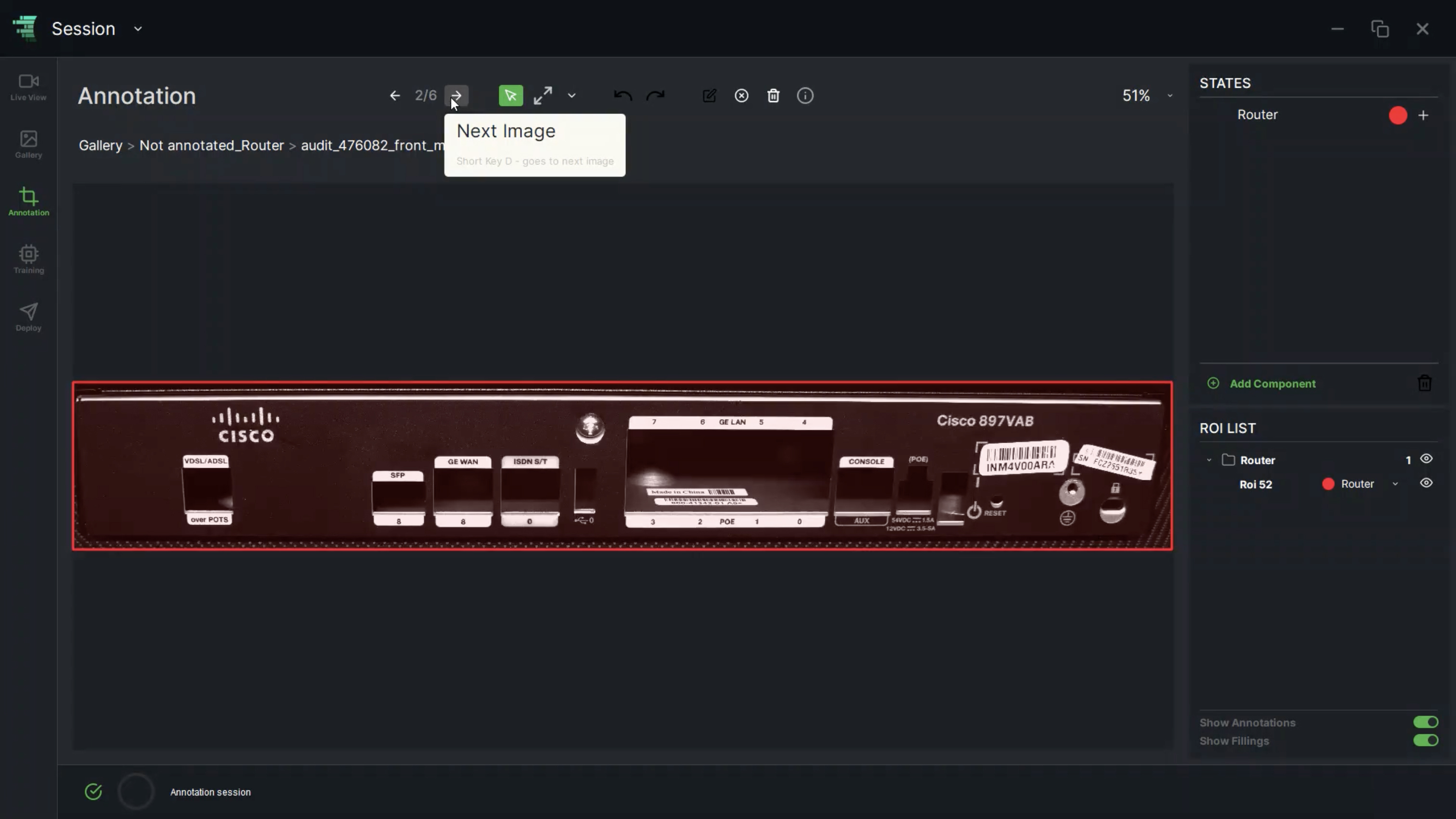

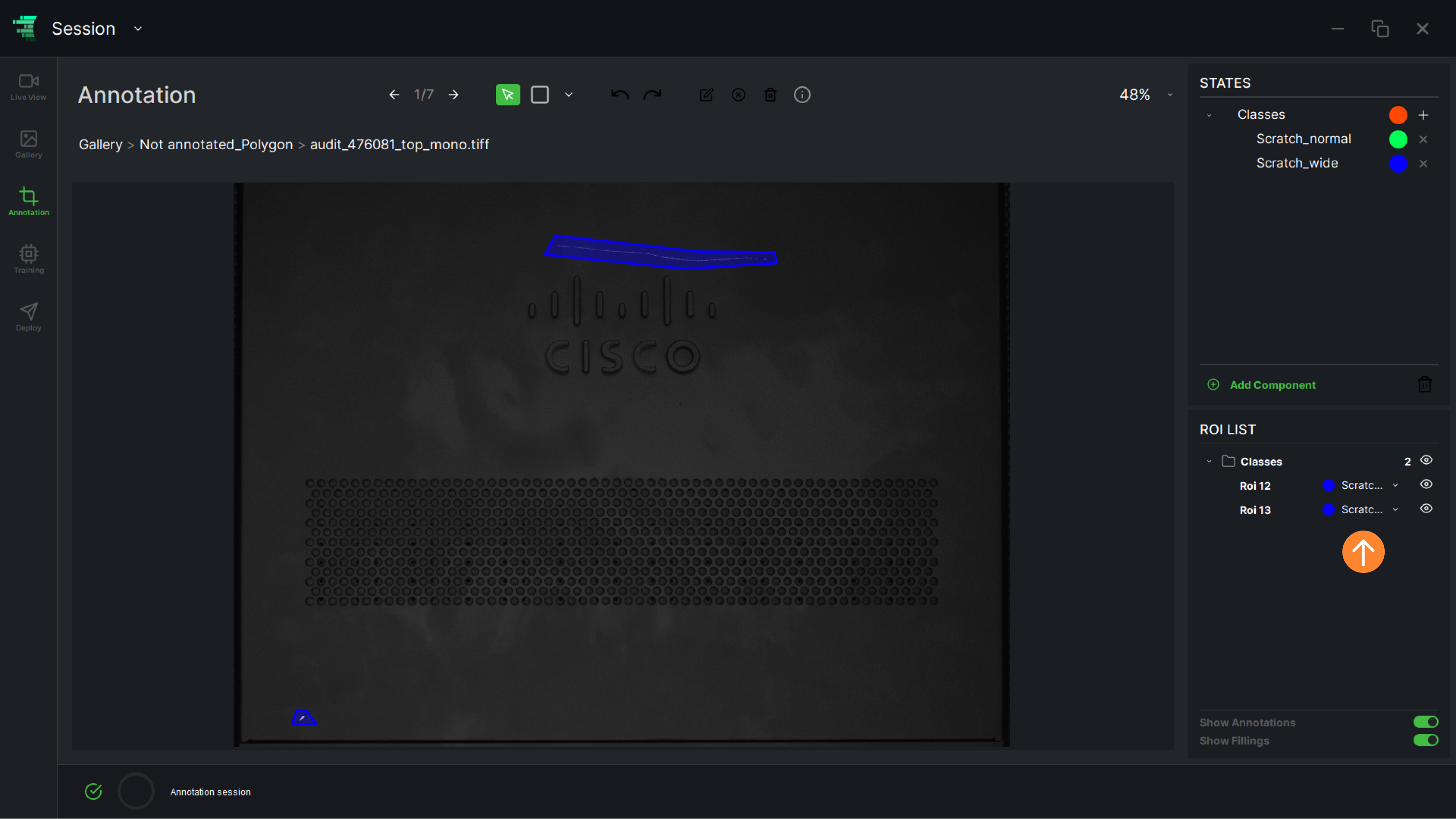

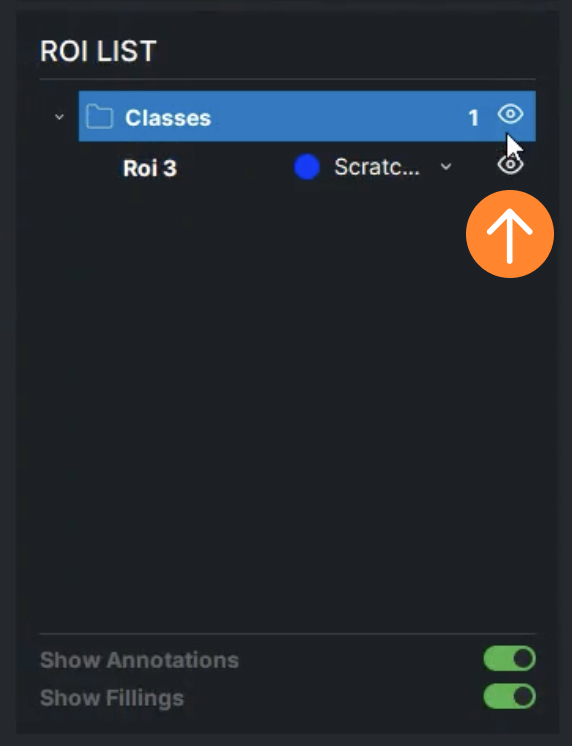

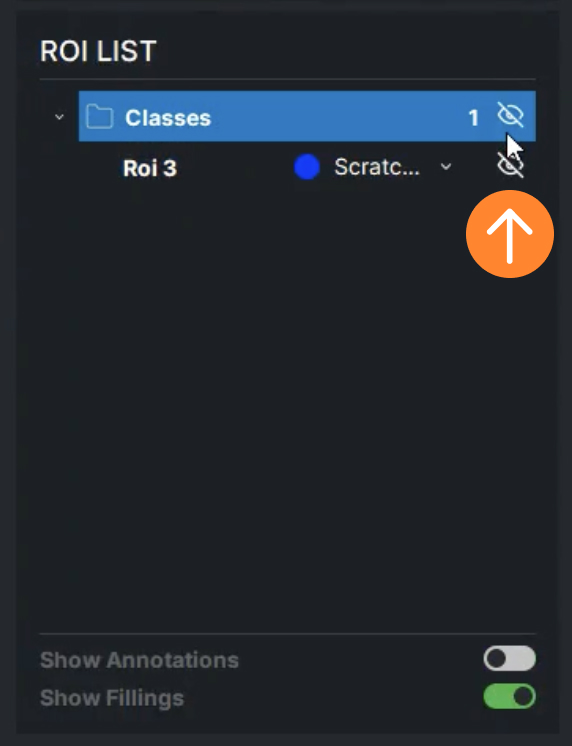

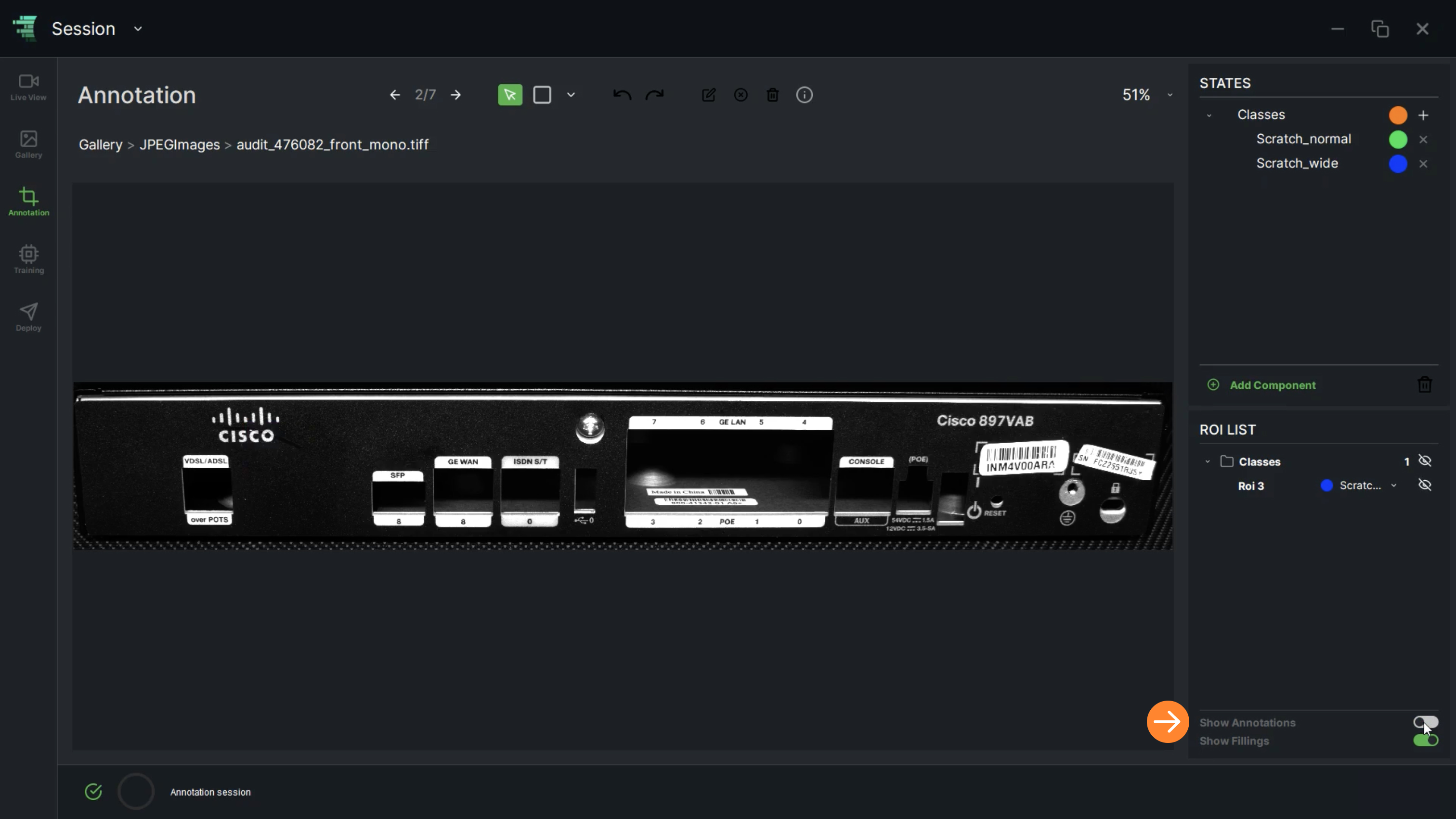

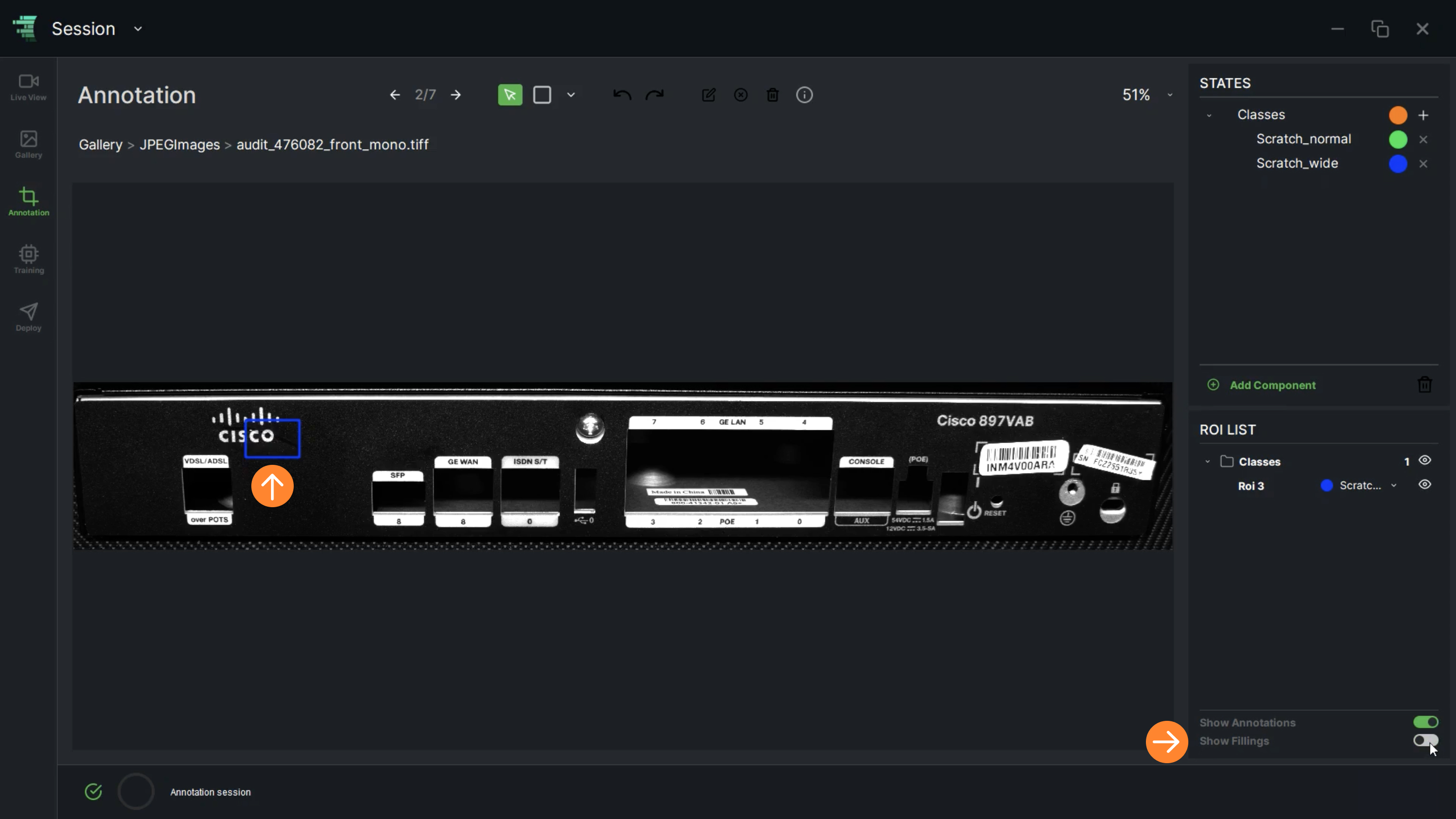

14. In the annotation screen, the ROI LIST displays the defined Regions of Interest. The user can toggle the visibility of these ROIs by clicking on the “View” icon

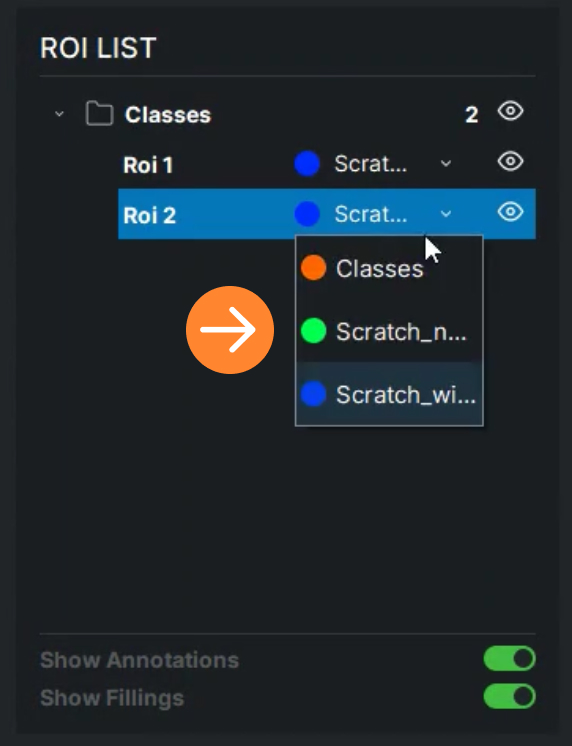

15. The user can change the ROI states by selecting options from the dropdown menu

16. The “Show Annotations” switch allows the user to show or hide annotations

17. The “Show Filling” switch allows the user to show or hide the ROI fillings. If the fillings are hidden, only the edges are displayed
